Medicine and Health

Economic evaluation for medical artificial intelligence: accuracy vs. cost-effectiveness in a diabetic retinopathy screening case

Y. Wang, C. Liu, et al.

Artificial intelligence has growing potential in early disease detection through medical image analysis and is increasingly used in health screening. Diabetic retinopathy (DR) screening is a prime use case, where many AI products exhibit high diagnostic accuracy. Yet their cost-effectiveness in long-running, real-world screening is often overlooked, and the trade-off between diagnostic performance (sensitivity versus specificity) and economic value has not been adequately addressed. Increasing sensitivity identifies more high-risk patients but raises costs; increasing specificity reduces unnecessary referrals but risks missed cases. Prior studies varied AI sensitivity and specificity independently in theoretical analyses, overlooking the practical inverse correlation between them in real tools. This study asks whether the best-performing AI model is also the most cost-effective in real-world screening, and how to select among multiple accurate AI models given regional disease prevalence and health system willingness-to-pay (WTP). Using data from China's nationwide Lifeline Express DR Screening Program, we evaluate cost-effectiveness across a wide range of AI operating points to guide real-world AI selection and implementation.

Existing DR AI models report sensitivities of roughly 85–95% and specificities of 74–98%. Prior economic evaluations often manipulated sensitivity and specificity independently, not reflecting their inverse relationship on a receiver operating characteristic curve. Evidence on the real-world cost-effectiveness trade-off across AI operating points is limited. International minimum performance thresholds vary: the UK and Australia have recommended high specificity thresholds (often ≥95%) with variable sensitivity (60–80%), and the US FDA authorized an AI device with 85.0% sensitivity and 82.5% specificity. Previous studies in China largely compared single AI strategies versus manual screening and tended to favor very high specificity models, leaving a gap in understanding optimal sensitivity–specificity balances under varying prevalence and WTP conditions.

Design and perspective: A cost-effectiveness analysis was conducted from a societal perspective using real-world data from the Lifeline Express DR Screening Program in China. A hybrid decision tree/Markov model simulated annual AI-based DR screening over 30 one-year cycles in 251,535 adults with diabetes (mean age 60 years).

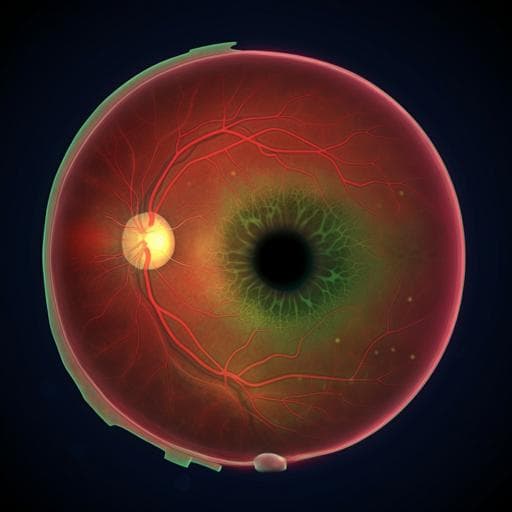

Screening and grading: Non-cycloplegic fundus photographs (two per eye: macula-centered and optic disc-centered) were taken using local devices (865,152 images in total). DR severity was graded per NHS diabetic eye screening guidelines (R0, R1, R2, R3s, R3a). Referable DR was defined as R2 or worse; ungradable images were treated as positive for referral. Adjudicated grades from human graders at five central grading centers served as the reference standard.

AI model and operating points: A validated multi-class AI model produced probabilities for the five DR grades. Adjusting grade-level decision thresholds (implemented in Python 3.6) generated 1100 distinct sensitivity/specificity operating points along the ROC curve in two steps: first, thresholds for R2/R3s/R3a were adjusted to reduce false positives; second, thresholds for R0/R1 were adjusted to reduce false negatives. The status quo scenario was the operating point with maximum AUC (sensitivity 93.3%, specificity 87.7%, AUC 0.933). Across all 1100 points, sensitivity ranged from 69.8% to 99.4% and specificity from 31.9% to 92.6%.
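The exact two-step threshold procedure is not given here, so the sketch below uses a simplified stand-in: each grade's probability is rescaled by a weight before taking the argmax, and up-weighting the referable grades (R2, R3s, R3a) trades specificity for sensitivity. The weights, the four example cases, and the names `predict_referable` and `operating_point` are hypothetical; ungradable images (`None`) are flagged as referable, as in the program.

```python
from typing import Dict, List, Optional, Sequence, Tuple

GRADES = ("R0", "R1", "R2", "R3s", "R3a")
REFERABLE = {"R2", "R3s", "R3a"}  # referable DR = R2 or worse


def predict_referable(prob: Optional[Sequence[float]], weights: Dict[str, float]) -> bool:
    """Flag a case for referral; None marks an ungradable image (treated as positive)."""
    if prob is None:
        return True
    # Hypothetical stand-in for threshold tuning: weight each grade, then argmax.
    scores = [p * weights[g] for p, g in zip(prob, GRADES)]
    best = max(range(len(GRADES)), key=lambda i: scores[i])
    return GRADES[best] in REFERABLE


def operating_point(
    probs: List[Optional[Sequence[float]]],
    truths: List[str],
    weights: Dict[str, float],
) -> Tuple[float, float]:
    """Sensitivity and specificity for referable DR at one weight setting."""
    tp = fn = tn = fp = 0
    for prob, truth in zip(probs, truths):
        flagged = predict_referable(prob, weights)
        if truth in REFERABLE:
            if flagged:
                tp += 1
            else:
                fn += 1
        elif flagged:
            fp += 1
        else:
            tn += 1
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical model outputs (R0, R1, R2, R3s, R3a) with reference grades.
probs = [
    (0.70, 0.20, 0.05, 0.03, 0.02),
    (0.30, 0.35, 0.30, 0.03, 0.02),
    (0.40, 0.15, 0.35, 0.05, 0.05),
    (0.05, 0.05, 0.20, 0.60, 0.10),
]
truths = ["R0", "R1", "R2", "R3s"]
neutral = {g: 1.0 for g in GRADES}
boost_referable = {**neutral, "R2": 1.5, "R3s": 1.5, "R3a": 1.5}
print(operating_point(probs, truths, neutral))
print(operating_point(probs, truths, boost_referable))
```

Sweeping such weights over a grid yields a family of operating points; here the neutral weights give higher specificity, and boosting the referable grades catches the missed R2 case at the cost of one false positive.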

Model structure: The decision tree captured annual screening, referral, and treatment decisions. A Markov model tracked five health states: non-referable DR, referable DR, treated referable DR, blindness, and death. Annual transitions included progression from non-referable to referable DR, treatment of referable DR, and progression to blindness from the referable or treated referable states; age-adjusted mortality applied to all living states.
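The coupling between the decision tree and the Markov model can be sketched as a cohort trace. In this minimal sketch, the decision tree collapses into one number per cycle, the probability that a referable case is treated (sensitivity × referral compliance × treatment compliance). The 50%/70% compliance values and the 7.44% baseline prevalence are the study's stated parameters; every other transition probability, and the single death probability that ignores age adjustment, is hypothetical.

```python
from typing import List

STATES = ("non-referable", "referable", "treated", "blind", "dead")


def transition_matrix(
    sensitivity: float,
    referral: float = 0.50,     # referral compliance (study parameter)
    treatment: float = 0.70,    # treatment compliance (study parameter)
    p_progress: float = 0.03,   # hypothetical: non-referable -> referable
    p_blind_ref: float = 0.05,  # hypothetical: referable -> blind
    p_blind_trt: float = 0.01,  # hypothetical: treated -> blind
    p_death: float = 0.02,      # hypothetical and not age-adjusted
) -> List[List[float]]:
    """Annual transition matrix; rows are from-states, columns are to-states."""
    # Decision-tree step: treated this cycle only if flagged, referred, and treated.
    p_treat = sensitivity * referral * treatment
    exits = [
        {"referable": p_progress, "dead": p_death},
        {"treated": p_treat, "blind": p_blind_ref, "dead": p_death},
        {"blind": p_blind_trt, "dead": p_death},
        {"dead": p_death},
        {},  # death is absorbing
    ]
    matrix = []
    for i, row in enumerate(exits):
        probs = [row.get(s, 0.0) for s in STATES]
        probs[i] = 1.0 - sum(probs)  # remaining mass stays in the same state
        matrix.append(probs)
    return matrix


def run_cohort(matrix: List[List[float]], start: List[float], cycles: int) -> List[List[float]]:
    """State distribution of the cohort at cycle 0..cycles."""
    trace = [list(start)]
    n = len(STATES)
    for _ in range(cycles):
        prev = trace[-1]
        trace.append([sum(prev[i] * matrix[i][j] for i in range(n)) for j in range(n)])
    return trace


start = [1 - 0.0744, 0.0744, 0.0, 0.0, 0.0]  # baseline referable prevalence 7.44%
trace = run_cohort(transition_matrix(0.933), start, cycles=30)
print([round(x, 4) for x in trace[-1]])
```

False-positive referrals change costs rather than health-state transitions, so they do not appear in this matrix; a fuller model would attach referral and examination costs to them in each cycle.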

Key assumptions and parameters: Baseline referable DR prevalence was 7.44% (program estimate). Referral compliance was assumed to be 50% and treatment compliance 70%, based on program data and the literature. Transition probabilities were derived from Chinese studies; where necessary, incidence or progression rates were converted to annual probabilities using p(t) = 1 − e^(−rt), where r is the rate and t is the time interval in years. Mortality was based on Chinese life tables, with disease-specific hazard ratios from the literature.
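The rate-to-probability conversion can be written as a few helper functions. The sketch also shows one common way, assumed here rather than taken from the study, of applying a hazard ratio to a life-table death probability on the rate scale, which simplifies to 1 − (1 − q)^HR. All input values are hypothetical.

```python
import math


def rate_to_prob(rate: float, t: float = 1.0) -> float:
    """Probability of at least one event within time t at a constant rate: 1 - exp(-r t)."""
    return 1.0 - math.exp(-rate * t)


def prob_to_rate(p: float, t: float = 1.0) -> float:
    """Constant rate implied by a cumulative probability p over time t."""
    return -math.log(1.0 - p) / t


def annualize(p_cum: float, years: float) -> float:
    """Convert a multi-year cumulative probability to an annual probability."""
    return rate_to_prob(prob_to_rate(p_cum, years), 1.0)


def apply_hazard_ratio(q_annual: float, hr: float) -> float:
    """Scale a life-table death probability by a hazard ratio (assumed rate-scale method)."""
    return rate_to_prob(hr * prob_to_rate(q_annual))


# Hypothetical inputs, for illustration only.
print(annualize(0.20, 5))             # 5-year cumulative incidence of 20%
print(apply_hazard_ratio(0.01, 2.0))  # life-table q of 1% with hazard ratio 2
```

Converting through the rate scale keeps probabilities bounded by 1, which simple division (20% / 5 years) or multiplication (q × HR) does not guarantee for large inputs.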

Costs: Costs (2019 USD) included direct medical costs (screening program operations, personnel, examinations, treatments), direct non-medical costs (transportation, food), and indirect costs (income loss for patients and caregivers; patients aged ≥60 years were assumed to have no income loss, but caregiver income loss was still counted). AI deployment costs covered model development and operation plus platform maintenance ($0.214 per participant). The screening cost per participant was estimated at $10.65. Examination, treatment (laser and anti-VEGF, as indicated), and blindness costs were included, with blindness costs comprising an initial cost and yearly follow-up indirect costs. Costs were discounted at 3% per year. Rural participants had higher transportation costs and lower indirect costs; urban participants had higher indirect costs. Currency was converted at $1 = ¥6.8968.
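A small sketch of the cost arithmetic: converting yuan to 2019 USD at the stated rate and discounting an annual cost stream at 3%. It assumes, hypothetically, that the $10.65 screening cost recurs every year for all 30 cycles with no attrition from death or blindness, and that the first cycle is undiscounted.

```python
from typing import Sequence

CNY_PER_USD = 6.8968  # conversion rate stated in the study


def cny_to_usd(amount_cny: float) -> float:
    return amount_cny / CNY_PER_USD


def present_value(costs_by_cycle: Sequence[float], rate: float = 0.03) -> float:
    """Discounted sum of annual costs; cycle 0 is undiscounted (an assumption)."""
    return sum(c / (1.0 + rate) ** t for t, c in enumerate(costs_by_cycle))


annual = [10.65] * 30  # hypothetical: same screening cost in every cycle
print(round(present_value(annual), 2))
print(round(cny_to_usd(100.0), 2))  # ¥100 expressed in USD
```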

Effectiveness: QALYs were computed using utility values for non-referable DR, referable DR, treated referable DR, and blindness from Chinese studies, discounted at 3.5% per year per NICE recommendations. The utility for non-referable DR was a weighted average of the R0 and R1 utilities, using an R0:R1 ratio of 5:1.
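The utility weighting and QALY discounting can be sketched the same way. The R0 and R1 utilities below are placeholders, not the study's values; the first cycle is assumed undiscounted and no half-cycle correction is applied.

```python
from typing import Sequence


def nonref_utility(u_r0: float, u_r1: float, ratio_r0_r1: float = 5.0) -> float:
    """Utility of the non-referable state as a 5:1 weighted mix of R0 and R1."""
    return (ratio_r0_r1 * u_r0 + u_r1) / (ratio_r0_r1 + 1.0)


def discounted_qalys(utilities_by_cycle: Sequence[float], rate: float = 0.035) -> float:
    """Discounted QALYs for one person, one utility value per one-year cycle."""
    return sum(u / (1.0 + rate) ** t for t, u in enumerate(utilities_by_cycle))


u_nonref = nonref_utility(0.90, 0.84)  # hypothetical R0 and R1 utilities
print(round(u_nonref, 4))
print(round(discounted_qalys([u_nonref] * 30), 3))
```

Note that QALYs (3.5%) and costs (3%) use different discount rates in this study, so the two streams need separate discount factors.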

Comparators and outcomes: Each of the 1099 intervention operating points was compared to the status quo and to reference scenarios (lower-cost non-dominated scenario and no screening). ICER = incremental cost / incremental QALYs. WTP was set at 3× per-capita GDP for China ($30,828; 2019). Scenarios with ICER < WTP or with lower cost and higher effectiveness than comparators were considered cost-effective. Net monetary benefit (NMB) was calculated as (incremental QALYs × WTP) − incremental cost. The best cost-effective scenario (BCES) was defined as the cost-effective scenario with the highest effectiveness.
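The comparison logic can be illustrated with the textbook efficient-frontier procedure: remove strongly dominated scenarios, remove extendedly dominated ones, then take the most effective frontier scenario whose incremental ICER stays within WTP. This is a generic sketch with made-up costs and QALYs; the study's own comparator rules (including comparisons against the status quo) may differ in detail.

```python
from typing import List, Tuple

Scenario = Tuple[str, float, float]  # (name, total cost, total QALYs)


def icer(a: Scenario, b: Scenario) -> float:
    """Incremental cost per QALY gained of b relative to a."""
    return (b[1] - a[1]) / (b[2] - a[2])


def efficient_frontier(scenarios: List[Scenario]) -> List[Scenario]:
    # Strong dominance: in order of increasing cost, keep a scenario only if it
    # is more effective than every cheaper scenario kept so far.
    ordered = sorted(scenarios, key=lambda s: (s[1], -s[2]))
    frontier: List[Scenario] = []
    for s in ordered:
        if not frontier or s[2] > frontier[-1][2]:
            frontier.append(s)
    # Extended dominance: ICERs must rise along the frontier; drop any scenario
    # whose ICER exceeds that of the next, more effective scenario.
    i = 1
    while i < len(frontier) - 1:
        if icer(frontier[i - 1], frontier[i]) > icer(frontier[i], frontier[i + 1]):
            del frontier[i]
            i = max(i - 1, 1)  # re-check the pair that just became adjacent
        else:
            i += 1
    return frontier


def best_cost_effective(scenarios: List[Scenario], wtp: float) -> Scenario:
    """Most effective frontier scenario whose incremental ICER is within WTP."""
    frontier = efficient_frontier(scenarios)
    best = frontier[0]
    for prev, cur in zip(frontier, frontier[1:]):
        if icer(prev, cur) > wtp:
            break  # ICERs only increase from here on
        best = cur
    return best


# Hypothetical scenarios (cost in $, QALYs), for illustration only.
scenarios = [
    ("no screening", 1000.0, 10.0),
    ("A", 1500.0, 12.0),
    ("B", 1600.0, 11.5),  # strongly dominated by A
    ("D", 2200.0, 12.2),  # extendedly dominated by a mix of A and C
    ("C", 2500.0, 13.0),
    ("E", 6000.0, 13.5),
]
print([s[0] for s in efficient_frontier(scenarios)])
print(best_cost_effective(scenarios, wtp=2000.0)[0])
```

Because the frontier's ICERs increase monotonically, the scenario selected this way also has the highest net monetary benefit relative to the cheapest option at that WTP.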

Sensitivity and subgroup analyses: One-way sensitivity analyses varied prevalence, utilities, compliance, and transition probabilities by ±10% and costs by ±20%. Probabilistic sensitivity analysis used 10,000 Monte Carlo simulations (beta distributions for probabilities/utilities, gamma for costs). Subgroup analyses evaluated rural vs urban (setting-specific prevalence, costs, compliance, and WTP: $25,751 rural; $37,259 urban) and seven age groups (20–29 to 80–89 years), using setting- or age-specific prevalence. Alternative prevalence scenarios (4%, 8%, 12%) and WTP values ($0–$30,828) were also modeled.
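For the probabilistic analysis, beta and gamma parameters are commonly fitted by the method of moments from a mean and a standard deviation. The sketch below does this with Python's standard `random` module for one probability and one cost; the standard deviations are hypothetical because they are not reported in this summary.

```python
import random
import statistics
from typing import Tuple


def beta_params(mean: float, sd: float) -> Tuple[float, float]:
    """Method-of-moments (alpha, beta) for a probability or utility."""
    common = mean * (1.0 - mean) / sd ** 2 - 1.0
    if common <= 0:
        raise ValueError("sd too large for a beta distribution with this mean")
    return mean * common, (1.0 - mean) * common


def gamma_params(mean: float, sd: float) -> Tuple[float, float]:
    """Method-of-moments (shape, scale) for a non-negative cost."""
    return (mean / sd) ** 2, sd ** 2 / mean


rng = random.Random(2019)
n_sims = 10_000

# Hypothetical SDs: referral compliance 0.50 (SD 0.05); screening cost $10.65 (SD $2.13).
a, b = beta_params(0.50, 0.05)
k, theta = gamma_params(10.65, 2.13)
compliance = [rng.betavariate(a, b) for _ in range(n_sims)]
cost = [rng.gammavariate(k, theta) for _ in range(n_sims)]  # theta is the scale
print(round(statistics.mean(compliance), 3), round(statistics.mean(cost), 2))
```

In a full analysis, each of the 10,000 draws would feed one run of the model, and the share of runs in which a scenario has the highest NMB gives its probability of being the preferred choice.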

Status quo performance (most accurate): sensitivity 93.3%, specificity 87.7% (AUC 0.933). Over 30 years of annual AI screening for 251,535 people, the total cost was ≈ $1,563 million, with 9.1689 QALYs per person.

Cost-effectiveness landscape: After excluding dominated and extendedly dominated scenarios and those above the WTP threshold, 14 scenarios remained cost-saving or cost-effective versus the status quo (6 cost-saving, 7 cost-effective, plus the status quo itself). The lowest performance observed among these scenarios, and hence the minimum for being cost-saving or cost-effective, was 88.2% sensitivity and 80.4% specificity. The most cost-saving scenario (sensitivity/specificity 88.2%/90.3%) saved $5.54 million but lost 1,490 QALYs compared with the status quo. The best cost-effective scenario (BCES; sensitivity/specificity 96.3%/80.4%) delivered the highest effectiveness among the cost-effective options, gaining 839 QALYs at an additional $14.834 million (ICER below WTP; positive NMB). Small increases in sensitivity improved cost-effectiveness even when specificity fell substantially.

Prevalence and WTP effects: As referable DR prevalence rose from 4% to 12%, the BCES required higher sensitivity (paired with lower specificity) than the status quo. Relative to the status quo, the BCES added ≈ $11–16 million in costs and yielded an additional 662–974 QALYs for the 251,535-person cohort. Across WTP levels, higher WTP favored higher sensitivity; for WTP > $5,000, sensitivity should exceed specificity for optimal cost-effectiveness. Probabilistic analysis showed that at WTP = 3× GDP per capita ($30,828), the BCES (96.3%/80.4%) had a 55.43% probability of being the most cost-effective choice; at lower WTP, the BCES shifted toward lower sensitivity.

Subgroups: Urban settings (higher prevalence, WTP, and indirect costs) favored higher sensitivity, whereas rural settings favored higher specificity. The BCES required 94.7% sensitivity (85.6% specificity) in rural and 96.9% sensitivity (77.5% specificity) in urban populations. Relative to the status quo, the BCES yielded roughly 3.5 times as large a QALY gain in urban settings as in rural settings. Younger age groups required higher sensitivity for optimal cost-effectiveness.
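As a check on the reported BCES figures, the ICER and NMB can be computed directly from its increments versus the status quo. This is arithmetic on the reported numbers only; the study's ICER may instead be incremental against the preceding non-dominated scenario, so it need not match exactly.

```python
def icer_from_deltas(delta_cost: float, delta_qaly: float) -> float:
    """Incremental cost per QALY gained."""
    return delta_cost / delta_qaly


def net_monetary_benefit(delta_cost: float, delta_qaly: float, wtp: float) -> float:
    """NMB = (incremental QALYs x WTP) - incremental cost."""
    return delta_qaly * wtp - delta_cost


WTP = 30_828             # 3x China's 2019 per-capita GDP, USD per QALY
delta_cost = 14_834_000  # BCES vs status quo, USD
delta_qaly = 839         # BCES vs status quo, QALYs

print(round(icer_from_deltas(delta_cost, delta_qaly)))
print(net_monetary_benefit(delta_cost, delta_qaly, WTP))
```

With these figures the ICER is roughly $17,700 per QALY, a little over half the $30,828 threshold, and the NMB is about $11.0 million.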

The findings show that the most accurate AI operating point is not necessarily the most cost-effective in long-term DR screening. Prioritizing sensitivity generally improves cost-effectiveness because missed referable DR increases the risk of blindness and its substantial societal costs, particularly in low- and middle-income country (LMIC) contexts. However, when prevalence or WTP is lower, raising specificity reduces unnecessary referrals and the associated strain on resources. The optimal balance depends on epidemiology and economic capacity: higher prevalence and WTP favor higher sensitivity even at the cost of more false positives. The study's minimum performance thresholds for cost-effectiveness in China (≥88.2% sensitivity and ≥80.4% specificity) are close to the performance of the FDA-authorized device (85.0% sensitivity, 82.5% specificity) and differ from settings that emphasize very high specificity, likely reflecting lower examination and treatment costs in China. Countries with similar economic frameworks may be able to adopt comparable strategies, although local validation is warranted. Whereas prior Chinese evaluations emphasized high specificity, this analysis across 1100 operating points consistently found sensitivity-prioritized models to be the most cost-effective, including in urban and younger populations. Real-world considerations, such as classifying ungradable images as positive (which lowers specificity but aligns with practice), are important when interpreting AI performance and cost-effectiveness.

In nationwide DR screening, the most accurate AI model is not the most cost-effective. Cost-effectiveness therefore needs to be evaluated separately from accuracy, and it is influenced most by sensitivity. In China's context, AI should achieve at least ~88% sensitivity and ~80% specificity to be cost-saving or cost-effective, with higher sensitivity required in settings with higher DR prevalence and WTP. Policymakers should select AI operating points tailored to local epidemiology, resources, and societal values, prioritizing sensitivity where the blindness burden and WTP are higher. Future work will scale and validate these findings across regions and populations to refine AI selection strategies.

Only one validated AI system was used to generate operating points, though 1100 sensitivity/specificity pairs were simulated; generalizability to other AI systems, countries, and populations may be limited by China-specific costs and assumptions. The analysis did not model other referable conditions such as diabetic macular edema or impaired visual acuity due to data unavailability, simplifying DR natural history. Transition probabilities were derived from literature rather than observed longitudinal data from the program. Reclassification of ungradable images as positive lowered observed specificity but reflects real-world practice.
