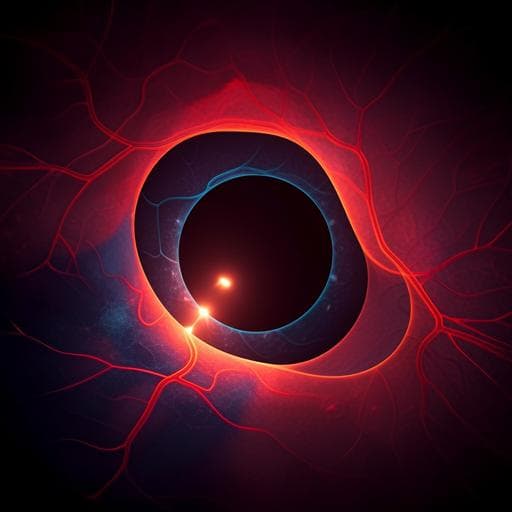

Automated detection of type 1 ROP, type 2 ROP and A-ROP based on deep learning

E. K. Yenice, C. Kara, et al.

In this study, Eşay Kıran Yenice, Caner Kara, and Çağatay Berke Erdaş apply deep learning to detect type 1 retinopathy of prematurity (ROP), type 2 ROP, and aggressive ROP (A-ROP). The researchers train a RegNetY002 convolutional neural network on a large dataset and report high detection accuracy. They suggest that this kind of AI-assisted detection can make ophthalmologists more efficient and improve patient outcomes in this critical area of neonatal care.
