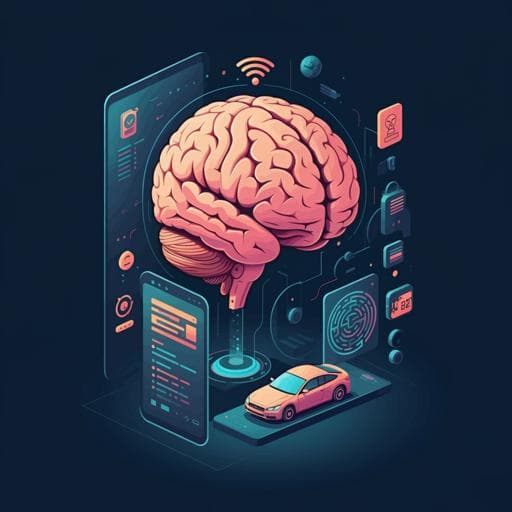

Wearable EEG electronics for a Brain–AI Closed-Loop System to enhance autonomous machine decision-making

J. H. Shin, J. Kwon, et al.

Recent bio-integrated wearable and implantable electronics can track physiological, electrophysiological, and chemical signals, enabling human–machine interfaces (HMIs) integrated with IoT and AI. Brain–machine interfaces (BMIs) using EEG, local field potentials (LFPs), and other biosignals allow interaction between brains and machines, but current approaches often require uncomfortable, conspicuous setups and simplified paradigms (e.g., flickering displays). A promising direction is bidirectional interaction in which AI understands human emotions or cognitive states and adapts its decisions accordingly. The error-related potential (ErrP), which includes the ERN, N200/FRN, and P300/Pe components, arises after unexpected or erroneous events and can serve as implicit human feedback that accelerates reinforcement of AI decision-making without conscious user effort. However, existing systems have several limitations: difficulty with long-term measurement; bulky multi-electrode helmets or headsets that cause discomfort and noise; lack of wireless support and connector-induced noise; reliance on constrained monitor-based experiments rather than autonomous machines; and predominantly theoretical closed-loop demonstrations. A practical BMI requires integrated electrodes, connectors, wireless systems, optimized geometries, fixation methods, and closed-loop configurations.

To address this, the authors introduce a wireless, neuroadaptable Brain–AI Closed-Loop System (BACLoS) based on an earbud-like wireless EEG device (e-EEG) with tattoo-like electrodes and connectors. BACLoS revises and reinforces AI decisions using ErrP signals elicited by undesired AI behavior. The system features dry, electrolyte-free tattoo electrodes for long-term, high-quality EEG; a lightweight single-channel e-EEG earbud that conforms to the head for comfort; and a closed-loop framework in which deep learning detects ErrP from wirelessly streamed EEG to trigger emergency interruption or reinforcement learning in AI-controlled machines.

Tattoo-like electrode and interconnector fabrication: Temporary tattoo paper was used as the base. A 300 nm polymer layer was deposited by high-vacuum CVD. A 5 nm Cr adhesion layer and 100 nm Au were thermally evaporated through a serpentine open-mesh shadow mask. Tattoo paper was cut to desired shapes. Transferred electrode thickness on skin was ~900 nm. Tattoo-like connectors were fabricated using thermal evaporation and reactive ion etching with a PI (1.2 μm)/Au (100 nm)/PI (1.2 μm) sandwich to place metal at the neutral mechanical plane; total on-skin thickness was 2.8 μm. An anisotropic conductive film (ACF) cable bonded the tattoo-like connector to a flexible printed circuit (FPC) connector (assembly at >150 °C and 30–40 kg·cm−2). The conductive area of the ACF cable was 30 μm thick and 250 μm wide. FPC connector thickness varied 80–300 μm. The interconnect achieved a step-thickness gradient from ultra-thin electrodes to thicker rigid connectors for stability and reduced motion artifacts.

Wireless EEG earbud preparation (e-EEG/e-EEGd): A four-layer PCB hosted an electrophysiology amplifier (RHD2216, INTAN), an 8-bit microcontroller (Atmega328P-MNR), a BLE module (BOK-NL1E52), an FPC connector (MESA-115105-0C), a linear regulator (TLV70330DQCR), decoupling capacitors, and passives. A 3D-printed two-part case (polyethylene resin) conformed to the PCB and battery. Firmware was developed with Atmel Studio and the Arduino IDE. Sampling rate: 102 Hz; ADC resolution: 16 bit; differential gain: 20. The device streamed EEG wirelessly and continuously for up to 8 h per charge. The complete e-EEGd was lightweight (reported as 8.24 g in the device overview) and designed to fit in the ear, with electrodes positioned at the forehead (reference), temple (ground), and mastoid (recording), or as described for specific experiments.

Autonomous RC car fabrication: Components mounted to an acrylic frame using breadboards and jumpers. Control included Arduino Nano 33 BLE (AI-embedded), Arduino Uno R3, motor driver (L9110S), BLE module, microSD socket and card, microphone, reflective photointerrupters, sensors, batteries. The frame was 3D-printed (nylon 12). The car followed a black line via photointerrupters, stopped at a stop line, and communicated timing cues to synchronize with EEG recordings. A face/voice recognition “black box” was included for user detection and steering commands.

Skin–electrode impedance measurement: Two tattoo-like electrodes (10 cm apart) were used (one working/cathode, one reference/anode). Impedance measured by single-channel potentiostat (Palmsens) from 1–1000 Hz every 30 min for 10 h.

Continuous EEG while moving: Two volunteers wore e-EEG with electrodes at forehead (working), temple (ground), and mastoid (reference). The earbud was connected to Arduino Nano BLE and a computer for data logging. Motions included sitting, standing, walking, cycling, scooter riding, and driving. Eye-open/closed conditions were also tested. A comparison setup used commercial electrodes (Zant M), a commercial device (RZ467, MicroElectronik), and an INTAN RHD2216 Arduino shield as c-EEG. Signal processing used MATLAB/BrainStorm with FIR bandpass (8–12 Hz) and Morlet wavelets (5–15 Hz) to analyze alpha rhythms; RMS and FFT characterized noise.
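The alpha-band filtering and RMS noise measure described above can be sketched in a few lines. The study used MATLAB/BrainStorm; the Python version below is only an illustration, and the Hamming-windowed sinc design, the 101-tap length, the synthetic test signals, and all function names are assumptions rather than details from the source.

```python
import math

def rms(samples):
    """Root-mean-square amplitude of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))

def bandpass_taps(low_hz, high_hz, fs_hz, num_taps=101):
    """Hamming-windowed sinc band-pass taps (difference of two ideal low-pass filters)."""
    mid = (num_taps - 1) / 2

    def ideal_lowpass(cutoff_hz, k):
        x = 2.0 * cutoff_hz / fs_hz  # cutoff normalized to the Nyquist frequency
        return x if k == 0 else math.sin(math.pi * x * k) / (math.pi * k)

    taps = []
    for n in range(num_taps):
        k = n - mid
        hamming = 0.54 - 0.46 * math.cos(2 * math.pi * n / (num_taps - 1))
        taps.append((ideal_lowpass(high_hz, k) - ideal_lowpass(low_hz, k)) * hamming)
    return taps

def fir_filter(signal, taps):
    """Causal FIR filtering; the output has the same length as the input."""
    out = []
    for i in range(len(signal)):
        acc = 0.0
        for j in range(min(i + 1, len(taps))):
            acc += taps[j] * signal[i - j]
        out.append(acc)
    return out

# Synthetic check at the device's 102 Hz sampling rate (signals are made up):
fs = 102
t = [n / fs for n in range(4 * fs)]
alpha = [math.sin(2 * math.pi * 10 * x) for x in t]  # 10 Hz, inside 8-12 Hz
drift = [math.sin(2 * math.pi * 1 * x) for x in t]   # 1 Hz, motion-like drift
taps = bandpass_taps(8, 12, fs)
alpha_out = fir_filter(alpha, taps)[len(taps):]      # drop the start-up transient
drift_out = fir_filter(drift, taps)[len(taps):]
```

With these settings the 10 Hz component passes nearly unchanged while the 1 Hz drift is strongly attenuated, which is the behavior a band-pass stage needs before alpha-rhythm or RMS comparisons.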

ERP acquisition and analysis: ERP data were recorded for 1 s following each stimulus. Preprocessing used bandpass filters (1–30 Hz and 1–8 Hz). P300 peak amplitudes and SNRs were computed (peak amplitude divided by baseline RMS). FFT assessed dominant P300 frequency content.
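As a minimal sketch of the SNR definition above (peak amplitude divided by baseline RMS), the following function assumes a separate baseline segment and a 250–500 ms peak search window. Both choices, and the name `p300_snr`, are illustrative assumptions; the source does not state how the baseline or search window was defined.

```python
import math

def p300_snr(epoch, baseline, fs_hz=102, search_ms=(250, 500)):
    """Return (peak amplitude, SNR) for one ERP epoch.

    epoch     -- samples recorded after the stimulus (1 s in the study)
    baseline  -- samples taken as the noise reference (assumed segment)
    search_ms -- assumed window in which the P300 peak is searched
    """
    start = int(search_ms[0] * fs_hz / 1000)
    stop = int(search_ms[1] * fs_hz / 1000)
    peak = max(epoch[start:stop])
    baseline_rms = math.sqrt(sum(x * x for x in baseline) / len(baseline))
    return peak, peak / baseline_rms
```

For an averaged ERP, the same function can be applied to the trial-averaged epoch instead of a single trial.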

Auditory oddball P300 (tests 1–3): e-EEG and c-EEG used simultaneously with commercial electrodes placed adjacent to tattoo electrodes. Stimulus duration 1.0 s; inter-stimulus interval 9.0 s; target probability 10%. Subjects counted target stimuli. Test 1: high-pitched tone (250 Hz) as target vs low-pitched tone as non-target. Test 2: human voice “no” as target vs “yes” as non-target. Test 3: “no” as target vs silence as non-target. Wireless stimulus delivery and EEG logging used Arduino UNO R3, BLE, MP3 module, speaker, with continuous SD logging and memory management.

ERP with autonomous RC car: The RC car followed a track and was programmed to violate stop-line behavior with set probabilities (2%, 5%, 10%, 20%, 50%). At stop-line detection, the car sent a synchronization cue; EEG recorded 1 s from the cue and returned data to the car for labeling. ErrP was evaluated following expected (stop) vs unexpected (violate) behaviors.

ERP with assistive interface: An assistant interface responded to a phone-call request with a 10% malfunction probability. On request, a cue was sent to e-EEG, which recorded 1 s and streamed data back in real time for classification.

Machine learning classification: From RC-car ERP experiments, 295 cases were used; the class imbalance (more non-targets) was balanced for training. Classifiers: logistic regression (LBFGS, L2 penalty), LDA (SVD fit), k-NN (k=5, Minkowski distance), random forest (128 trees, Gini), and SVM (libsvm, C=1, linear kernel; a polynomial degree of three was also specified, although the degree parameter has no effect on a linear kernel). Input dimensionality (window size and start) was optimized with repeated training and 5-fold CV; performance was measured by accuracy and AUC.
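The class balancing and 5-fold cross-validation mentioned above could be set up as follows. This is a sketch under stated assumptions: the source does not say how the classes were balanced, so random undersampling of the majority class is assumed here, and the function names and seeds are hypothetical.

```python
import random

def balance_by_undersampling(labels, seed=0):
    """Return sorted indices keeping an equal number of samples per class."""
    rng = random.Random(seed)
    by_class = {}
    for idx, y in enumerate(labels):
        by_class.setdefault(y, []).append(idx)
    n_min = min(len(idxs) for idxs in by_class.values())
    kept = []
    for idxs in by_class.values():
        kept.extend(rng.sample(idxs, n_min))  # assumed: drop surplus majority trials
    return sorted(kept)

def k_fold_indices(n_items, k=5, seed=0):
    """Yield (train, test) index lists for k shuffled folds."""
    rng = random.Random(seed)
    order = list(range(n_items))
    rng.shuffle(order)
    folds = [order[i::k] for i in range(k)]
    for i in range(k):
        test = folds[i]
        train = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train, test
```

Each classifier would then be trained on `train` and scored on `test` for every fold, with accuracy and AUC averaged across the five folds.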

Deep learning classification: DNN and LSTM models (Keras/TensorFlow) were trained on single-channel EEG preprocessed by FIR bandpass (1–8 Hz) and segmented into 0.3 s input windows. DNN: two dense layers (8 units, ReLU) plus sigmoid output. LSTM: one LSTM layer (30 input units) plus sigmoid output. Five-fold CV assessed accuracy; batch size was tuned via grid search. The best reported performance used an LSTM with 30 hidden neurons and input windows aligned to 0.5–0.335 s post-stimulus.

Real-time deep learning classification deployment: Pretrained models were converted to TensorFlow Lite (no quantization), encoded as Arduino headers, and deployed on Arduino Nano 33 BLE. Real-time pipeline: receive EEG from e-EEG, FIR bandpass 1–8 Hz, parse 0.3 s windows, infer with DNN/LSTM, and output binary presence/absence of ErrP.
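The window-parsing and decision steps of this pipeline can be illustrated in Python, although the deployed version ran as TensorFlow Lite on an Arduino. In the sketch below, the epoch is assumed to be already band-pass filtered, `classify` stands in for the trained DNN/LSTM, and the 0.5 decision threshold and all names are assumptions, not details from the source.

```python
FS_HZ = 102       # sampling rate reported for the e-EEG
WINDOW_S = 0.3    # input window length reported for the models

def extract_window(epoch, start_s, fs_hz=FS_HZ, window_s=WINDOW_S):
    """Cut one fixed-length model input out of a post-cue epoch."""
    start = int(start_s * fs_hz)
    length = int(window_s * fs_hz)  # 30 samples at 102 Hz
    window = epoch[start:start + length]
    if len(window) < length:
        raise ValueError("epoch too short for the requested window")
    return window

def detect_errp(epoch, classify, start_s, threshold=0.5):
    """Return True if the classifier score indicates an ErrP (assumed threshold)."""
    score = classify(extract_window(epoch, start_s))
    return score >= threshold
```

A call such as `detect_errp(epoch, model_fn, start_s=0.1)` returns the binary presence or absence flag that the rest of the closed loop consumes; `model_fn` and the 0.1 s offset are placeholders.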

Closed-loop demonstrations (EIS and UCRS): An emergency interrupting system (EIS) stops/modifies AI actions if ErrP is detected shortly after an AI decision, and a user-customized reinforcement system (UCRS) uses ErrP presence as punishment and absence as reward to reinforce user-preferred actions. Two microcontrollers in the RC car shared cues and classification outputs via digital I/O to control immediate behavior (e.g., emergency braking, route choice at forks). Reinforcement updated internal route preferences based on user feedback.
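To make the UCRS idea concrete, the sketch below treats a detected ErrP as punishment and its absence as reward when updating route preferences at a fork. The multiplicative update, the step size, the preference floor, and the function names are assumptions for illustration; the source does not specify the update rule used in the RC car.

```python
import random

def update_preferences(prefs, route, errp_detected, step=0.2):
    """Scale the chosen route's preference down (ErrP) or up (no ErrP), then renormalize."""
    reward = -1.0 if errp_detected else 1.0
    updated = dict(prefs)
    # Assumed floor keeps every route selectable with small probability.
    updated[route] = max(0.01, updated[route] * (1.0 + step * reward))
    total = sum(updated.values())
    return {r: p / total for r, p in updated.items()}

def choose_route(prefs, rng=random):
    """Sample a route with probability proportional to its preference."""
    routes = list(prefs)
    return rng.choices(routes, weights=[prefs[r] for r in routes])[0]

def simulate(preferred, trials=50, seed=1):
    """Simulated user: an ErrP occurs whenever the car picks a non-preferred route."""
    rng = random.Random(seed)
    prefs = {"left": 0.5, "right": 0.5}
    for _ in range(trials):
        route = choose_route(prefs, rng)
        prefs = update_preferences(prefs, route, errp_detected=(route != preferred))
    return prefs
```

Starting from equal preferences, repeated feedback pushes the selection probability toward the route that does not elicit an ErrP, mirroring the shift from roughly even choices to user-preferred routes.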

Maze solver demonstration: A maze interface (Processing) interfaced with Arduino Nano 33 BLE. Initially using the right-hand rule, the solver sent cues when choosing directions; detected ErrP signaled inefficient prior action, prompting reversal and reinforcement toward the shortest path after repeated trials.

Assistive interface demonstration: A deep-learning-enabled interface (Arduino Nano 33 BLE, auxiliary Arduino, BLE modules) generated audiovisual prompts, received real-time EEG, classified ErrP, and reinforced toward correct responses over repeated interactions.

Ethics: All human measurements were approved by the IRB of Sungkyunkwan University (ISKU 2019-06-011), with written informed consent.

- Motion artifact reduction with tattoo-like electrodes/connectors: During sitting, RMS EEG was 11.6 µV (e-EEG) vs 11.8 µV (c-EEG). During walking, RMS was 18.57 µV (e-EEG) vs 45.49 µV (c-EEG). Across four real-life activities (walking, biking, scooter, driving), average RMS was 23.10 µV (e-EEG) vs 124.23 µV (c-EEG).

- Frequency-domain analysis during walking showed that replacing long dangling connectors with tattoo-like connectors reduced 0–2 Hz indirect motion noise; using both tattoo-like connectors and electrodes significantly reduced 2–4 Hz direct motion artifacts.

- Alpha rhythms were measurable with e-EEG under noisy walking conditions. Eye-blink artifacts were identifiable in both systems but had shapes distinct from those of P300/ErrP.

- P300 measurements (n=10 subjects; N=360 recordings) in auditory oddball tasks showed P300 peaks after unexpected stimuli at average latencies of 392 ms (test 1), 414 ms (test 2), and 373 ms (test 3). Dominant P300 frequency content overlapped with <48 Hz bands, emphasizing susceptibility to motion artifacts; e-EEG maintained measurement robustness under motion where c-EEG struggled.

- ErrP with autonomous RC car: Violation probabilities (2–50%) modulated P300/Pe amplitude; rarer violations elicited larger peaks. ErrP components included an early Err peak (50–100 ms), N200/FRN (~200 ms), and P300/Pe (~300 ms). When observing a moving RC car, ErrP patterns were difficult to observe with e-EEG due to driving-induced noise (~50 ms), whereas c-EEG reportedly showed clearer patterns under that specific condition.

- Deep learning classification: The LSTM achieved the highest single-trial classification accuracy, 83.81% with an AUC of 0.849, using a 0.5–0.335 s window and 30 hidden units. DNN and traditional ML (LR, LDA, RF, SVM) underperformed relative to LSTM. Classification accuracy varied with the probability context: when the chance of unexpected behavior rose to 29%, accuracy reached 100% (LSTM) and 92% (DNN); with 50% stopping probability, accuracy was 81% (LSTM) and 77% (DNN).

- Real-time EIS: Average reaction time using ErrP feedback was 0.35 s, which is 0.13 s faster than the physical reaction time; at 110 km/h, this corresponds to a braking distance about 4 m shorter. Overall response time from ErrP feedback was reported as 1.8 times shorter than the hand-triggered response; the ErrP response distribution peaked at ~350 ms with less variability than physical responses.

- UCRS reinforcement: In a three-fork track, initial route choices were ~50:50; after reinforcement from user ErrP feedback, the RC car selected user-preferred routes with high probability. DNN classification accuracy improved with trial averaging: 92.9 ± 3.5% (3 overlaps) and 98.57 ± 1.7% (5 overlaps).

- Maze solver: Providing ErrP feedback to a right-hand-rule solver reduced completion time from 36.47 s to 14.12 s; after learning the shortest path, completion time was 13.37 s.
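Two of the figures above follow from simple arithmetic, which the short check below reproduces from the reported numbers. The function names are hypothetical, and the distance calculation assumes constant speed during the reaction interval.

```python
def distance_saved_m(speed_kmh, time_saved_s):
    """Distance covered at constant speed during the reaction time saved."""
    return speed_kmh / 3.6 * time_saved_s  # km/h -> m/s, then times seconds

def relative_reduction(before, after):
    """Fractional reduction from `before` to `after`."""
    return (before - after) / before

eis_distance = distance_saved_m(110, 0.13)       # EIS: 0.13 s saved at 110 km/h
maze_reduction = relative_reduction(36.47, 14.12)  # maze: 36.47 s -> 14.12 s
```

Here `eis_distance` comes to about 3.97 m, consistent with the ~4 m figure, and `maze_reduction` is about 0.61, meaning ErrP feedback cut maze completion time by roughly 61%.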

The study demonstrates an integrated Brain–AI Closed-Loop System that combines ultra-conformal tattoo-like electrodes/connectors with a wireless earbud EEG to obtain higher-fidelity signals in daily-life motion conditions. By leveraging deep learning to detect ErrP in single-channel dry-electrode recordings, the system can inform AI agents in real time to interrupt unsafe actions or reinforce user-preferred behaviors. Quantitative reductions in motion artifacts and successful P300/ErrP detection support the feasibility of implicit, low-effort human-in-the-loop feedback for autonomous machines. The EIS results indicate faster reaction times than manual control, and the UCRS and maze-solver experiments show rapid adaptation to user preferences and efficient pathfinding. The platform suggests a practical route toward real-world BMI/HMI applications, where continuous biosignal collection can inform personalization and safety in autonomous systems. Future enhancements may include improved soft conductive materials or hydrogels for electrodes, higher-resolution low-noise amplifiers, and large-scale trials with real vehicles and complex environments to validate generalizability and robustness.

This work introduces BACLoS, a practical closed-loop human–AI platform using a lightweight earbud EEG and tattoo-like electrodes/connectors to capture ErrP signals and guide autonomous machine decision-making. The system reduces motion artifacts, enables reliable P300/ErrP detection in realistic settings, and supports deep-learning-based real-time classification to implement emergency interruption and user-driven reinforcement. Demonstrations with an RC car, assistive interface, and maze solver validate faster responses, improved decision accuracy, and reduced task time. Future research should focus on enhanced electrode materials, higher-performance amplifiers, expanded multi-channel configurations, and deployment in complex real-world scenarios (e.g., road tests with self-driving cars) to further improve accuracy, robustness, and generalizability.

- Single-channel, dry-electrode setup limits spatial information; performance may benefit from multi-channel recordings.

- While motion artifacts are significantly reduced, certain dynamic conditions (e.g., observing a moving RC car while driving) still posed challenges for ErrP detection with the e-EEG, as noted in specific tests.

- Classification accuracy for single-trial ErrP detection (~84% best case) leaves room for improvement; averaging across trials improves accuracy but increases latency.

- Small sample sizes in some experiments (e.g., two volunteers for motion tests; ten for P300) limit statistical power and generalizability.

- Device sampling rate (102 Hz) and 16-bit resolution, while adequate for ERPs, could constrain some analyses; authors suggest higher resolution/lower-noise amplifiers.

- Closed-loop demonstrations were conducted on RC platforms and simulated interfaces; translation to full-scale autonomous vehicles and broader assistive contexts requires further validation.
