The role of bot squads in the political propaganda on Twitter

G. Caldarelli, R. De Nicola, et al.

This research examines the role of social bots in political propaganda on Twitter, focusing on the debate about migration from North Africa to Italy. The study was conducted by a team including Guido Caldarelli and Rocco De Nicola. It shows that bots significantly amplify mainstream narratives. It also shows that coordinated groups of bots concentrate their activity on a small set of influential political accounts.

Introduction

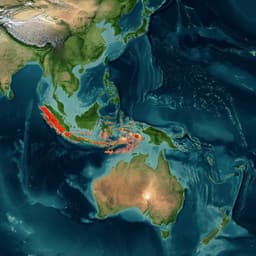

The proliferation of social media has transformed how news spreads, how information is exchanged, and how facts are checked. However, the rise of automated accounts, known as social bots, poses a significant challenge to the integrity of online discourse. Bots can be programmed to post content automatically, amplify messages, and even manipulate public opinion. They can serve beneficial purposes (e.g., providing emergency assistance), but their potential for malicious use, particularly in political contexts, is a growing concern. Recent studies have linked social bots to the manipulation of online discussions during major political events, including the 2016 US presidential election and the UK Brexit referendum. These incidents underscore the urgency of understanding how bots operate and how they influence information diffusion.

This research focuses on Twitter, a prominent microblogging platform, to analyze the role of bots in political propaganda related to the migration flux from North Africa to Italy. The study period (January 23rd to February 22nd, 2019) was chosen because of the intense political debate surrounding the landing of migrant rescue ships and the actions of the Italian government. The main objective is to quantify the impact of social bots on the dissemination of politically charged content and to identify novel patterns of bot behavior.

Literature Review

Existing literature offers various methods for detecting social bots, which typically rely on account profiles, network structure, or posting characteristics. Supervised learning techniques that use features extracted from account profiles can identify bots with high accuracy. Network-based methods look for unusual patterns of interaction between accounts. Approaches based on posting characteristics analyze the content and timing of tweets to distinguish bots from human users. However, detection-focused studies often overlook two questions: how much bots actually contribute to the spread of information, and how much of the observed activity is simply random noise. Entropy-based null models, which are general and unbiased, offer a principled way to filter out this noise. They have been applied successfully in several domains, including the analysis of financial and social networks. Previous studies have used this approach to examine Twitter traffic during political campaigns, revealing users' political affiliations and the patterns of communication between political groups. This study combines a lightweight bot detection classifier with an entropy-based null-model analysis to investigate the impact of social bots on information dissemination on Twitter.

Methodology

The research employs a multi-stage methodology combining bot detection, network analysis, and entropy-based null models.

1. **Data Collection:** Tweets related to the migration issue were collected using the Twitter public Filter API, based on keywords (see Table 2 in the paper). The dataset comprises 1,082,029 tweets posted by 127,275 unique accounts over one month.

2. **Bot Detection:** A slightly modified version of a supervised classification model from Cresci et al. (2015) was used to classify accounts as either human-operated or bots (see Table 3 for features). This lightweight classifier uses readily available account profile features to achieve high accuracy.

3. **Network Construction:** Two types of networks were constructed: (a) a bipartite network of retweet interactions between verified and unverified accounts, and (b) a directed bipartite network linking accounts to tweets, whose edges encode authorship and retweets.

4. **Entropy-Based Null Models:** The Bipartite Configuration Model (BiCM) and its directed variant (BiDCM) were used as null models for the bipartite networks to isolate significant interaction patterns. These models control for the degree sequence of the nodes, thus removing the effects of user activity and tweet virality.

5. **Network Projection:** The validated projection method of Saracco et al. (2017) was used to project each bipartite network onto a monopartite network of accounts. Two accounts are linked only if the number of neighbors they have in common is statistically significant under the null model. Connections that can be explained by the accounts' activity levels alone are discarded.

6. **Community Detection:** The Louvain algorithm was used to detect communities in the projected networks, revealing the degree of user polarization and identifying politically homogeneous groups.

7. **Hub Identification:** The Hubs-Authorities algorithm (HITS) was used to identify influential accounts ('hubs') within the validated retweet network. The analysis concentrates on hubs because of their impact on the discussion.

8. **Polarization Index:** The polarization index of Bessi et al. (2016) was used to determine the political orientation of unverified accounts from their interactions with verified accounts. A label propagation algorithm then extended this assessment to unverified users who did not interact directly with verified accounts.
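Step 4 uses the Bipartite Configuration Model (BiCM) as a null model. The sketch below shows how such a model can be fitted. It assumes a small 0/1 biadjacency matrix given as nested Python lists, and it uses a plain fixed-point iteration. The function name `fit_bicm` and the solver choice are illustrative assumptions, not the authors' code. The BiCM assigns each pair (row node *i*, column node *a*) the link probability x_i·y_a / (1 + x_i·y_a), with the parameters chosen so that the expected degrees equal the observed ones.

```python
def fit_bicm(biadjacency, tol=1e-10, max_iter=10_000):
    """Fit a Bipartite Configuration Model to a 0/1 biadjacency matrix.

    Returns p[i][a] = x_i * y_a / (1 + x_i * y_a), whose expected row and
    column sums match the observed degrees. Assumes no row or column is
    completely full (a full row/column has no finite solution).
    """
    n_rows, n_cols = len(biadjacency), len(biadjacency[0])
    k = [sum(row) for row in biadjacency]                                   # row-node degrees
    h = [sum(biadjacency[i][a] for i in range(n_rows)) for a in range(n_cols)]  # column-node degrees
    scale = sum(k) ** 0.5 or 1.0
    x = [ki / scale for ki in k]
    y = [ha / scale for ha in h]
    for _ in range(max_iter):
        # Fixed-point update: x_i = k_i / sum_a y_a / (1 + x_i y_a), then the same for y.
        new_x = [k[i] / sum(y[a] / (1 + x[i] * y[a]) for a in range(n_cols))
                 for i in range(n_rows)]
        new_y = [h[a] / sum(new_x[i] / (1 + new_x[i] * y[a]) for i in range(n_rows))
                 for a in range(n_cols)]
        change = max(abs(u - v) for u, v in zip(x + y, new_x + new_y))
        x, y = new_x, new_y
        if change < tol:
            break
    return [[x[i] * y[a] / (1 + x[i] * y[a]) for a in range(n_cols)]
            for i in range(n_rows)]


if __name__ == "__main__":
    toy = [[1, 1, 0, 0], [1, 0, 1, 0], [0, 1, 1, 1], [1, 0, 0, 1]]
    for row in fit_bicm(toy):
        print([round(q, 3) for q in row])
```

Because the probabilities reproduce only the degree sequence, any structure beyond what user activity and tweet popularity explain stands out against this baseline. The directed variant (BiDCM) mentioned in the text is not covered by this sketch.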
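Step 5 relies on the validated projection of Saracco et al. (2017). The following is a minimal sketch of its statistical test, assuming the BiCM link probabilities `p` are already available from a fitted model. Under the BiCM, links are independent. The number of neighbors shared by two row nodes *i* and *j* is therefore a sum of independent Bernoulli variables with probabilities p_iα·p_jα, which follows a Poisson-binomial distribution. The summary does not state which multiple-testing correction is used. Benjamini-Hochberg false-discovery-rate control is applied here as an assumption. All function names are hypothetical.

```python
from itertools import combinations


def poisson_binomial_sf(probs, observed):
    """P(V >= observed) for V = sum of independent Bernoulli(q) over q in probs."""
    dist = [1.0]  # dist[v] = P(V = v) over the prefix of probs processed so far
    for q in probs:
        new = [0.0] * (len(dist) + 1)
        for v, pv in enumerate(dist):
            new[v] += pv * (1 - q)
            new[v + 1] += pv * q
        dist = new
    return sum(dist[observed:])


def validated_projection(biadjacency, p, alpha=0.05):
    """Return the row-node pairs whose shared-neighbour count is significant."""
    n_rows, n_cols = len(biadjacency), len(biadjacency[0])
    tests = []
    for i, j in combinations(range(n_rows), 2):
        shared = sum(biadjacency[i][a] * biadjacency[j][a] for a in range(n_cols))
        probs = [p[i][a] * p[j][a] for a in range(n_cols)]
        tests.append(((i, j), poisson_binomial_sf(probs, shared)))
    # Benjamini-Hochberg: keep the largest rank r with p_(r) <= alpha * r / m.
    tests.sort(key=lambda t: t[1])
    m = len(tests)
    cutoff = 0
    for rank, (_, pval) in enumerate(tests, start=1):
        if pval <= alpha * rank / m:
            cutoff = rank
    return {pair for pair, _ in tests[:cutoff]}
```

For example, take toy probabilities of 0.1 everywhere (not a fitted model). Two accounts that share 5 of 6 neighbors are then linked, while an account sharing nothing with them stays isolated.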
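Step 6 only names the Louvain algorithm. The sketch below implements just its first phase, the local-moving step, on an unweighted, undirected edge list, which is an assumption about the input format. In this phase, each node moves to the neighboring community that gives the largest modularity gain, until no node moves. The full algorithm then aggregates each community into a super-node and repeats; that aggregation is omitted here. `louvain_local_moving` is a hypothetical name.

```python
from collections import defaultdict


def louvain_local_moving(edges, max_passes=10):
    """First (local-moving) phase of Louvain on an undirected edge list."""
    adj = defaultdict(lambda: defaultdict(float))
    for u, v in edges:
        adj[u][v] += 1.0
        adj[v][u] += 1.0
    degree = {n: sum(nbrs.values()) for n, nbrs in adj.items()}
    two_m = sum(degree.values())
    community = {n: n for n in adj}   # start with one community per node
    tot = dict(degree)                # total degree of each community
    for _ in range(max_passes):
        moved = False
        for node in adj:
            current, k = community[node], degree[node]
            # Edge weight from node into each neighbouring community.
            links = defaultdict(float)
            for nbr, w in adj[node].items():
                if nbr != node:
                    links[community[nbr]] += w
            tot[current] -= k  # take the node out of its community
            # Gain of joining community c is proportional to k_in - tot_c * k / 2m.
            best = current
            best_gain = links.get(current, 0.0) - tot[current] * k / two_m
            for c, k_in in links.items():
                gain = k_in - tot[c] * k / two_m
                if gain > best_gain:
                    best, best_gain = c, gain
            tot[best] += k
            community[node] = best
            moved = moved or best != current
        if not moved:
            break
    return community
```

In practice, library implementations that include the aggregation phase would normally be preferred over this partial sketch.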
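Step 7 ranks accounts with the Hubs-Authorities (HITS) algorithm. Below is a minimal power-iteration sketch. It assumes that an edge `(u, v)` means content flows from `u` to `v` (that is, `v` retweeted `u`). Under that orientation, accounts whose posts are picked up by good "authorities" receive high hub scores. The summary does not spell out the edge direction, so this is an assumption, and the account names in the example are hypothetical.

```python
def hits(edges, max_iter=100, tol=1e-10):
    """Hub and authority scores by power iteration (Kleinberg's HITS).

    Assumed convention: (u, v) means v retweeted u, i.e. content flows u -> v.
    """
    nodes = sorted({n for edge in edges for n in edge})
    out_links = {n: [] for n in nodes}
    in_links = {n: [] for n in nodes}
    for src, dst in edges:
        out_links[src].append(dst)
        in_links[dst].append(src)

    def normalise(scores):
        norm = sum(s * s for s in scores.values()) ** 0.5 or 1.0
        return {n: s / norm for n, s in scores.items()}

    hub = {n: 1.0 for n in nodes}
    auth = {n: 1.0 for n in nodes}
    for _ in range(max_iter):
        # Authority score: sum of hub scores of the nodes pointing to it.
        new_auth = normalise({n: sum(hub[s] for s in in_links[n]) for n in nodes})
        # Hub score: sum of authority scores of the nodes it points to.
        new_hub = normalise({n: sum(new_auth[t] for t in out_links[n]) for n in nodes})
        change = max(abs(new_hub[n] - hub[n]) + abs(new_auth[n] - auth[n]) for n in nodes)
        hub, auth = new_hub, new_auth
        if change < tol:
            break
    return hub, auth
```

With edges `A→u1, A→u2, A→u3, B→u1`, account `A` gets a higher hub score than `B`, and `u1` is the strongest authority.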
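Step 8 combines a polarization index with label propagation. The exact index of Bessi et al. (2016) is not reproduced in this summary. The sketch below therefore assumes a simple share-based variant: a user's polarization is the fraction of their interactions with verified accounts that fall in the user's most frequent community. Users without such interactions then inherit the majority label of already-labeled neighbors, round by round. The function names, data layout, and labels are hypothetical.

```python
from collections import Counter


def polarization(retweeted, community_of):
    """Return (dominant community, share of interactions with it) for one user.

    retweeted: verified accounts the user interacted with (repeats allowed).
    community_of: verified account -> community label.
    """
    counts = Counter(community_of[v] for v in retweeted if v in community_of)
    total = sum(counts.values())
    if total == 0:
        return None, 0.0
    label, top = counts.most_common(1)[0]
    return label, top / total


def propagate_labels(adjacency, seeds, max_rounds=50):
    """Majority-vote label propagation; labels, once assigned, are kept."""
    labels = dict(seeds)
    for _ in range(max_rounds):
        updates = {}
        for node, neighbours in adjacency.items():
            if node in labels:
                continue
            votes = Counter(labels[n] for n in neighbours if n in labels)
            if votes:
                updates[node] = votes.most_common(1)[0][0]
        if not updates:
            break
        labels.update(updates)
    return labels
```

One plausible pipeline computes `polarization` for every unverified user who interacts with verified accounts. It keeps those above some chosen threshold as seeds, then calls `propagate_labels` on the validated network to label the rest.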

The study then analyzes the overlap of bots among the followers of influential accounts and identifies 'bot squads'.
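The summary does not spell out how bot squads are delimited. The sketch below assumes a hypothetical mapping from each hub to the set of its followers already classified as bots. It measures the pairwise Jaccard overlap between hubs' bot followers and collects the bots that follow at least `min_hubs` hubs, where `min_hubs` is a hypothetical threshold. Large overlaps across several hubs are the kind of signal that points to a squad.

```python
from collections import Counter
from itertools import combinations


def bot_overlap(bot_followers):
    """Jaccard overlap of bot-follower sets for every pair of hubs."""
    overlap = {}
    for a, b in combinations(sorted(bot_followers), 2):
        sa, sb = bot_followers[a], bot_followers[b]
        union = sa | sb
        overlap[(a, b)] = len(sa & sb) / len(union) if union else 0.0
    return overlap


def shared_bots(bot_followers, min_hubs=2):
    """Bots that follow at least `min_hubs` of the given hubs."""
    counts = Counter(bot for bots in bot_followers.values() for bot in bots)
    return {bot for bot, c in counts.items() if c >= min_hubs}
```

For instance, with `{"hub_a": {"b1", "b2", "b3"}, "hub_b": {"b1", "b2"}, "hub_c": {"b4"}}`, hubs `hub_a` and `hub_b` overlap with a Jaccard index of 2/3, and `b1`, `b2` are the bots shared between hubs.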

Key Findings

The study yielded several significant findings:

1. **Significant Bot Presence:** After filtering out random noise using entropy-based null models, the analysis revealed a considerable presence of bots among the followers of influential accounts ('hubs'). These bots significantly contribute to the spread of information, particularly from these hubs.

2. **Bot Squads:** The research discovered the existence of 'bot squads' – groups of bots that simultaneously follow and retweet messages from multiple hubs. This coordinated behavior significantly enhances the reach and visibility of politically charged messages, especially among right-wing political accounts.

3. **Polarization:** Users in the validated network were highly polarized, predominantly interacting with accounts from a single community. The polarization index and label propagation procedure effectively assigned political affiliations, with a clear disparity in polarization between human users and bots.

4. **Hub Dominance:** The accounts with the highest hub scores in the validated retweet network were predominantly associated with the right-wing political spectrum, with Matteo Salvini (leader of the Lega party) in a leading position. Accounts from opposing political viewpoints received significantly lower hub scores.

5. **Source of Bot Content:** A significant portion of the original tweets created by the bots in the largest bot squad consisted of links. The overwhelming majority of these links pointed to voxnews.info, a website identified by several fact-checking sites as a source of misinformation. This points to a coordinated attempt to spread disinformation through bot activity.

6. **Limited Bot Impact in Validated Network:** The percentage of bots in the validated network was considerably lower than in the original network, suggesting that the bulk of bot activity does not significantly contribute to the spread of significant, non-random information in the political discussions around migration.

Discussion

The findings highlight the strategic deployment of bots to amplify specific political narratives. The discovery of 'bot squads' reveals a new dimension of bot behavior: organized coordination to promote messages from multiple influential accounts. This coordination lets a small set of accounts amplify their messages disproportionately across the network and reach a larger audience. It suggests that bot operations go beyond simple amplification of individual accounts toward more sophisticated coordinated information campaigns. The polarization observed in the validated network further underscores users' tendency to engage primarily with accounts that share their political views. The results strongly suggest that bots intensify this effect, further isolating these echo chambers and reinforcing political divides. The most influential accounts and those with the largest bot following lie largely on the right of the political spectrum, which warrants further investigation into potential systemic bias in the identified network. The concentration of bot activity around certain political viewpoints suggests a strategic effort to manipulate online discourse, particularly on politically sensitive topics. The study adds to the growing body of evidence that bots significantly affect online political discourse. It also highlights the need for more effective methods to detect and mitigate bot-driven propaganda.

Conclusion

This study demonstrates that social bots are not merely amplifying individual accounts but are actively engaged in coordinated campaigns ('bot squads') to promote specific political viewpoints. The identification of these squads and the source of the misinformation they disseminate (voxnews.info) provides critical insights into the strategies employed for online political manipulation. Further research should focus on the evolution of bot strategies, the development of more robust bot detection techniques, and the societal implications of these manipulative tactics. The effectiveness of the entropy-based null model in isolating significant communication patterns underscores the potential of such methods in analyzing complex social networks.

Limitations

The study focuses on a specific time period and topic (migration from North Africa to Italy), limiting the generalizability of the findings to other contexts. The accuracy of the bot detection classifier is dependent on the quality of the training data and the evolving nature of bot technology. While the entropy-based null model effectively filters out random noise, it's essential to acknowledge that other unmeasured factors might influence network structure and interaction patterns. Finally, the study relies on publicly available Twitter data, which may not represent the full extent of bot activity on the platform.
