
The Goldilocks paradigm: comparing classical machine learning, large language models, and few-shot learning for drug discovery applications

S. H. Snyder, P. A. Vignaux, et al.

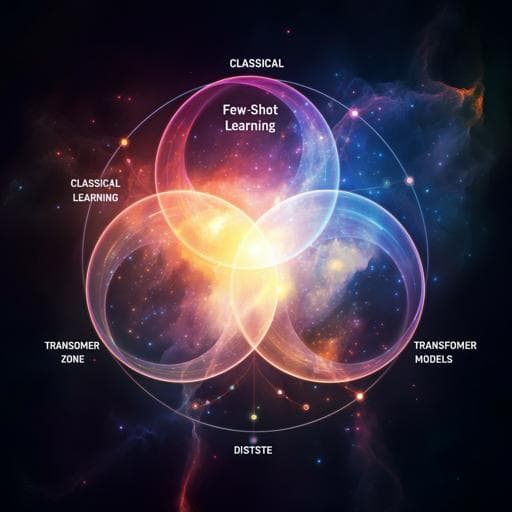

Machine learning is widely used in early drug discovery to predict target activity and ADME/Tox properties and to enable virtual screening. Classical ligand-based approaches (e.g., QSAR/QSPR with fingerprints and physicochemical descriptors) often require substantial data to perform well, limiting utility on targets with few known compounds. Transformer-based LLMs applied to SMILES and sequence models promise transfer learning advantages but typically require large pretraining and may struggle when downstream datasets are small. Few- and zero-shot methods have emerged to handle micro-data settings. Despite this, there have been few systematic comparisons across algorithms as a function of dataset size and chemical diversity. The study asks: given a new dataset, how should one choose between classical ML, pre-trained transformers, and few-shot learning? The purpose is to define a data-driven heuristic (the Goldilocks paradigm) that maps dataset size and diversity to the most appropriate modeling approach, and to validate it across thousands of targets and in a practical kinase inhibitor discovery task relevant to Alzheimer’s disease.

Prior work established strong performance of classical ML (e.g., RF, SVR, NB, KNN, DNN) on QSAR/QSPR tasks with sufficient data and appropriate descriptors (ECFP6, MACCS, RDKit, Mordred). Transfer learning and multitask models, including RNN/LSTM and transformer architectures using SMILES, have improved predictions when large pretraining corpora are available. However, drug discovery datasets for single targets are often small (tens of compounds), which makes it difficult to fine-tune transformers without transfer learning. Few-shot and zero-shot learning have shown state-of-the-art performance in low-data settings in vision and language and are being adapted to cheminformatics. Large-scale benchmarking across thousands of datasets has compared classical algorithms and deep learning, showing comparable or incremental advantages depending on context, but direct comparisons across dataset size and diversity tiers, especially ones that include pre-trained transformers and few-shot learning classifiers (FSLC), have been limited. This work addresses that gap, using transfer learning (MolBART), classical SVR/SVC with ECFP6, and prototypical-network FSLC to systematically assess performance across data regimes.

Overview: The study compares three modeling paradigms: classical ML (SVR/SVC with ECFP6), a pre-trained transformer (MolBART) fine-tuned per endpoint, and few-shot learning classifiers (FSLC) using a prototypical network with episodic training. Performance is analyzed as a function of dataset size and chemical diversity, with a diversity metric derived from the area under the cumulative scaffold frequency plot (CSFP) based on Bemis–Murcko scaffolds computed with RDKit. A FIGS decision-tree classifier is then trained to predict the best algorithm given dataset size and diversity. A practical validation on kinase targets (GSK3B, ABL1, FYN, CDK5, MARK1) includes prospective screening for MARK1.

Classical ML (SVR/SVC): Using Assay Central, SVR models were trained for regression across 2401 ChEMBL single-target endpoint datasets (Ki, IC50, EC50), after removing datasets with fewer than 20 datapoints. Standardization included E-Clean and RDKit processing; activities were converted to −log[M], duplicates averaged by InChIKey. ECFP6 fingerprints and other descriptors were used; nested 5-fold cross-validation was applied for hyperparameter selection and internal validation. SVC models were used for classification comparisons against FSLC in the kinase few-shot benchmarking using identical support/query sizes.

Transformer (MolBART): The Chemformer molBART (Bidirectional Auto-Regressive Transformer with encoder-decoder) pre-trained model was fine-tuned for each of the 2401 endpoints using PyTorch Lightning for 150 epochs. Fine-tuning used the aggregated dataset split 70/5/25 into train/validation/test. Analyses examined dependence of R² on training set size and diversity, and comparisons to SVR per target.

Diversity metric: For each dataset, Bemis–Murcko scaffolds were computed. Diversity was defined from the CSFP as div = 2(1 − AUC), where AUC is the area under the CSFP curve. A perfect diversity score with all unique scaffolds gives div = 1; a single-scaffold dataset gives div = 0.

Few-shot learning classifier (FSLC): 371 kinase datasets from ChEMBL were curated; those with <20 actives or <20 inactives were removed, leaving 95 kinases. Data were binarized at 100 nM (−log[M] ≥ 7). Splits: 70/15/15 train/validation/test by kinase. The FSLC uses a two-stage embedding (either FNN for ECFP 2048-bit, radius 5, or GCN for graph embeddings) followed by an IterRefLSTM for iterative refinement and a prototypical network for distance-based classification. Episodic training sampled support (S) and query (B) sets per task with various shots (e.g., 10-10, 5-10, 1-10, 5-5, 1-5, 1-1 actives/inactives). Loss: negative log-likelihood; optimizer: Adam; training until loss ≤ 1e−6. Evaluation used PR AUC and repeated 1000 random samplings per test task; additional metrics reported include ROC AUC, average precision, F1, Cohen’s kappa (CK), and MCC. SVC baselines were trained on identical support/query compositions.

FIGS decision model: Fast Interpretable Greedy-Tree Sums (hyperparameter: number of trees = 3) was trained to predict the best-performing algorithm (MolBART, SVM, FSLC encoded as 0/1/2) using only dataset size and diversity as inputs. Performance was measured on a held-out test set.

Kinase case study and prospective MARK1 screen: Classification and regression models were built for GSK3B, ABL1, FYN, CDK5, and MARK1 using ChEMBL (training) and BindingDB (external tests when available). Activity thresholds: 100 nM for GSK3B and ABL1; 1 μM for FYN, CDK5, MARK1. Dataset sizes and diversity were recorded. For MARK1 (only 18 training compounds; 5 actives/13 inactives; no external test), a prospective screen of the MedChemExpress FDA-Approved & Pharmacopeial Drug Library (2637 compounds) was conducted using Promega ADP-Glo assay at 385 μM. Hits were followed up by Z′-LYTE IC50 determination. Predictions from SVR/SVC, fine-tuned MolBART, and pre-trained kinase FSLC were compared against experimental outcomes. Similarity analyses (MACCS Tanimoto) and t-SNE visualizations characterized chemical space coverage.
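The diversity metric described above, div = 2(1 − AUC), can be illustrated with a short Python sketch. The summary does not spell out how the CSFP axes are normalized, so the construction here is an assumption chosen to match the two stated limiting cases: scaffolds are ranked from most to least frequent, the x-axis is scaffold rank divided by the number of molecules, the y-axis is the cumulative fraction of molecules covered, and the area is taken with the trapezoid rule. The function name `scaffold_diversity` is hypothetical. Scaffold labels are assumed to be precomputed, for example as Bemis–Murcko scaffold SMILES from RDKit.

```python
from collections import Counter
from typing import Iterable


def scaffold_diversity(scaffolds: Iterable[str]) -> float:
    """Return div = 2 * (1 - AUC) for one dataset.

    `scaffolds` holds one scaffold label per molecule, e.g. a
    Bemis-Murcko scaffold SMILES computed beforehand.
    """
    # Molecules per scaffold, most populated scaffold first.
    counts = sorted(Counter(scaffolds).values(), reverse=True)
    n_molecules = sum(counts)
    if n_molecules == 0:
        raise ValueError("need at least one molecule")

    # ASSUMPTION (not stated in the source): x is scaffold rank divided by
    # the number of molecules, y is the cumulative fraction of molecules.
    xs, ys = [0.0], [0.0]
    covered = 0
    for rank, count in enumerate(counts, start=1):
        covered += count
        xs.append(rank / n_molecules)
        ys.append(covered / n_molecules)

    # Fewer scaffolds than molecules: the curve has already reached y = 1,
    # so extend it flat to x = 1.
    if xs[-1] < 1.0:
        xs.append(1.0)
        ys.append(1.0)

    # Trapezoid rule over consecutive points of the curve.
    auc = sum(
        (x1 - x0) * (y0 + y1) / 2.0
        for x0, x1, y0, y1 in zip(xs, xs[1:], ys, ys[1:])
    )
    return 2.0 * (1.0 - auc)
```

Under this construction a dataset in which every molecule has its own scaffold gives div = 1, and a dataset of N molecules sharing one scaffold gives div = 1/N, which approaches 0 as N grows. The published implementation may normalize the curve differently.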

- Transformer vs. classical regression across 2401 endpoints: MolBART’s test R² was largely independent of both training set size (correlation ~0.068) and diversity (correlation 0.13), while SVR R² increased with more data and decreased with higher diversity. For small-to-medium and diverse datasets, MolBART often outperformed SVR; as datasets grew large, SVR surpassed MolBART.

- Diversity effects: Increasing molecular diversity (more unique Murcko scaffolds; higher div) negatively impacted SVR R² but not MolBART R². The difference MolBART R² − SVR R² correlated positively with diversity and negatively with test set size, revealing a data-diversity ‘sweet spot’ favoring MolBART under high diversity and low-to-moderate sizes (<~240 datapoints).

- Few-shot learning under micro-data: FSLC maintained reasonable performance down to extreme micro-sets (e.g., 1 active/1 inactive), with mean ROC AUC around 0.64–0.68 and F1 around 0.56–0.62 across validation tasks. By contrast, SVC largely failed to learn in the 1-10 and 1-5 shot settings (e.g., ROC AUC ~0.503, F1 ~0.01–0.02) and began to converge only near 10-10 shots. FSLC therefore dominates for datasets with fewer than ~20 datapoints.

- Heuristic classifier (FIGS): Using only dataset size and diversity, FIGS predicted the winning model class with ROC 0.74, precision 0.77, recall 0.63, accuracy 0.75, specificity 0.84, MCC 0.49 on held-out kinases, supporting the Goldilocks heuristic.

- Kinase case study (AD-relevant): Training set sizes/diversities: GSK3B (2969, div 0.52), ABL1 (1791, 0.52), FYN (1070, 0.74), CDK5 (309, 0.61), MARK1 (18, n/a). External test sets were available for all except MARK1. Prospective MARK1 screening identified five previously unreported inhibitors (baricitinib, AT9283, ON123300, upadacitinib, tofacitinib). On the MARK1 prospective test, SVC predicted no actives (TP=0), MolBART identified 3/5 actives (precision 0.6), and FSLC identified all 5 actives (precision 1.0). Novel hits showed moderate MACCS similarities to training (max 0.79; most 0.56–0.75), and t-SNE indicated exploration beyond training chemical space.

- Goldilocks heuristic summary: FSLC excels for very small datasets (<50 molecules, especially <20); pre-trained transformers (MolBART) excel for 50–240 molecules, particularly with high diversity; classical models (e.g., SVR/SVC) excel when datasets exceed ~240 molecules.
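The size thresholds in the heuristic summary can be written as a small lookup. This is a minimal sketch and not the trained FIGS model: it uses dataset size only, treats the approximate cut-offs (50 and ~240 molecules) as hard boundaries, and assigns a dataset of exactly 240 molecules to the transformer tier, all of which are simplifying assumptions. The function name `goldilocks_choice` and the returned labels are hypothetical.

```python
def goldilocks_choice(n_molecules: int) -> str:
    """Suggest a model family from dataset size alone.

    Thresholds follow the summary above: <50 few-shot, 50-240 pre-trained
    transformer, >240 classical ML. Diversity is deliberately ignored here,
    although the source notes that the transformer tier benefits most when
    diversity is high.
    """
    if n_molecules < 1:
        raise ValueError("dataset must contain at least one molecule")
    if n_molecules < 50:
        return "few-shot (FSLC)"
    if n_molecules <= 240:  # ASSUMPTION: the ~240 boundary is inclusive
        return "pre-trained transformer (MolBART)"
    return "classical ML (SVR/SVC)"


# Training set sizes reported for the kinase case study.
kinase_sizes = {"GSK3B": 2969, "ABL1": 1791, "FYN": 1070, "CDK5": 309, "MARK1": 18}
suggestions = {name: goldilocks_choice(n) for name, n in kinase_sizes.items()}
```

With the reported training set sizes, this lookup places MARK1 (18 compounds) in the few-shot tier and the other four kinases in the classical tier. For datasets near a boundary, a learned model that also takes diversity into account, as FIGS does in the study, is likely to be a better guide than a fixed cut-off.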

The work addresses the central practical question of model selection in drug discovery by showing that performance depends jointly on dataset size and scaffold diversity. MolBART’s transfer learning capability confers robustness to high diversity and limited data, whereas classical SVR/SVC models scale with dataset size but degrade with increasing diversity. FSLC uniquely addresses micro-data regimes by leveraging task-similar pretraining and prototypical representations. The FIGS model demonstrates that simple, interpretable features (size and diversity) suffice to predict the best-performing approach, indicating a generalizable relationship. Application to kinase inhibitors associated with Alzheimer’s disease validates the heuristic: classical models and MolBART work well for larger datasets and external tests, while FSLC discovers multiple novel inhibitors in a sparse, low-data MARK1 scenario, including actives not present in training chemical space. These results guide practitioners to select algorithms likely to yield the best predictive and prospective performance based on readily computable dataset characteristics.

This study defines a practical Goldilocks paradigm for algorithm selection in ligand-based drug discovery: few-shot learning dominates for very small datasets (<50 molecules; especially <20 datapoints), pre-trained transformers such as MolBART dominate for small-to-medium diverse datasets (50–240 molecules), and classical algorithms (e.g., SVR/SVC with ECFP6) dominate for larger datasets (>240 molecules). A simple, interpretable FIGS model using only dataset size and diversity can predict the best approach (ROC ~0.74). Prospective validation on MARK1 identified five novel inhibitors, confirming FSLC’s advantage in sparse data and MolBART’s utility even when trained on very small sets. Future work should test the heuristic across broader target classes beyond kinases, incorporate additional molecular representations and 3D/structure-based features, expand data sources, and evaluate more model families to refine and generalize the selection framework.

The study focuses on kinases, which may have higher inter-target similarity and richer data than many other target classes, potentially biasing conclusions. Only ligand-based 2D descriptors (e.g., ECFP6, MACCS) and SMILES-based transformer encodings were explored; no 3D structure-based or physics-informed features were included. Public datasets were limited to ChEMBL and BindingDB; other sources could alter results. Data curation aggregates heterogeneous assay conditions and may include experimental noise and dataset composition bias. The comparative analysis centered on SVR/SVC, MolBART, and a specific FSLC architecture; other classical or deep approaches (e.g., GNNs, multitask models) could perform differently.
