The Goldilocks paradigm: comparing classical machine learning, large language models, and few-shot learning for drug discovery applications

S. H. Snyder, P. A. Vignaux, et al.

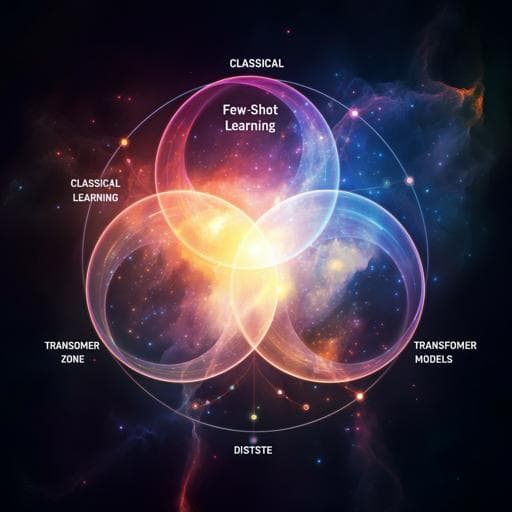

This study by Scott H. Snyder, Patricia A. Vignaux, Mustafa Kemal Ozalp, Jacob Gerlach, Ana C. Puhl, Thomas R. Lane, John Corbett, Fabio Urbina, and Sean Ekins compares classical machine learning, large language models, and few-shot learning for drug discovery applications. It examines how dataset size and diversity create a 'Goldilocks zone' in which SVR, FSLC, or transformer models perform best.
