
Reliable and easy-to-use calculating tool for the Nail Psoriasis Severity Index using deep learning

H. Horikawa, K. Tanese, et al.

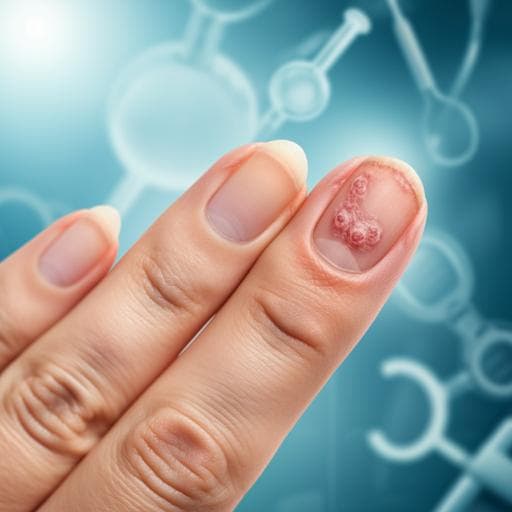

Psoriasis is a common inflammatory skin disease, and 10–80% of patients have nail involvement. Nail psoriasis causes cosmetic concerns, functional limitations, and reduced quality of life, and it is a risk factor for psoriatic arthritis. The Nail Psoriasis Severity Index (NAPSI) is a widely used tool that assesses nail matrix and nail bed findings across four quadrants per nail, producing a score from 0 to 8 for each nail. Despite its validation, NAPSI’s utility is limited by interobserver variability and by the effort required to learn and consistently apply its partly subjective scoring criteria. Prior reports show wide variability in dermatologists’ scores even on the same images. This study addresses these issues by developing an easy-to-use deep learning-based tool, the "NAPSI calculator", that reliably detects nails in images and accurately scores NAPSI, with the aim of improving reproducibility and clinical usability.
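To make the 0 to 8 range concrete, the sketch below computes a per-nail NAPSI under the usual convention that each of the four quadrants contributes one point if any nail matrix finding is present and one point if any nail bed finding is present. The `NailQuadrants` container and `napsi_score` function are hypothetical names introduced for illustration only; they are not part of the study's code.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class NailQuadrants:
    """Per-quadrant findings for one nail (hypothetical container)."""
    matrix: Sequence[bool]  # True where any nail matrix finding is present
    bed: Sequence[bool]     # True where any nail bed finding is present


def napsi_score(nail: NailQuadrants) -> dict:
    """Return matrix (0-4), bed (0-4), and total (0-8) NAPSI for one nail."""
    if len(nail.matrix) != 4 or len(nail.bed) != 4:
        raise ValueError("each nail needs exactly four quadrants")
    matrix = sum(bool(q) for q in nail.matrix)  # one point per affected quadrant
    bed = sum(bool(q) for q in nail.bed)
    return {"matrix": matrix, "bed": bed, "total": matrix + bed}


example = NailQuadrants(
    matrix=[True, True, False, False],
    bed=[False, True, False, False],
)
print(napsi_score(example))
```

The subjective part of NAPSI lies in deciding whether a finding is present in a quadrant, not in this arithmetic, which is why two raters can reach different totals for the same image.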

Deep learning has shown strong performance in various medical imaging tasks, including dermatologist-level skin cancer classification and differential diagnosis of diverse skin diseases. Four prior studies have attempted NAPSI-specific automation with different approaches, and each retained limitations: (1) a system that achieved high accuracy in recognizing individual nail psoriasis findings required dedicated imaging of each nail and evaluated final NAPSI against a single dermatologist on only 10 images; (2) a model that divided nails into quadrants aligned with NAPSI had insufficient lesion detection performance and did not evaluate final NAPSI; (3) a key-point detection approach for modified NAPSI (mNAPSI) required specific photography, lacked validation of robustness to hand shape variation, and used skewed data in which ~80% of scores were 0–1 (maximum observed 6 of 14); (4) an approach using eight separate object detection models, one per NAPSI finding, required pre-processing such as manual cropping, classification, and rotation based on hand shape. These gaps underscore the need for a practical, accurate, and fully automated NAPSI tool that operates on general clinical photographs without special equipment or extensive manual preparation.

Overall design: The pipeline comprises two sequential steps and a final integrated tool. Step 1 detects nails in hand/foot images. Step 2 predicts NAPSI for cropped nail images (separately for the nail matrix and nail bed, then summed to a total). Step 3 integrates steps 1 and 2 as the "NAPSI calculator" and compares its performance with that of clinicians. All code ran on Python 3.7.7 and PyTorch 1.10.2.

Step 1 (Nail detection): Data consisted of 995 hand/foot images from Google image search (training/validation) and 881 hand/foot images from 78 patients with nail psoriasis at Keio University Hospital (test). Search terms included English and Japanese keywords for nails and nail diseases. The 995 web images were split into 700 training and 295 validation images; the 881 patient images formed the test set. Annotations were bounding boxes for nail regions. Augmentations on training data included color normalization, flipping, rotation, and color perturbation. Model: SSD (Single Shot MultiBox Detector) with an ImageNet-pretrained VGG16 backbone. Candidate rectangles were selected using the Jaccard index (IoU threshold 0.5). Losses: Smooth L1 for box regression and cross-entropy for object classification (nail vs background). Hyperparameters: batch size 16, learning rate 0.001, momentum 0.9, weight decay 0.0005. Evaluation: mean average precision (mAP), i.e., the area under the precision–recall curve. Ten independent trials of 500 training epochs each were run, re-splitting train/val and reinitializing weights each time.

Step 2 (NAPSI scoring on cropped nails): From the 881 patient images, 3783 nail crops were extracted; toenails and very low-resolution images (<250×250) were excluded, yielding 2939 fingernail images. Data were split without patient overlap into training, validation, and test sets (approx. 70%/15%/15% by repeated random splits), e.g., 2000/470/469 images. Training augmentations: resize to 256×256, 50% horizontal flip, up to 45° rotation, and standard ImageNet normalization; validation used resize and normalization only.
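Splitting "without patient overlap" means the split is drawn over patients rather than over individual nail crops, so that nails from one person never appear in both training and test data. A minimal sketch of such a split follows, assuming each record is a `(patient_id, image_path)` pair. The function name, record layout, and seed handling are illustrative assumptions, not the authors' implementation.

```python
import random
from collections import defaultdict


def split_by_patient(records, fractions=(0.70, 0.15, 0.15), seed=0):
    """Split (patient_id, image_path) records so no patient spans two subsets.

    Fractions apply to the number of patients, so the resulting image
    counts are only approximately 70/15/15.
    """
    by_patient = defaultdict(list)
    for patient_id, image_path in records:
        by_patient[patient_id].append(image_path)

    patients = sorted(by_patient)              # fixed order before shuffling
    random.Random(seed).shuffle(patients)      # a new seed gives a new split

    n_train = round(fractions[0] * len(patients))
    n_val = round(fractions[1] * len(patients))
    groups = {
        "train": patients[:n_train],
        "val": patients[n_train:n_train + n_val],
        "test": patients[n_train + n_val:],
    }
    return {
        name: [(p, img) for p in ids for img in by_patient[p]]
        for name, ids in groups.items()
    }


# Toy data: 20 hypothetical patients with 1 to 5 nail crops each.
records = [
    (f"p{i:02d}", f"p{i:02d}_nail{j}.png")
    for i in range(20)
    for j in range(1 + i % 5)
]
splits = split_by_patient(records, seed=1)
print({name: len(items) for name, items in splits.items()})
```

Because patients contribute different numbers of nails, the image-level proportions drift from the patient-level ones, which is consistent with the source describing the split as approximate.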
Annotations: nine board-certified dermatologists (nail experts) provided nail matrix NAPSI (0–4), nail bed NAPSI (0–4), and total NAPSI (0–8). Models: two ImageNet-pretrained VGG16 classifiers, one for the matrix and one for the bed (5 classes each); their outputs were summed to give total NAPSI (also treated as a 9-class severity classification). Loss: cross-entropy multiplied by the squared difference between predicted and annotated NAPSI, to penalize larger errors more heavily. Hyperparameters: batch size 16, learning rate 0.0001, momentum 0.9. Evaluation: due to class imbalance, both micro and macro accuracies were used; predictions within ±1 point of the annotation were considered accurate for class-wise accuracy. Ten independent constructions of 200 epochs each were run, re-splitting train/val and reinitializing weights; mean accuracies and 95% CIs are reported.

Step 3 (Integrated "NAPSI calculator"): Data: 29 images (27 hands, 2 fingers) from 12 patients with nail psoriasis (June 2020–November 2021), totaling 138 nails. These patients were not part of the step 1–2 train/val sets. Annotations mirrored step 2 (nine nail expert dermatologists). Integration: the best-performing nail detector from step 1 and the 10 trained step-2 models were combined to produce 10 calculators. Comparators: six non-board-certified dermatology residents and four board-certified dermatologists (non-nail experts) independently scored NAPSI. Statistics: mean micro accuracy of the calculator vs residents was compared by Welch’s t-test (p<0.05 significant).

Additional analyses: Grad-CAM visualization was used to assess which image regions influenced predictions. Runtime was measured on a laptop with an Intel Core i7 (quad-core) and 16 GB RAM (approx. 0.85 s per hand image for detection; 0.95 s per nail image for scoring).

Ethics: approved by the Keio University School of Medicine Ethics Committee (#20150326) with opt-out consent.
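The step 2 loss can be illustrated with a framework-free sketch for a single sample. It takes the description literally: ordinary cross-entropy, multiplied by the squared gap between the predicted class (taken here as the arg-max of the logits) and the annotated class. How the authors obtained the predicted score, and whether they added an offset so that exact matches still contribute to the loss, is not stated in the source; both choices below are assumptions.

```python
import math


def cross_entropy(logits, target):
    """Cross-entropy of one sample from raw class scores (log-sum-exp form)."""
    peak = max(logits)  # subtract the max for numerical stability
    log_norm = peak + math.log(sum(math.exp(z - peak) for z in logits))
    return log_norm - logits[target]


def distance_weighted_ce(logits, target):
    """Cross-entropy scaled by the squared gap between predicted and annotated class.

    Assumption: the predicted class is the arg-max of the logits.
    """
    predicted = max(range(len(logits)), key=lambda k: logits[k])
    return cross_entropy(logits, target) * (predicted - target) ** 2


# Five-class example (matrix or bed NAPSI 0-4); the model favours class 2.
logits = [0.1, 0.2, 3.0, 0.3, 0.1]
for annotated in range(5):
    print(annotated, round(distance_weighted_ce(logits, annotated), 3))
```

Under this literal reading, a prediction that is off by two points is weighted four times as heavily as one that is off by one point, and an exact match receives zero weight. In PyTorch the same idea could be written per batch with `torch.nn.functional.cross_entropy(logits, target, reduction="none")` multiplied by `(logits.argmax(dim=1) - target) ** 2`, again as a sketch rather than the published implementation.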

Step 1 (detection): Mean mAP was 93.8%, with minimal variability across 10 trials (95% CI, 93.4–93.9%). Nails partially out of frame were detected; very small nails (e.g., little toe) were sometimes missed.

Step 2 (scoring cropped nails): Micro average accuracy was 82.8% (95% CI, 81.7–83.9%). Error distribution: 0-point error 43.1% (95% CI, 42.1–44.1%); 1-point error 39.6% (95% CI, 38.3–40.9%); 2-point error 12.4% (95% CI, 11.3–13.5%); >2-point error 4.8% (95% CI, 4.1–5.6%). Macro average accuracy within ±1 point was 82.7% (95% CI, 81.6–83.8%). Predictions concentrated within ±1 point across all annotated NAPSI scores.

Step 3 (integrated tool): Nail detection accuracy was 99.3% (137/138 nails). Mean micro accuracy across the 10 calculators was 83.9% (95% CI, 81.6–86.2%). Error distribution: 0-point error 47.5% (95% CI, 46.1–48.9%); 1-point error 36.4% (95% CI, 33.8–39.1%). Human comparators: residents achieved 65.7% micro accuracy (95% CI, 54.5–76.9%), with 0-point error 32.9% (95% CI, 26.0–39.7%) and 1-point error 32.9% (95% CI, 28.2–37.5%); board-certified dermatologists (non-nail experts) achieved 73.0% micro accuracy (95% CI, 66.8–79.2%), with 0-point error 39.1% (95% CI, 33.9–44.4%) and 1-point error 33.9% (95% CI, 28.9–38.9%). The calculator significantly outperformed residents (p=0.008) and board-certified dermatologists (p=0.005).

Longitudinal case: the calculator tracked a patient’s NAPSI over time, correctly reflecting a transient exacerbation and final improvement. Runtime: ~0.85 s per hand image for detection and ~0.95 s per nail for scoring on a standard laptop. Grad-CAM: the model often highlighted psoriasis-relevant regions but occasionally focused on non-finding areas, indicating incomplete feature learning.
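The accuracy figures above rest on two ideas: a prediction counts as correct when it lies within ±1 point of the annotation, and accuracy is averaged either over all nails (micro) or over annotated score classes (macro). The sketch below shows one way to compute both, together with the error distribution, under the usual definitions of micro and macro averaging. The function names and the toy data are illustrative assumptions, not taken from the study.

```python
from collections import defaultdict


def tolerance_accuracies(annotated, predicted, tolerance=1):
    """Micro and macro accuracy, counting |pred - true| <= tolerance as correct."""
    hits = defaultdict(int)
    totals = defaultdict(int)
    for true, pred in zip(annotated, predicted):
        totals[true] += 1
        hits[true] += abs(pred - true) <= tolerance
    micro = sum(hits.values()) / sum(totals.values())                # pooled over all nails
    macro = sum(hits[c] / totals[c] for c in totals) / len(totals)   # mean of per-class rates
    return micro, macro


def error_distribution(annotated, predicted):
    """Fraction of samples with absolute error 0, 1, 2, and more than 2 points."""
    bins = {"0": 0, "1": 0, "2": 0, ">2": 0}
    for true, pred in zip(annotated, predicted):
        err = abs(pred - true)
        bins[str(err) if err <= 2 else ">2"] += 1
    n = len(annotated)
    return {k: v / n for k, v in bins.items()}


# Toy, imbalanced example: four mild nails and two severe ones.
annotated = [0, 0, 0, 0, 8, 8]
predicted = [0, 1, 0, 1, 5, 8]
print(tolerance_accuracies(annotated, predicted))
print(error_distribution(annotated, predicted))
```

In the toy data the rare severe class is scored less reliably, so the macro accuracy falls below the micro accuracy. Reporting both, as the study does, guards against a dominant class masking poor performance on rarer scores.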

The study addresses the key limitation of NAPSI—interobserver variability—by automating detection and scoring with a deep learning pipeline. Automatic nail detection removes manual cropping and likely reduces interference from non-nail regions, improving generalization. Treating NAPSI as a discrete severity classification and modifying the loss to penalize larger ordinal errors concentrated predictions within ±1 point across the range of severity. Compared with non-expert clinicians, the tool demonstrated significantly higher accuracy and a higher proportion of exact matches, supporting improved reliability and reproducibility. The system operates on routine clinical photographs without special equipment, making it accessible and practical for clinical workflows and potentially expanding NAPSI use beyond expert centers. The detector’s high performance is attributed in part to diverse web-sourced training images, enhancing robustness. Grad-CAM suggests the model often leverages relevant features, though further work is needed to ensure comprehensive learning of all NAPSI findings. Overall, the results indicate that deep learning can mitigate subjectivity in NAPSI scoring and enhance consistent disease monitoring, including longitudinal assessment.

This work introduces the "NAPSI calculator," a deep learning tool that automatically detects nails and accurately scores NAPSI from standard clinical photographs. It achieves high detection performance and NAPSI accuracy, significantly outperforming non-expert dermatologists, and operates efficiently on a standard laptop, supporting real-world clinical adoption. By reducing interobserver variability and effort required for scoring, the tool can enhance the utility of NAPSI in routine care and research. Future directions include improving performance on challenging cases with complex distributions of findings, expanding and balancing datasets to reduce sampling bias, enhancing interpretability and feature localization (e.g., fully learning all eight NAPSI findings), and potentially extending to toe nails and broader populations.

- A notable fraction (17.2%) of images had errors of two points or more, often representing challenging cases (e.g., centrally located but small-area findings; strong matrix changes obscuring bed assessment) that are also difficult for clinicians.

- Sampling bias: the dataset may over-represent severe cases from the authors’ institution, which could reduce generalizability and risk overfitting to local image characteristics.

- The model distinguishes matrix vs bed but does not explicitly identify each of the eight NAPSI findings; ideal scoring would precisely assess all findings.

- Grad-CAM analyses indicate the model may not fully learn all psoriasis features or may at times focus on absence of findings, leaving room for accuracy improvements.

- Toenail images were excluded in step 2 because of deformation and scoring difficulty, which may limit the tool's applicability to toenails.
