
Nanoprinted high-neuron-density optical linear perceptrons performing near-infrared inference on a CMOS chip

E. Goi, X. Chen, et al.

The paper addresses how to perform cryptographic decryption, and optical inference more generally, directly in the optical domain, avoiding optical–electronic conversions and exploiting light’s inherent parallelism and speed. Traditional optical security approaches rely on phase masks optimised with algorithms such as Gerchberg–Saxton or wavefront matching and arranged in bulky multi-pass systems that are rigid and ill-suited to flexible authentication (e.g., biometric-like schemes). The authors propose compact, passive, single-layer holographic perceptrons, trained via machine learning, that can recognise either a specific key (symmetric decryption) or an entire class of keys (asymmetric decryption). They target near-infrared (NIR) operation and direct integration on CMOS imaging chips to enable unpowered decryption and inference at the speed of light. To reach high capacity and compactness at NIR telecom wavelengths, they use nanolithographic fabrication, specifically galvo-dithered two-photon nanolithography (GD-TPN), to realise high-neuron-density diffractive elements with precise axial control.

The literature background covers: (1) Optical encryption/decryption using phase masks designed by algorithms such as Gerchberg–Saxton or wavefront matching, which typically require multiple bulky optical elements and lack flexibility for multi-key authentication. (2) Optical implementations of matrix multiplication—fundamental to neural networks—using bulk optics (beam splitters, Mach–Zehnder interferometers) and integrated photonic circuits for optical signal processing and reconfigurable optical neural networks. (3) The emergence of diffractive neural networks, where diffractive elements implement learned linear transforms; prior fabrication methods have limited neuron densities, constraining compact NIR-capable devices. (4) Nanolithographic techniques as enablers for high-resolution, compact diffractive networks; among these, GD-TPN uniquely allows single-step, 3D free-form fabrication with lateral and axial resolution compatible with visible/NIR devices and printing on arbitrary substrates, including commercial CMOS sensors.

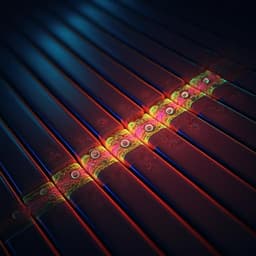

Design and training: The device is modelled as a transmission-mode diffractive neural network with three planes (input, MLD, output), each discretised into N×N pixels (neurons). Neurons between adjacent planes are coupled via Rayleigh–Sommerfeld diffraction. Input and output neurons are unbiased; each diffractive-layer neuron applies a learnable phase delay (bias) to the transmitted complex field. Training minimises a cross-entropy loss between the output intensity distribution and task-specific targets using backpropagation in TensorFlow. The design space includes neuron count, neuron pitch/diameter (density), and axial separations D1 (input–MLD) and D2 (MLD–output), all co-optimised via numerical sweeps.

Compact multilayer perceptron (CMLP): To increase learning capacity without sacrificing compactness, adjoining diffractive layers are explored. Unlike well-separated multilayer diffractive networks (where added layers improve accuracy), adjoining compact layers can often be represented by a single effective linear transform. Nonetheless, a two-layer compact configuration shows improved accuracy over a single layer for the 9-class task under specific parameters (pixel diameter 419 nm; D1≈70.7 µm, D2≈31.4 µm).

Tasks and datasets: Four machine-learning decryptors are trained. Symmetric: (i) MLD-T recognises a single correct key among distractors (handwritten letters) and displays an acceptance tick; (ii) MLD-B acts as a secure display, outputting a butterfly image only with the correct key. Asymmetric: (iii) 3-MLD and (iv) 9-MLD map any input within their respective handwritten letter classes to designated output detector regions (class indicators). All designs target λ=785 nm to match photoresist transmission. Training/test dataset details are referenced in Methods/Supplementary.

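The forward model in the design-and-training description (input plane, one phase-only diffractive layer, output plane, coupled by Rayleigh–Sommerfeld diffraction) can be sketched in a few lines. This is a minimal illustration, not the authors' TensorFlow code: it uses a brute-force direct summation that is only practical for very small N, the function names are hypothetical, and the loss assumes that the class "probabilities" are the normalised energies collected in each detector region, which the summary does not specify. The grid size, pitch and distances in the usage lines are toy values chosen only so the example runs quickly.

```python
import cmath
import math

WAVELENGTH_UM = 0.785  # design wavelength from the text (785 nm)


def rs_propagate(field, pitch_um, distance_um, wavelength_um=WAVELENGTH_UM):
    """Propagate an N x N complex field between two parallel planes by direct
    summation of the Rayleigh-Sommerfeld kernel (O(N^4), so small N only)."""
    n = len(field)
    k = 2.0 * math.pi / wavelength_um
    cell_area = pitch_um * pitch_um
    out = [[0j] * n for _ in range(n)]
    for yo in range(n):
        for xo in range(n):
            total = 0j
            for yi in range(n):
                for xi in range(n):
                    dx = (xo - xi) * pitch_um
                    dy = (yo - yi) * pitch_um
                    r = math.sqrt(dx * dx + dy * dy + distance_um * distance_um)
                    # w(r) = z / r^2 * (1 / (2 pi r) + 1 / (j lambda)) * exp(j k r)
                    kernel = (distance_um / (r * r)) * (
                        1.0 / (2.0 * math.pi * r) + 1.0 / (1j * wavelength_um)
                    ) * cmath.exp(1j * k * r)
                    total += field[yi][xi] * kernel
            out[yo][xo] = total * cell_area
    return out


def apply_phase_mask(field, phases):
    """Each diffractive neuron multiplies the transmitted field by exp(j * phase)."""
    return [[f * cmath.exp(1j * p) for f, p in zip(f_row, p_row)]
            for f_row, p_row in zip(field, phases)]


def forward(input_field, phases, pitch_um, d1_um, d2_um):
    """Input plane -> (D1) -> phase mask -> (D2) -> intensity at the output plane."""
    at_mask = rs_propagate(input_field, pitch_um, d1_um)
    after_mask = apply_phase_mask(at_mask, phases)
    at_output = rs_propagate(after_mask, pitch_um, d2_um)
    return [[abs(v) ** 2 for v in row] for row in at_output]


def region_cross_entropy(intensity, regions, target_index):
    """Cross-entropy on the normalised energy in each detector region (assumed
    readout). regions: list of (row_start, row_stop, col_start, col_stop)."""
    energies = [sum(intensity[r][c] for r in range(r0, r1) for c in range(c0, c1))
                for (r0, r1, c0, c1) in regions]
    total = sum(energies)
    probs = [e / total for e in energies]
    return -math.log(probs[target_index] + 1e-12), probs


# Toy usage: 8 x 8 planes, an untrained (all-zero) mask, two detector regions.
N = 8
input_field = [[(1 + 0j) if 2 <= r < 6 and 2 <= c < 6 else 0j for c in range(N)]
               for r in range(N)]
phases = [[0.0] * N for _ in range(N)]
intensity = forward(input_field, phases, pitch_um=0.419, d1_um=5.0, d2_um=5.0)
regions = [(0, N, 0, N // 2), (0, N, N // 2, N)]  # left half, right half
loss, probs = region_cross_entropy(intensity, regions, target_index=0)
```

In training, the entries of `phases` would be the learnable parameters and the loss would be minimised by gradient descent over many input images; an FFT-based propagator would replace the direct summation at realistic neuron counts.
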

Fabrication (GD-TPN nanoprinting): Learned phase profiles are converted to relative height maps and 3D printed using galvo-dithered two-photon nanolithography in a hybrid zinc oxide photoresist. GD-TPN provides lateral control for neuron diameters in the 200–1000 nm range and axial control with ≈10 nm precision via galvo-dithering correction, an acousto-optic modulator, and a piezoelectric nanotranslation stage. For the reported devices, optimal neuron diameters are 413 nm (3-MLD) and 419 nm (9-MLD), yielding >500 million neurons/cm²; maximum achievable density with this platform is ~2.5 billion neurons/cm². AFM confirms printed pixel diameters and height modulations (≈1.48 µm for 3-MLD; ≈1.78 µm for 9-MLD) match the designs.

Optical characterisation: Inputs are generated by a spatial light modulator illuminated by a 785 nm laser and relayed via two 4f systems to the MLD input plane. The MLD output plane is imaged by a lens system onto a CCD camera. Experimental classification accuracy is quantified by comparing measured outputs to numerical predictions for multiple test images per class. Diffraction efficiencies and noise tolerance are evaluated by adding controlled normalised noise to camera readouts and comparing with simulations.
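Two pieces of arithmetic sit behind the fabrication figures: converting a learned phase delay to a printed height, and converting a neuron diameter to an areal density. The sketch below works through both under stated assumptions. The thin-element relation phase = 2π(n − 1)h/λ is the standard one for a transmissive phase element, but the refractive index used here (1.5) is a placeholder, since the summary does not give the index of the photoresist; the density calculation assumes a square lattice whose pitch equals the neuron diameter. All function names are hypothetical.

```python
import math

WAVELENGTH_NM = 785.0    # design wavelength stated in the text
N_RESIST_ASSUMED = 1.5   # placeholder index; not given in the summary


def phase_to_height_nm(phase_rad, n_resist, n_ambient=1.0,
                       wavelength_nm=WAVELENGTH_NM):
    """Relative height that produces phase_rad in transmission, from
    phase = 2 * pi * (n_resist - n_ambient) * height / wavelength."""
    wrapped = phase_rad % (2.0 * math.pi)  # keep the phase in [0, 2 pi)
    return wrapped * wavelength_nm / (2.0 * math.pi * (n_resist - n_ambient))


def quantise_nm(height_nm, step_nm=10.0):
    """Round a height to a multiple of the axial step (about 10 nm in the text)."""
    return round(height_nm / step_nm) * step_nm


def neurons_per_cm2(pitch_nm):
    """Areal density of a square lattice with one neuron per pitch x pitch cell."""
    pitch_cm = pitch_nm * 1e-7  # 1 nm = 1e-7 cm
    return 1.0 / (pitch_cm * pitch_cm)


half_wave_height = phase_to_height_nm(math.pi, N_RESIST_ASSUMED)
density_3mld = neurons_per_cm2(413.0)  # about 5.9e8 per cm^2
density_9mld = neurons_per_cm2(419.0)  # about 5.7e8 per cm^2
density_max = neurons_per_cm2(200.0)   # about 2.5e9 per cm^2
```

Under the pitch-equals-diameter assumption, the 413 nm and 419 nm neurons give roughly 5.9×10⁸ and 5.7×10⁸ neurons/cm², consistent with the ">500 million" figure, and the 200 nm lower bound of the lateral range gives the quoted ~2.5 billion neurons/cm².
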

- Fabricated passive, single-layer (and compact two-layer) holographic perceptrons that perform optical inference (symmetric and asymmetric decryption) at λ=785 nm, directly mappable to CMOS sensors.

- Achieved neuron densities above 500 million neurons/cm² (printed pixel diameters of 413–419 nm) with an axial nanostepping precision of ≈10 nm. The platform supports up to ~2.5 billion neurons/cm².

- Compactness: Working distance as small as 62.8 µm enables on-chip integration; example design distances used include D1≈70.7 µm and D2≈31.4 µm for the compact two-layer study.

- Symmetric decryption: MLD-T and MLD-B experimentally achieve 100% accuracy over tested inputs, matching numerical expectations, demonstrating reliable key recognition and secure display functionality.

- Asymmetric decryption: 3-MLD and 9-MLD correctly route inputs to class-specific output regions. Experimental classifications agree with the numerical predictions for 86.67% of tested inputs with 3-MLD and 80% with 9-MLD; the 9-MLD output appears grainier, consistent with sensitivity to noise and design constraints.

- Fabrication fidelity: AFM confirms intended pixel sizes (≈413–419 nm) and height modulations (≈1.48–1.78 µm), validating the GD-TPN process and accurate phase control.

- Design insights: Increasing neuron count/density and optimising axial separations significantly affect accuracy. A compact two-layer configuration can improve accuracy over a single layer, though adjoining layers may reduce to an effective single linear transform.
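The remark in the last finding, that adjoining layers may reduce to an effective single linear transform, follows from the fact that every step before detection (propagation, phase mask) is a linear operation on the complex field. The sketch below illustrates this on a toy 1D system with four neurons per plane. The "propagators" are arbitrary chirp matrices used as hypothetical stand-ins for real diffraction matrices, and all names and numbers are illustrative only.

```python
import cmath


def matmul(a, b):
    """Product of two complex matrices stored as nested lists."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def matvec(a, v):
    """Product of a complex matrix and a vector."""
    return [sum(a[i][k] * v[k] for k in range(len(v))) for i in range(len(a))]


def phase_mask(phases):
    """Diagonal matrix of a phase-only layer: entry i is exp(j * phases[i])."""
    n = len(phases)
    return [[cmath.exp(1j * phases[i]) if i == j else 0j for j in range(n)]
            for i in range(n)]


def toy_propagator(n, chirp):
    """Hypothetical stand-in for a diffraction matrix between two planes."""
    return [[cmath.exp(1j * chirp * (i - j) ** 2) / n for j in range(n)]
            for i in range(n)]


n = 4
p_in, p_gap, p_out = toy_propagator(n, 0.3), toy_propagator(n, 0.05), toy_propagator(n, 0.2)
phases_1 = [0.1, 1.2, 2.3, 0.7]
phases_2 = [2.0, 0.4, 1.1, 3.0]
m1, m2 = phase_mask(phases_1), phase_mask(phases_2)
x = [1 + 0j, 0.5j, -0.25 + 0j, 0j]

# Layer by layer: input -> mask 1 -> small gap -> mask 2 -> output.
y_layers = matvec(p_out, matvec(m2, matvec(p_gap, matvec(m1, matvec(p_in, x)))))

# The same cascade collapsed into one matrix applied once.
t_single = matmul(p_out, matmul(m2, matmul(p_gap, matmul(m1, p_in))))
y_single = matvec(t_single, x)
cascade_error = max(abs(a - b) for a, b in zip(y_layers, y_single))

# With zero gap, two phase masks are exactly one mask with summed phases.
stacked = matmul(m2, m1)
merged = phase_mask([a + b for a, b in zip(phases_1, phases_2)])
merge_error = max(abs(stacked[i][j] - merged[i][j]) for i in range(n) for j in range(n))
```

Both errors come out at floating-point rounding level. The collapsed matrix is still a single linear map, but with a non-zero gap it is generally not expressible as one phase-only mask between the same two propagators, which is one possible reading of why a compact two-layer design can still differ from a single layer.
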

The work demonstrates that learned diffractive elements fabricated via GD-TPN can implement linear optical perceptrons capable of real-time, unpowered inference at the speed of light. By encoding learned phase biases into a high-density single diffractive layer, the devices can selectively decrypt either a single key (symmetric) or entire classes of keys (asymmetric), directly producing interpretable output patterns on a sensor. This addresses limitations of traditional phase-mask cryptosystems by enabling flexible authentication within a compact, single-pass element that is compatible with CMOS integration. The high neuron density and precise axial phase control are pivotal for NIR operation and compactness, translating to improved mapping capability and reduced working distances suitable for on-chip systems. Experimental results, including perfect symmetric-decryption performance and strong asymmetric-decryption accuracy (with quantified noise effects), validate the trained designs and the fabrication method. The approach is broadly applicable to other optical inference tasks (e.g., sensing, diagnostics), where linear transformations can provide powerful feature routing and classification when paired with appropriate encoding and detection strategies.

This study introduces nanoscale, GD-TPN-fabricated holographic perceptrons that perform optical inference and decryption at NIR wavelengths with exceptionally high neuron density and direct CMOS compatibility. The devices implement both symmetric and asymmetric decryption tasks with strong experimental performance, including 100% symmetric-decryption accuracy and robust class routing for multi-class cases, within compact working distances. The combination of machine-learned diffractive phase profiles, high-fidelity nanoprinting, and simple optical readout presents a scalable path toward low-power, ultrafast optical inference engines. Future work could explore: (i) expanded multilayer compact designs or hybrid separated–compact architectures to enhance capacity while maintaining small footprints; (ii) improved robustness to noise and aberrations through training with physical constraints and experimental priors; (iii) extension to broader wavelength bands and materials; (iv) tighter CMOS co-integration and arrays for parallel tasks; and (v) incorporation of nonlinearity (materials or detection schemes) to go beyond linear separability and further boost performance.

- Linear, shallow architectures (single-layer or compact adjoining layers) inherently limit learnable functions to linear separability; adjoining layers may collapse to an effective single linear transform, constraining capacity compared to well-separated multilayers.

- Accuracy on tasks with more classes (e.g., 9-MLD at 80%) is sensitive to noise and design parameters; the grainier output indicates susceptibility to experimental noise and misalignment.

- Performance depends critically on neuron density, pixel size, and axial distances (D1, D2); suboptimal choices degrade accuracy and efficiency.

- Devices are designed for a fixed wavelength (785 nm) matched to the photoresist; spectral bandwidth and off-design performance are not characterised here.

- While working distances are compact, full system-level integration considerations (packaging tolerances, thermal and environmental stability) are not detailed.
