Interdisciplinary Studies

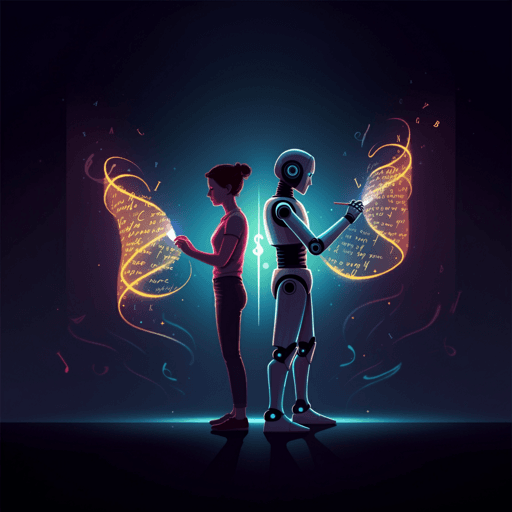

Establishing the importance of co-creation and self-efficacy in creative collaboration with artificial intelligence

J. McGuire, D. De Cremer, T. Van de Cruys

Across two experiments with advanced human–AI interfaces, the authors found that people were more creative when writing poetry on their own than when editing AI‑generated drafts. This deficit vanished when people co‑created with AI, and creative self‑efficacy was identified as a key mechanism. This research was conducted by Jack McGuire, David De Cremer, and Tim Van de Cruys.
