Engineering and Technology

End-to-end differentiability and tensor processing unit computing to accelerate materials’ inverse design

H. Liu, Y. Liu, et al.

This study by Han Liu and colleagues introduces a computational inverse design framework that addresses a key obstacle of conventional numerical simulations, their lack of differentiability, by implementing a differentiable simulator in TensorFlow. Running it on tensor processing units (TPUs) speeds up the design of porous materials whose sorption isotherms match target curves.


Introduction

The study tackles the inverse design problem in materials science: finding a material configuration that yields a desired property (here, a targeted sorption isotherm) within a vast design space. Traditional simulations (e.g., DFT, MD) are powerful forward predictors but are limited for inverse design due to high computational cost and lack of differentiability, which hinders use of gradient-based optimization. Common workarounds train differentiable surrogate predictors and pair them with generators; however, co-training generator and predictor is difficult and extrapolation beyond the training set is limited by predictor accuracy. The research question is whether one can eliminate the surrogate by directly coupling a differentiable physics-based simulator with a generator and optimize the generator end-to-end via backpropagation. The work proposes and demonstrates such a pipeline using a differentiable lattice density functional theory (LDFT) sorption simulator implemented in TensorFlow and accelerated on TPUs, enabling direct training of a generator to produce porous matrices whose adsorption isotherms match arbitrary target curves.

Literature Review

Prior work in computational materials leverages DFT and MD for forward prediction and high-throughput screening. Inverse design approaches often rely on machine learning surrogates combined with generative models (autoencoders, GANs, inverse design networks) trained on datasets, optimized via backpropagation. Limitations include the need to train both generator and predictor, and constrained extrapolation due to surrogate inaccuracies. Recent advances in automatic differentiation and differentiable programming (TensorFlow, JAX, Taichi) have enabled differentiable simulations in domains like molecular dynamics and robotics, but applications to materials simulations remain underexplored. This study builds on these advances by reformulating an LDFT sorption simulator as a differentiable computation graph and coupling it directly to a generator, removing the surrogate predictor bottleneck.

Methodology

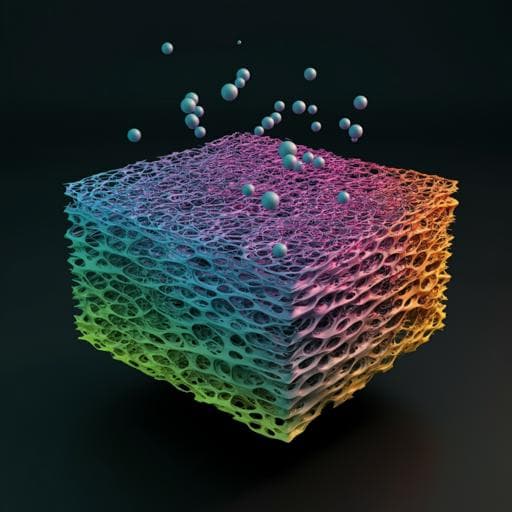

- Problem and model: Inverse design of porous matrices to match target water sorption isotherms (average pore water density versus RH). Porous media are represented on square 2D N×N or cubic 3D N×N×N lattices with binary solid/pore sites (η=0 solid, η=1 pore). Water density in pores p_i ∈ [0,1] is computed via lattice density functional theory (LDFT) at each relative humidity (RH). Periodic boundary conditions are used.

- LDFT formulation: At fixed RH, the equilibrium densities {p_i} minimize the grand potential Ω({p}). The stationarity conditions yield an iterative update (Eq. 1) in which p_i depends on the densities p_j of its nearest neighbors and on the solid–fluid and fluid–fluid interaction energies (w_mf, w_ff), with chemical potential μ=μ_sat+k_B T ln(RH). Parameters: T=298 K; coordination number c=4 (2D) or 6 (3D); w_ff≈4k_B T_c/c with T_c=647 K; interaction ratio y=w_mf/w_ff=1.5 (hydrophilic). Convergence criterion: average absolute change <1e−10 per iteration. RH is swept from 0% to 100% in increments of dRH=2.5% (40 steps); the converged {p_i} at step K initializes step K+1. When hysteresis is needed, the desorption branch is computed by sweeping the RH points in reverse order.

- Differentiable simulator: Eq. (1) is decomposed into TensorFlow layers: input layers for {η}, {p}, and {1−η}; convolution (CONV) layers that compute the neighbor sums Σ_j W_ij p_j and Σ_j W_ij (1−η_j); and an output layer that applies the remaining elementwise transformation to yield the updated p_i. For each RH, this three-layer block is repeated M times (M convolutional iterations) to mimic the iterative fixed-point updates. M is a hyperparameter: accuracy improves with M, and M=100 yields near-reference accuracy. All operations are differentiable, enabling end-to-end backpropagation through all RH steps and iteration layers.

- Generator–simulator pipeline: Input is a target adsorption isotherm P_w of length 40 (or a hysteresis curve of length 80). The generator comprises two parallel deconvolution branches that capture low-RH and high-RH features, respectively; each branch receives one half of the input signal. Each branch: (i) Dense layer (2D: 20×20×64=25,600 neurons; 3D: 10×10×10×8=8,000 neurons), (ii) Reshape (2D: 20×20×64; 3D: 10×10×10×8), (iii) Deconvolution (2D: 64 channels, 20×20 filter; 3D: 8 channels, 10×10×10 filter), (iv) Convolution (single-channel 3×3 filter for 2D or 3D). Activations are ReLU with batch normalization after each layer. The two branch outputs are concatenated and passed to a final convolutional output layer with a binary sigmoid activation to produce a porous matrix prediction η_ij (2D 20×20) or η_ijk (3D 10×10×10). The predicted matrix is input to the differentiable simulator (with fixed, hard-coded physical convolution weights) to compute the forward adsorption (and desorption if applicable) curves.

- Training objective and optimization: Loss L is the percentage area between target and simulated curves (adsorption only, or adsorption+desorption for hysteresis). A regularization term encourages phase continuity by penalizing solid–pore neighbors: the sum of (η_i−η_j)^2 over nearest-neighbor pairs, normalized by 4N^2 (2D) or 6N^3 (3D). Optimizer: SGD with momentum 0.9; the initial learning rate of 1e−2 is multiplied by 0.1 whenever the loss fails to improve for 10 epochs (patience = 10). Batch sizes: 64 for GPU training and 256 for TPU training. Training runs 100 epochs with 1,000 batches per epoch.

- Data: Training set of 6,400,000 target curves generated procedurally to be monotonic and diverse in trend/convexity (20% stepwise; 80% anchor-based). Hysteresis targets are similarly constructed with constraints to ensure physical ordering. Test/validation set consists of 8,769 real sorption curves generated by the conventional LDFT simulator on diverse random grids produced via particle swarm optimization.

- Hardware benchmarking: Implemented in TensorFlow for execution on TPU-v3-8 (8 cores, 16 GB/core) and NVIDIA A100 GPU (40 GB). Benchmarked training time per batch versus grid size N and batch size, for 2D (N up to 120+) and 3D (N up to 30 TPU, 20 GPU). Out-of-memory boundaries mapped in (N, batch size) space for 2D and 3D.

- 3D generalization: 2D convolution with 4 neighbors replaced by 3D convolution with 6 neighbors. Differentiable 3D simulator validated against conventional 3D LDFT. Generator adapted to output 10×10×10 grids; training protocol mirrored the 2D case.
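The summary does not reproduce Eq. (1) from the "LDFT formulation" bullet. The pure-Python sketch below assumes the standard mean-field lattice-gas form of LDFT, p_i = η_i / (1 + exp(−[μ + w_ff Σ_j p_j + w_mf Σ_j (1−η_j)] / k_B T)), with μ_sat = −c w_ff/2 (bulk coexistence of the mean-field lattice gas). This is consistent with the listed parameters but may differ in detail from the paper. The helper names are hypothetical, and the sweep starts at RH = 2.5% to avoid ln(0).

```python
import math

C = 4                              # coordination number, square lattice (2D)
T, T_C = 298.0, 647.0              # temperature and critical temperature of water (K)
BETA_WFF = 4.0 * T_C / (C * T)     # w_ff / (k_B T), from w_ff = 4 k_B T_c / c
Y = 1.5                            # y = w_mf / w_ff (hydrophilic matrix)


def neighbor_sum(field):
    """Sum over the 4 nearest neighbours of each site (periodic boundaries)."""
    n = len(field)
    return [[field[(i - 1) % n][j] + field[(i + 1) % n][j]
             + field[i][(j - 1) % n] + field[i][(j + 1) % n]
             for j in range(n)] for i in range(n)]


def relax(eta, p, rh, tol=1e-10, max_iter=10_000):
    """Simultaneous (Jacobi) fixed-point iteration of the assumed update at one RH."""
    n = len(eta)
    beta_mu = -C * BETA_WFF / 2.0 + math.log(rh)             # mu = mu_sat + k_B T ln(RH)
    solid = neighbor_sum([[1 - e for e in row] for row in eta])  # fixed for a given matrix
    for _ in range(max_iter):
        nb = neighbor_sum(p)
        new = [[eta[i][j] / (1.0 + math.exp(-(beta_mu + BETA_WFF * nb[i][j]
                                               + Y * BETA_WFF * solid[i][j])))
                for j in range(n)] for i in range(n)]
        change = sum(abs(new[i][j] - p[i][j])
                     for i in range(n) for j in range(n)) / (n * n)
        p = new
        if change < tol:                                      # average absolute change
            break
    return p


def adsorption_isotherm(eta, n_points=40, **kwargs):
    """Sweep RH upward (2.5%, 5%, ..., 100%), warm-starting from the previous RH."""
    n = len(eta)
    p = [[0.0] * n for _ in range(n)]
    n_pore = sum(map(sum, eta))
    curve = []
    for k in range(1, n_points + 1):
        p = relax(eta, p, k / n_points, **kwargs)
        curve.append(sum(map(sum, p)) / n_pore)               # mean density over pore sites
    return curve


if __name__ == "__main__":
    # Slit pore: one solid column (j = 0) in a 6 x 6 periodic lattice.
    eta = [[0 if j == 0 else 1 for j in range(6)] for _ in range(6)]
    iso = adsorption_isotherm(eta, max_iter=2000)
    print([round(v, 3) for v in iso[::8]])
```

Because every interaction in this update is attractive, the map is order-preserving. Warm-starting from the previous RH therefore traces a non-decreasing adsorption branch. Running the same loop over the RH points in reverse, starting from the saturated state, would give the desorption branch.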
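The "Differentiable simulator" bullet relies on unrolling. Each iteration is a fixed-weight convolution followed by an elementwise map, so an RH step is a composition of differentiable functions. The dependency-free sketch below illustrates this for a single RH value. It uses forward-mode dual numbers, a substitute for TensorFlow's reverse-mode autodiff chosen only to avoid external libraries. The update is the assumed mean-field form p_i = η_i / (1 + exp(−[μ + w_ff Σ_j p_j + w_mf Σ_j (1−η_j)] / k_B T)) with μ_sat = −c w_ff/2, since the summary does not reproduce Eq. (1). The names `Dual`, `conv_periodic`, and `unrolled_simulator` are hypothetical. η is treated as continuous, as it would be when it comes from the generator's sigmoid output.

```python
import math


class Dual:
    """A value with its derivative w.r.t. one seeded input (forward-mode AD)."""

    def __init__(self, val, der=0.0):
        self.val, self.der = float(val), float(der)

    @staticmethod
    def lift(x):
        return x if isinstance(x, Dual) else Dual(x)

    def __add__(self, o):
        o = Dual.lift(o)
        return Dual(self.val + o.val, self.der + o.der)

    __radd__ = __add__

    def __sub__(self, o):
        o = Dual.lift(o)
        return Dual(self.val - o.val, self.der - o.der)

    def __rsub__(self, o):
        return Dual.lift(o) - self

    def __mul__(self, o):
        o = Dual.lift(o)
        return Dual(self.val * o.val, self.der * o.val + self.val * o.der)

    __rmul__ = __mul__

    def __truediv__(self, o):
        o = Dual.lift(o)
        return Dual(self.val / o.val,
                    (self.der * o.val - self.val * o.der) / o.val ** 2)

    def __rtruediv__(self, o):
        return Dual.lift(o) / self

    def __neg__(self):
        return Dual(-self.val, -self.der)


def dexp(x):
    e = math.exp(x.val)
    return Dual(e, e * x.der)


# Fixed, hard-coded weights: the symmetric 4-neighbour stencil (2D, c = 4).
KERNEL = ((0, 1, 0),
          (1, 0, 1),
          (0, 1, 0))


def conv_periodic(field):
    """'CONV layer': 3x3 stencil applied with wrap-around (periodic) padding."""
    n = len(field)
    out = [[Dual(0.0) for _ in range(n)] for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for a in range(3):
                for b in range(3):
                    if KERNEL[a][b]:
                        out[i][j] = out[i][j] + KERNEL[a][b] * field[(i + a - 1) % n][(j + b - 1) % n]
    return out


def unrolled_simulator(eta, rh, m, beta_wff=647.0 / 298.0, y=1.5):
    """m unrolled CONV + elementwise blocks at one RH; returns the mean density."""
    n = len(eta)
    beta_mu = -2.0 * beta_wff + math.log(rh)           # mu_sat = -c w_ff / 2 with c = 4
    solid = conv_periodic([[1 - e for e in row] for row in eta])   # CONV on (1 - eta)
    p = [[Dual(0.0) for _ in range(n)] for _ in range(n)]
    for _ in range(m):
        nb = conv_periodic(p)                          # CONV on p
        p = [[eta[i][j] / (1 + dexp(-(beta_mu + beta_wff * nb[i][j]
                                       + y * beta_wff * solid[i][j])))
              for j in range(n)] for i in range(n)]
    total = Dual(0.0)
    for row in p:
        for v in row:
            total = total + v
    return total / (n * n)


eta = [[1.0] * 4 for _ in range(4)]
eta[0][0] = 0.0                      # one solid site
eta[2][1] = Dual(0.6, 1.0)           # seed d/d(eta_21) at a relaxed (continuous) site
res = unrolled_simulator(eta, rh=0.5, m=20)
print(res.val, res.der)
```

The derivative reported in `res.der` can be checked against a central finite difference. Reverse mode, as used in TensorFlow, yields gradients with respect to all η sites in one backward pass instead of one seeded input at a time.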
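As a quick consistency check on the layer sizes in the "Generator–simulator pipeline" bullet, the arithmetic below confirms that the Dense widths match the Reshape targets and estimates the Dense-layer parameter count. It assumes each Dense layer has a bias and that the 40-point isotherm is split evenly (20 values per branch); the paper may count parameters differently.

```python
def dense_params(n_in, n_out, bias=True):
    """Trainable parameters of a fully connected layer."""
    return n_in * n_out + (n_out if bias else 0)


TARGET_LEN = 40                    # adsorption isotherm: 40 RH points
BRANCH_IN = TARGET_LEN // 2        # each branch receives one half of the curve

# Dense widths quoted in the text; each equals the size of its Reshape target.
UNITS_2D = 20 * 20 * 64            # reshaped to 20 x 20 x 64
UNITS_3D = 10 * 10 * 10 * 8        # reshaped to 10 x 10 x 10 x 8

for label, units in (("2D", UNITS_2D), ("3D", UNITS_3D)):
    per_branch = dense_params(BRANCH_IN, units)
    print(f"{label}: {units} units, {per_branch} dense params per branch, "
          f"{2 * per_branch} for both branches")
```

Under these assumptions the two 2D Dense layers alone hold about 1.08 million parameters, which is why the 3D generator uses a narrower 8-channel reshape.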
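The "Training objective and optimization" bullet combines an area-based fit term with a continuity penalty. The sketch below assumes both RH and density span [0, 1], so that "percentage area" means a percentage of the unit square. It also assumes the curves are sampled at RH = 2.5%, 5%, ..., 100%, which makes a right-endpoint Riemann sum cover [0, 1] exactly. The regularization weight `lam` is hypothetical because the summary does not state it.

```python
def percentage_area(target, simulated, d_rh=0.025):
    """Area between two isotherms as a percentage of the unit RH-density square.

    Right-endpoint Riemann sum on |target - simulated| for curves sampled at
    RH = d_rh, 2*d_rh, ..., 1 (assumed normalization).
    """
    if len(target) != len(simulated):
        raise ValueError("curves must have the same length")
    return 100.0 * d_rh * sum(abs(t - s) for t, s in zip(target, simulated))


def continuity_penalty(eta):
    """Sum of (eta_i - eta_j)^2 over the 4 neighbours of every site, / (4 N^2)."""
    n = len(eta)
    total = 0.0
    for i in range(n):
        for j in range(n):
            for di, dj in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                total += (eta[i][j] - eta[(i + di) % n][(j + dj) % n]) ** 2
    return total / (4 * n * n)


def total_loss(target, simulated, eta, lam=1.0):
    """Hypothetical combination of the fit term and the continuity penalty."""
    return percentage_area(target, simulated) + lam * continuity_penalty(eta)


checker = [[(i + j) % 2 for j in range(4)] for i in range(4)]
print(continuity_penalty(checker))   # every neighbour pair differs
```

With this normalization the penalty ranges from 0 (a single phase) to 1 (a checkerboard, where every neighbor pair mixes solid and pore). For hysteresis targets, one plausible choice is to add the areas of the adsorption and desorption branches.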
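The "Data" bullet specifies only the mix (20% stepwise, 80% anchor-based) and the requirement that targets be monotonic with varied trend and convexity. The generator below is a hypothetical reconstruction under those constraints, not the authors' procedure. The plateau ranges, the number of anchors, and the use of piecewise-linear interpolation are all assumptions.

```python
import random


def stepwise_curve(rng, n_points=40):
    """Hypothetical 'stepwise' target: low plateau, one jump, high plateau."""
    low, high = rng.uniform(0.0, 0.3), rng.uniform(0.7, 1.0)
    k = rng.randrange(1, n_points)                      # index of the jump
    return [low if i < k else high for i in range(n_points)]


def anchor_curve(rng, n_points=40, n_anchors=4):
    """Hypothetical 'anchor-based' target: piecewise-linear through sorted anchors."""
    xs = [0] + sorted(rng.sample(range(1, n_points - 1), n_anchors)) + [n_points - 1]
    ys = sorted(rng.random() for _ in range(n_anchors + 2))   # sorted -> monotonic
    curve, seg = [], 0
    for i in range(n_points):
        while i > xs[seg + 1]:                          # advance to the segment holding i
            seg += 1
        t = (i - xs[seg]) / (xs[seg + 1] - xs[seg])
        curve.append(ys[seg] + t * (ys[seg + 1] - ys[seg]))
    return curve


def make_target(rng, p_step=0.2):
    """20% stepwise, 80% anchor-based, mirroring the proportions in the text."""
    return stepwise_curve(rng) if rng.random() < p_step else anchor_curve(rng)


rng = random.Random(0)
batch = [make_target(rng) for _ in range(64)]
print(len(batch), len(batch[0]))
```

Random anchor positions produce segments of different slopes, so a single generator yields curves that are convex, concave, or S-shaped. Monotonicity is guaranteed by sorting the anchor values.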
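A back-of-the-envelope count shows why memory bounds the accessible (N, batch size) region mapped in the "Hardware benchmarking" bullet. With M = 100 iterations and 40 RH steps, each sample passes through 4,000 unrolled convolution blocks, and backpropagation must retain activations from all of them. The helper below gives an assumption-laden lower bound, not a measurement. It counts a single float32 lattice tensor per iteration and ignores the generator, optimizer state, and framework overhead.

```python
def unrolled_layers(m=100, n_rh=40):
    """Convolutional iterations traversed per sample (adsorption branch only)."""
    return m * n_rh


def activation_bytes(n, dim, batch, m=100, n_rh=40, bytes_per_value=4):
    """Rough lower bound on activations stored for backpropagation.

    Counts one float32 lattice tensor (n**dim values) per unrolled iteration per
    sample; real frameworks keep several intermediates per layer.
    """
    return n ** dim * unrolled_layers(m, n_rh) * batch * bytes_per_value


for n, dim, batch in ((20, 2, 256), (120, 2, 64), (10, 3, 256), (30, 3, 8)):
    gb = activation_bytes(n, dim, batch) / 1e9
    print(f"N={n:>3} {dim}D batch={batch:>3}: >= {gb:.2f} GB")
```

The cost scales as N^d × M × (number of RH points) × batch size. Cubic growth in 3D therefore reaches device limits at much smaller N than in 2D, and hysteresis targets (80 RH points) double the count.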
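The "3D generalization" bullet amounts to swapping the 4-neighbor stencil for a 6-neighbor one. A dimension-agnostic sketch in plain Python makes the change explicit. The flat row-major storage and the helper name are illustrative choices, not the paper's code. The same loop over axes gives c = 4 in 2D and c = 6 in 3D, matching the 4N^2 and 6N^3 normalizations of the continuity penalty (the number of ordered site-neighbor pairs).

```python
from itertools import product


def neighbor_sum_nd(values, n, dim):
    """Sum of the 2*dim nearest neighbours on a periodic n**dim lattice.

    `values` is a flat row-major list; dim=2 gives c=4 and dim=3 gives c=6,
    i.e. the cross-shaped 3x3 (2D) or 3x3x3 (3D) stencil with wrap-around.
    """
    strides = [n ** (dim - 1 - axis) for axis in range(dim)]

    def flat(idx):
        return sum(i * s for i, s in zip(idx, strides))

    out = [0.0] * (n ** dim)
    for idx in product(range(n), repeat=dim):
        total = 0.0
        for axis in range(dim):
            for step in (-1, 1):
                nb = list(idx)
                nb[axis] = (nb[axis] + step) % n          # periodic boundary
                total += values[flat(nb)]
        out[flat(idx)] = total
    return out


n = 4
print(set(neighbor_sum_nd([1.0] * n**2, n, 2)), set(neighbor_sum_nd([1.0] * n**3, n, 3)))
# {4.0} {6.0}
```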

Key Findings

- Differentiable simulator accuracy: On a validation set of 8,769 porous matrices, increasing the number of convolution iterations M improves accuracy; at M=100, the average percentage loss <L> is approximately 0.36% across the full range of sinuosity indices, matching the conventional (non-differentiable) simulator.

- End-to-end generator performance (2D): The generator was trained on 6.4 million procedurally generated targets and evaluated on 8,769 real curves. Test loss decreases with epochs and plateaus after ~50 epochs; after 100 epochs, the average test loss is about 3.30%. Generated porous matrices display realistic pore size distributions consistent with the target saturation behavior at low and high RH.

- TPU acceleration (2D): On TPU-v3-8, training can be more than 4× faster than on an A100 GPU when both the batch size and the grid size are large (e.g., N=20 with large batches). At batch size 64, no TPU advantage is observed. The TPU also has smaller out-of-memory regions than the GPU, so a larger range of (grid size, batch size) configurations is accessible.

- 2D to 3D translation: Mapping 2D designs to equivalent 3D representations yields an average translation loss of ~14.8% across sinuosity indices, indicating similar trends but nontrivial differences between 2D and 3D sorption behavior.

- Differentiable 3D simulator: With M=100, the differentiable 3D simulator closely matches the conventional 3D LDFT, with an average loss of ~0.88% on validation.

- 3D generator performance: For N=10 3D grids, average test loss after 100 epochs is ~2.93%, comparable to the 2D model.

- TPU acceleration (3D): For N=10, batch size 256, TPU offers modest speedup (~1.1× faster than A100). Maximum accessible grid sizes in 3D due to memory: up to N≈30 on TPU and N≈20 on GPU.

- Hysteresis inverse design: Extending the pipeline to full adsorption–desorption hysteresis targets yields an average test loss of ~4.7%. The results also reflect a physical constraint: because hysteresis arises from metastability, the shape of a hysteresis loop cannot be chosen arbitrarily.

Discussion

The results demonstrate that reformulating a physics-based sorption simulator (LDFT) as a differentiable TensorFlow model enables direct, end-to-end training of a generative model for inverse design without relying on a learned surrogate predictor. This addresses core challenges of inverse design pipelines: the computational cost and non-differentiability of traditional simulators and the extrapolation limits of surrogates. The differentiable simulator achieves accuracy on par with a conventional reference while enabling gradient backpropagation through thousands of layers and across RH steps. The generator trained solely against the differentiable simulator attains low error on thousands of real target curves, generalizes to 3D, and handles complex targets like hysteresis. Leveraging TPUs further reduces training time and expands memory headroom, highlighting their promise for accelerating scientific simulations beyond standard deep learning tasks. Collectively, these findings validate an effective strategy for integrating differentiable physics into inverse materials design, potentially improving discovery efficiency and enabling exploration beyond the confines of dataset-driven surrogates.

Conclusion

This work introduces an end-to-end differentiable inverse design framework that couples a hard-coded physics-informed LDFT sorption simulator with a deep generator in TensorFlow and accelerates training using TPUs. The differentiable simulator reproduces conventional results with negligible loss, enabling gradient-based optimization of a generator that produces porous matrices matching a wide range of target sorption isotherms, including hysteresis loops, in both 2D and 3D. Benchmarks show substantial TPU speedups at large batch sizes, along with improved memory scalability. The main contributions are: (i) a differentiable reformulation of LDFT sorption on lattice grids, (ii) seamless generator–simulator integration avoiding surrogates, (iii) demonstration of inverse design accuracy on large test sets, (iv) generalization to 3D and hysteresis, and (v) evidence that TPUs can accelerate scientific simulations. Future directions include scaling to larger and more realistic 3D systems, incorporating additional physics (e.g., multicomponent adsorption, temperature dependence), extending to other materials properties and simulators, exploring multi-objective design, and leveraging next-generation accelerators (e.g., newer TPU/GPU architectures) to expand accessible design spaces.

Limitations

- Model abstraction: The 2D lattice model is a simplified proxy for real 3D porous materials; while correlated, translation from 2D to 3D incurs a nontrivial average loss (~14.8%).

- Computational cost and memory: The pipeline is deep (e.g., ~4,000 convolution layers per sample for 2D) and training is resource-intensive. 3D models substantially increase cost and can hit memory limits; TPU and GPU have different out-of-memory boundaries.

- Hardware dependence: TPU speedups rely on large batch sizes and matrix-friendly workloads; at smaller batches (e.g., 64), gains diminish compared to highly parallel GPUs.

- Physics scope: The LDFT formulation uses simplified interactions and parameters (e.g., fixed y=w_mf/w_ff, isothermal conditions). Real materials may require richer physics (heterogeneity, multiscale effects, multicomponent fluids).

- Design constraints: Hysteresis is constrained by metastability; not all target hysteresis curves are physically realizable, limiting arbitrary inverse design of such behaviors.

- Generalizability: Although trained against the simulator (not a surrogate), performance depends on simulator fidelity; systematic simulator biases would propagate to designs.
