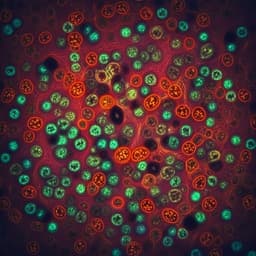

Deep learning-based virtual staining, segmentation, and classification in label-free photoacoustic histology of human specimens

C. Yoon, E. Park, et al.

This study presents a deep learning framework for automated virtual staining, segmentation, and classification in label-free photoacoustic histology of human specimens. Chiho Yoon, Eunwoo Park, Sampa Misra, Jin Young Kim, Jin Woo Baik, Kwang Gi Kim, Chan Kwon Jung, and Chulhong Kim report high accuracy in classifying liver cancers, which points to new potential for digital pathology.