Medicine and Health

Deep learning-based virtual H&E staining from label-free autofluorescence lifetime images

Q. Wang, A. R. Akram, et al.

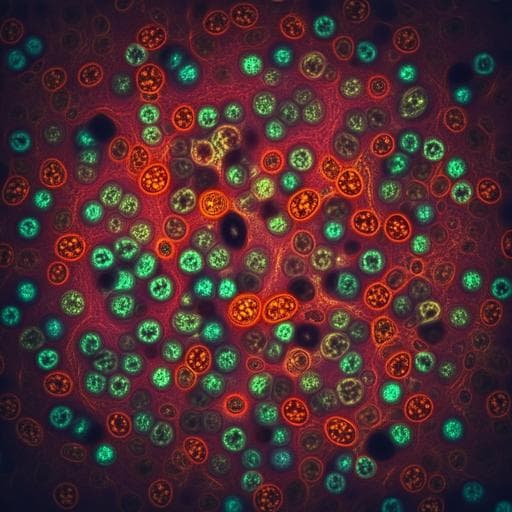

Fluorescence lifetime is the average time a fluorophore spends in the excited state before returning to the ground state. It is independent of fluorescence intensity but sensitive to the molecular microenvironment. Fluorescence lifetime imaging microscopy (FLIM) of endogenous fluorophores therefore provides insight into metabolic state, pathology, and microenvironmental factors (e.g., pH, ion concentration, molecular interactions). Conventional FLIM analysis relies on statistical methods (e.g., lifetime histograms, phasor analysis) and often requires a reference such as histology for interpretation. These approaches report averaged lifetimes of the dominant components rather than cellular-level information, and co-registering FLIM with histology can be difficult because tissue preparation alters the sample. Reference histology is also often unavailable alongside FLIM, which limits immediate interpretation. Advances in deep learning have enabled virtual histological staining from various label-free modalities; U-Net-based GANs such as pix2pix have successfully translated autofluorescence and other label-free images into H&E and other stains. Building on this, the study generates virtual H&E staining directly from label-free FLIM images using a supervised pix2pix GAN augmented with the Deep Image Structure and Texture Similarity (DISTS) loss. The goals are to enable rapid, accurate cellular-level interpretation of FLIM, to compare input representations (intensity-only vs. lifetime-inclusive formats), and to evaluate the approach across multiple cancer types (lung, colorectal, endometrial).

Prior work has demonstrated deep learning-enabled virtual histological staining from label-free images. Rivenson et al. translated autofluorescence intensity images into multiple stains using supervised GANs with total variation regularization. Li et al. used pix2pix to synthesize H&E and other stains from bright-field images of unstained carotid artery tissue. Borhani et al. used a custom model to generate virtual H&E from multimodal two-photon fluorescence data (16-channel spectral intensity plus 1-channel lifetime). CycleGAN has been used to map ultraviolet photoacoustic microscopy images to virtual H&E. Other efforts include translating H&E to virtual IHC and IHC to virtual multiplex immunofluorescence. By comparison, the present study acquires FLIM with data-driven optimal excitation/emission settings, explicitly incorporates lifetime information, and trains a supervised pix2pix model with DISTS loss for improved structure and texture fidelity.

Data and imaging: Surgically resected early-stage non-small cell lung carcinoma samples were used to construct tissue microarrays (TMAs); primary colorectal and endometrial cancers and FFPE lung biopsies were also included. FLIM was performed on a Leica STELLARIS 8 FALCON confocal FLIM microscope with a 20x/0.75 NA objective. Optimal excitation and emission settings were determined with a λ-to-λ spectral scan: excitation at 485 nm, emission collected over 500–720 nm. Images were 512×512 pixels, with pixel sizes varying by dataset. After FLIM, the same samples were stained with H&E and digitized on a ZEISS Axio Scan.Z1 (20x/0.75 NA) at a pixel size of 0.22 µm.

Datasets: 84 lung cancer samples (69 TMA cores at 0.455 µm, 5 biopsies at 0.4 µm, and 10 TMA cores from a separate cohort at 0.25 µm), 4 colorectal cancer slides (0.4 µm), and 4 endometrial cancer sections (0.4 µm). For lung, 72 samples (70 TMA cores, 2 biopsies) were used for training and 15 independent sections (12 TMA cores, 3 biopsies) for testing. Colorectal and endometrial models were obtained by transfer learning on 2 sections each and evaluated on 2 separate sections.

Preprocessing and registration: Lifetimes were estimated by exponential fitting of photon-arrival-time histograms in Leica LAS X and exported as separate intensity and lifetime images. Raw intensity was clipped to [0, 2000] and lifetime to [0.0, 5.0] ns. Tiles from each TMA core were stitched with MIST using the intensity channel only. Intensity images were color-inverted so that the background appears white. H&E images were converted to grayscale and contrast-enhanced (1% saturation at the low and high bounds). FLIM intensity and processed H&E images were co-registered with an affine transformation in MATLAB; elastic registration was found unnecessary, as affine registration alone gave comparable results. H&E images were downsampled by bicubic interpolation to match the FLIM pixel size (e.g., from 0.22 µm to ~0.455 µm).

Patch extraction: Co-registered pairs were cut into 256×256-pixel patches, and patches with more than 75% background were discarded.
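
The clipping, inversion, and patch-filtering steps above can be sketched in plain Python. This is a minimal illustration, not the study's code: the helper names are hypothetical, patches are assumed to be non-overlapping, and a pixel is assumed to count as background when its inverted intensity is at or above a chosen threshold (the study's background criterion is not specified).

```python
# Hypothetical helpers sketching the FLIM preprocessing and patch filtering;
# images are plain 2D lists of numbers.


def clip(img, lo, hi):
    """Clip every pixel to [lo, hi] (e.g. intensity [0, 2000], lifetime [0.0, 5.0] ns)."""
    return [[min(max(v, lo), hi) for v in row] for row in img]


def invert(img, max_value):
    """Color-invert a clipped intensity image so the dark background becomes bright (white)."""
    return [[max_value - v for v in row] for row in img]


def extract_patches(img, size, bg_threshold, max_bg_fraction=0.75):
    """Cut img into non-overlapping size x size patches and keep those whose
    background fraction is at most max_bg_fraction.

    ASSUMPTION: a pixel is background if its (inverted) value is >= bg_threshold.
    Returns ((row, col), patch) pairs so the co-registered H&E can be cropped
    at the same offsets.
    """
    kept = []
    for r in range(0, len(img) - size + 1, size):
        for c in range(0, len(img[0]) - size + 1, size):
            patch = [row[c:c + size] for row in img[r:r + size]]
            n_bg = sum(1 for row in patch for v in row if v >= bg_threshold)
            if n_bg / (size * size) <= max_bg_fraction:
                kept.append(((r, c), patch))
    return kept


if __name__ == "__main__":
    raw = [[0, 0, 2500, 1800],
           [0, 0, 1500, 3000],
           [0, 0, 0, 0],
           [0, 0, 0, 100]]
    inverted = invert(clip(raw, 0, 2000), 2000)
    for offset, patch in extract_patches(inverted, size=2, bg_threshold=1900):
        print(offset, patch)  # (0, 2) [[0, 200], [500, 0]]
```

Returning the patch offsets lets the same windows be cropped from the co-registered H&E image, so each kept FLIM patch stays paired with its target.
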

Input representations: Intensity images were globally normalized per TMA core before being combined with the lifetime images. Three inputs were compared: (1) greyscale autofluorescence intensity images; (2) false-color lifetime RGB images with the normalized intensity appended as an alpha channel (α-FLIM; 4 channels); and (3) intensity-weighted false-color lifetime images (IW-FLIM), in which the normalized intensity acts as a soft weight multiplied pixel-wise into each channel of the false-color lifetime RGB. False-color mapping used a fixed lifetime range of [1.0, 5.0] ns. Although α-FLIM and IW-FLIM look visually identical, they differ in channel composition.

Model: A conditional GAN (pix2pix) with a U-Net-like generator and a multi-layer conditional classifier as the discriminator. The adversarial loss L(G,D) and the L1 reconstruction loss L(G) are combined as L = α L(G,D) + β L(G). A DISTS loss is added to capture structure and texture similarity: it compares VGG feature maps and combines texture (T) and structure (S) terms with learnable weights η and θ. The final objective is L_total = α L(G,D) + β L(G) + λ L_DISTS, with α = 0.1, β = 1, and λ = 5.

Training: Implemented in PyTorch with the Adam optimizer (β1 = 0.5, β2 = 0.999). The learning rate started at 1e-4 and was multiplied by 0.1 every 60 epochs, for 300 epochs in total. Data augmentation used horizontal flips and ±15° rotations. Training ran on NVIDIA V100 GPUs (EPSRC Tier-2 Cirrus). At inference, the models accept inputs of variable size, not only 256×256 patches.

Evaluation: Virtual H&E was synthesized from FLIM for lung, colorectal, and endometrial cancers and FFPE lung biopsies and assessed qualitatively. Three experienced pathologists performed a blinded evaluation of 12 lung TMA cores, scoring four criteria (nuclear detail via hematoxylin, cytoplasmic detail via eosin, overall staining quality, and diagnostic confidence) on a 1–4 scale. Virtual and true H&E were compared quantitatively for each input format using NRMSE (lower is better), NMI (normalized mutual information; higher indicates greater similarity, although the study text groups it with NRMSE as lower-is-better), PSNR (higher is better), and MSSIM (higher is better).
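
The α-FLIM and IW-FLIM inputs described under input representations can be sketched as follows. The helper names are hypothetical, min-max normalization is assumed for the per-core intensity normalization, and the blue-to-red ramp is a stand-in for the study's false-color lookup table, which is not specified; only the fixed [1.0, 5.0] ns range comes from the methods.

```python
# Hypothetical sketch of the lifetime-inclusive network inputs; images are 2D lists.
# The colormap below is a STAND-IN: the study's actual lookup table is not given.

TAU_MIN, TAU_MAX = 1.0, 5.0  # fixed false-color lifetime range (ns)


def normalize(img):
    """Min-max normalize an intensity image to [0, 1] using one global range (assumed)."""
    flat = [v for row in img for v in row]
    lo, hi = min(flat), max(flat)
    span = (hi - lo) or 1.0  # avoid division by zero on a constant image
    return [[(v - lo) / span for v in row] for row in img]


def false_color(tau):
    """Map a lifetime (ns) to an RGB triple in [0, 1] with a hypothetical linear ramp."""
    t = min(max((tau - TAU_MIN) / (TAU_MAX - TAU_MIN), 0.0), 1.0)
    return (t, 0.0, 1.0 - t)  # short lifetimes -> blue, long lifetimes -> red


def alpha_flim(intensity, lifetime):
    """alpha-FLIM: false-color lifetime RGB plus normalized intensity as a 4th channel."""
    w = normalize(intensity)
    return [[(*false_color(tau), a) for tau, a in zip(t_row, w_row)]
            for t_row, w_row in zip(lifetime, w)]


def iw_flim(intensity, lifetime):
    """IW-FLIM: each false-color channel multiplied pixel-wise by normalized intensity."""
    w = normalize(intensity)
    return [[tuple(c * a for c in false_color(tau)) for tau, a in zip(t_row, w_row)]
            for t_row, w_row in zip(lifetime, w)]


if __name__ == "__main__":
    intensity = [[0, 100], [50, 100]]
    lifetime = [[1.0, 5.0], [3.0, 6.0]]
    print(alpha_flim(intensity, lifetime)[1][0])  # (0.5, 0.0, 0.5, 0.5)
    print(iw_flim(intensity, lifetime)[1][0])     # (0.25, 0.0, 0.25)
```

Both functions share the same lifetime colors, which is why the two formats look alike when displayed; α-FLIM passes intensity to the network as a separate fourth channel, whereas IW-FLIM folds it into the three color channels.
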

Cellular lifetime signature analysis: Pathologists annotated seven cell types on the virtual H&E images; the annotations were mapped onto the corresponding FLIM images to compute pixel-wise lifetime histograms and the peak and average lifetime of each cell type.
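
Given a lifetime map and a per-pixel label map derived from the pathologists' annotations, the per-cell-type histograms and summary lifetimes can be computed roughly as follows. This is a sketch: the function name, the 0.1 ns bin width, and taking the peak as the center of the most populated bin are illustrative assumptions.

```python
from collections import defaultdict


def lifetime_signatures(lifetime, labels, bin_width=0.1, tau_max=5.0):
    """Summarize per-cell-type lifetimes from a lifetime map and a same-sized label map.

    labels[r][c] is a cell-type name (transferred from annotations on the virtual
    H&E) or None for unannotated pixels. Lifetimes are assumed already clipped to
    [0, tau_max] ns. Bin width and peak definition are illustrative choices.
    """
    n_bins = int(round(tau_max / bin_width))
    pixels = defaultdict(list)
    for t_row, l_row in zip(lifetime, labels):
        for tau, label in zip(t_row, l_row):
            if label is not None:
                pixels[label].append(tau)

    summary = {}
    for label, taus in pixels.items():
        hist = [0] * n_bins
        for tau in taus:
            hist[min(int(tau / bin_width), n_bins - 1)] += 1
        peak_bin = max(range(n_bins), key=hist.__getitem__)  # first mode on ties
        summary[label] = {
            "hist": hist,
            "peak": (peak_bin + 0.5) * bin_width,  # center of the most populated bin
            "mean": sum(taus) / len(taus),
        }
    return summary
```

Per-type averages such as those reported in the results (e.g., 0.251 ns for RBCs) are summaries of this kind, although the study's exact binning is not stated.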

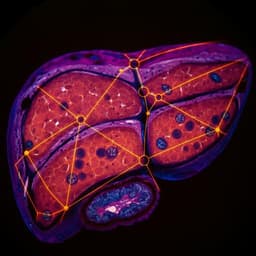

- Virtual H&E from FLIM: The method accurately reconstructs the morphology and texture of diverse cell types in the lung tumor microenvironment (TME), including tumor cells, stromal fibroblasts, inflammatory cells, and red blood cells (RBCs), matching the corresponding true H&E images even in complex tissue mixtures.

- Blinded pathologist evaluation (12 lung TMA cores): Mean scores for nuclear detail, cytoplasmic detail, overall quality, and diagnostic confidence differed only marginally between virtual and true H&E, and the virtual images scored highly on all four criteria. In one case (sample 6), all pathologists preferred the virtual image over the true H&E on all four criteria.

- Generalizability to other tissues: On colorectal and endometrial adenocarcinomas, virtual H&E was highly consistent with true H&E and accurately reconstructed most cellular details, with quality suitable for visual and quantitative analysis. The shorter lifetimes of the endometrial samples (which appear dimmer in FLIM) did not degrade virtual staining.

- FFPE lung biopsies: Virtual H&E remained diagnostically adequate, although performance was lower than on TMA slides.

- Lifetime signatures of seven cell types in the lung TME (average lifetimes): RBCs 0.251 ns; fibroblasts 0.376 ns; tumor cells 0.424 ns; lymphocytes 0.471 ns; neutrophils 0.659 ns; plasma cells 0.690 ns; macrophages 2.420 ns. RBCs had the shortest lifetimes and are difficult to discern in FLIM alone, whereas macrophages had the longest and are easily identified. Plasma cells and neutrophils had similar lifetimes, lymphocytes were less visually distinguishable, and tumor cells and fibroblasts had shorter lifetimes than the immune cells and appeared dimmer.

- Input format comparison (lung samples): Pathologists found that all three inputs (intensity, α-FLIM, IW-FLIM) produced virtual H&E satisfactory for detection and diagnosis, but intensity-based reconstructions missed macrophage clusters and misclassified some immune cells. α-FLIM and IW-FLIM handled these cases better, and IW-FLIM performed best.

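- Quantitative metrics (Table 1), comparing virtual with true H&E for each input format on two lung samples:

| Sample | Input | NRMSE (↓) | NMI (↑) | PSNR, dB (↑) | MSSIM (↑) |
| --- | --- | --- | --- | --- | --- |
| Lung 1 | Intensity | 0.127 | 1.093 | 20.085 | 0.529 |
| Lung 1 | α-FLIM | 0.127 | 1.091 | 20.121 | 0.530 |
| Lung 1 | IW-FLIM | 0.126 | 1.096 | 20.202 | 0.533 |
| Lung 2 | Intensity | 0.095 | 1.154 | 22.767 | 0.689 |
| Lung 2 | α-FLIM | 0.093 | 1.161 | 22.962 | 0.695 |
| Lung 2 | IW-FLIM | 0.092 | 1.164 | 23.026 | 0.697 |

Lifetime-inclusive formats generally outperform intensity-only input; the one exception is α-FLIM on lung sample 1, which ties on NRMSE and is marginally lower on NMI. IW-FLIM gives the best value on every metric for both samples, consistent with its visual fidelity, although the margins are small.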
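
For readers reproducing Table 1, the pixel-level metrics can be sketched in plain Python on flattened grayscale images. The normalizations are assumptions, since the study does not state them: NRMSE divides by the Euclidean norm of the reference, and NMI is (H(A) + H(B)) / H(A, B) over a joint histogram with a hypothetical 16 bins, which ranges from 1 for independent images to 2 for perfectly dependent ones. MSSIM is omitted for brevity.

```python
import math
from collections import Counter


def nrmse(ref, test):
    """RMSE normalized by the Euclidean norm of the reference: ||ref - test|| / ||ref||."""
    num = math.sqrt(sum((r - t) ** 2 for r, t in zip(ref, test)))
    den = math.sqrt(sum(r * r for r in ref))
    return num / den


def psnr(ref, test, data_range=255.0):
    """Peak signal-to-noise ratio in dB for images spanning [0, data_range]."""
    mse = sum((r - t) ** 2 for r, t in zip(ref, test)) / len(ref)
    return math.inf if mse == 0 else 10.0 * math.log10(data_range ** 2 / mse)


def _entropy(counts, n):
    """Shannon entropy (nats) of a histogram given as a Counter of bin counts."""
    return -sum((c / n) * math.log(c / n) for c in counts.values())


def nmi(ref, test, bins=16, data_range=255.0):
    """Normalized mutual information (H(A) + H(B)) / H(A, B); 1 = independent, 2 = perfectly dependent."""
    def to_bin(v):
        return min(int(v / data_range * bins), bins - 1)

    a = [to_bin(v) for v in ref]
    b = [to_bin(v) for v in test]
    n = len(a)
    h_joint = _entropy(Counter(zip(a, b)), n)
    if h_joint == 0:
        raise ValueError("NMI is undefined when both images are constant")
    return (_entropy(Counter(a), n) + _entropy(Counter(b), n)) / h_joint
```

With this definition, a larger NMI means more similar images, which matches the trend in Table 1, where IW-FLIM has both the lowest NRMSE and the highest NMI.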

The study addresses the challenge of rapidly interpreting FLIM without paired histology by generating virtual H&E images directly from FLIM, integrating both intensity and lifetime information. Incorporating lifetime improves reconstruction fidelity over intensity-only inputs, enabling accurate depiction of cellular components and preserving diagnostically relevant nuclear and cytoplasmic detail. The close agreement between blinded pathologist scores for virtual and real H&E supports clinical-grade quality and diagnostic confidence. Lifetime-informed virtual staining also maps histological identities directly onto lifetime distributions, so cell-type-specific lifetime signatures can be identified without separate staining or difficult co-registration. Whereas prior virtual staining approaches rely on autofluorescence intensity captured with fixed filter sets or on multimodal systems, this work acquires FLIM with excitation/emission settings optimized by λ-to-λ scans and augments a supervised pix2pix framework with DISTS loss to better preserve structure and texture. The comparison of input representations shows that adding lifetime is critical for correctly reconstructing immune cell populations (e.g., macrophages) and reducing misclassification. The method generalizes to colorectal and endometrial cancers and remains clinically useful on FFPE biopsies. Practical considerations include the scan time and complexity of FLIM acquisition; however, optimization substantially reduced scan times while maintaining quality, and FLIM's label-free nature and added metabolic information provide complementary value. The work also suggests that, with standardized sample handling, simple affine co-registration suffices, which improves reproducibility. Finally, alternative generative models (e.g., ResViT, DDGAN) underperformed without tailored losses, suggesting that perceptual or texture-aware objectives such as DISTS are key to high-quality synthesis.
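
The training objective credited above with preserving structure and texture can be written out explicitly. This is a sketch, assuming the standard pix2pix conditional-GAN and L1 terms and the original DISTS formulation, with that formulation's texture and structure weights renamed η and θ to match the methods. The stage index i (i = 0 being the image itself), channel index j, feature maps g̃ of G(x) and ỹ of the true H&E y, the means μ, variances σ², covariance σ_ab, stabilizing constants c₁ and c₂, and the non-negativity of the weights are assumed notation not given in this summary.

```latex
\begin{aligned}
\mathcal{L}_{\text{total}} &= \alpha\,\mathcal{L}(G,D) + \beta\,\mathcal{L}(G) + \lambda\,\mathcal{L}_{\text{DISTS}},
  \qquad \alpha = 0.1,\; \beta = 1,\; \lambda = 5 \\
\mathcal{L}(G,D) &= \mathbb{E}_{x,y}\bigl[\log D(x,y)\bigr] + \mathbb{E}_{x}\bigl[\log\bigl(1 - D(x,G(x))\bigr)\bigr] \\
\mathcal{L}(G) &= \mathbb{E}_{x,y}\bigl[\lVert y - G(x) \rVert_1\bigr] \\
\mathcal{L}_{\text{DISTS}} &= \mathbb{E}_{x,y}\Bigl[1 - \sum_{i=0}^{m}\sum_{j=1}^{n_i}
  \bigl(\eta_{ij}\, T(\tilde{g}^{(i)}_j, \tilde{y}^{(i)}_j) + \theta_{ij}\, S(\tilde{g}^{(i)}_j, \tilde{y}^{(i)}_j)\bigr)\Bigr] \\
T(a,b) &= \frac{2\mu_a\mu_b + c_1}{\mu_a^2 + \mu_b^2 + c_1}, \qquad
S(a,b) = \frac{2\sigma_{ab} + c_2}{\sigma_a^2 + \sigma_b^2 + c_2}, \qquad
\eta_{ij}, \theta_{ij} \ge 0, \quad \sum_{i,j}\bigl(\eta_{ij} + \theta_{ij}\bigr) = 1
\end{aligned}
```

The generator minimizes the total objective while the discriminator maximizes L(G,D). Because T ≤ 1 and S ≤ 1 and the weights are non-negative and sum to 1, the DISTS term is non-negative and equals 0 when the feature maps of the virtual and true H&E are identical.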

This work presents a supervised deep learning framework (pix2pix with DISTS loss) to generate clinical-grade virtual H&E staining from label-free FLIM, capitalizing on lifetime information to improve accuracy over intensity-only methods. The approach yields virtual images with diagnostic quality comparable to true H&E across lung, colorectal, and endometrial cancers, and enables immediate extraction of distinct lifetime signatures for multiple cell types in the tumor microenvironment. The study demonstrates that intensity-weighted false-color lifetime (IW-FLIM) is the most suitable input representation among those tested. By reducing dependence on arduous co-registration and providing rapid, label-free morphological and phenotypic characterization, this method expands the utility of FLIM in research and potential clinical workflows. Future directions include: exploring more advanced generative architectures combined with appropriate perceptual/texture losses; broader validation across tissue types and institutions; improving performance on biopsy samples; optimizing acquisition for speed/throughput; and integrating lifetime-informed virtual staining into downstream computational pathology and biomarker discovery pipelines.

- FLIM acquisition is more complex and time-consuming than routine H&E; although optimization reduced scan times from ~40 min to <15 min per TMA core (~30 min at higher resolution), throughput remains a consideration.

- Performance on FFPE lung biopsies was lower than on TMA slides, though still diagnostically adequate.

- The approach relies on supervised training with paired FLIM–H&E data and co-registration (albeit affine suffices under the described protocol), which may limit deployment where such pairing is unavailable.

- Intensity-only inputs can miss or misclassify certain immune populations; lifetime-inclusive formats address this, but subtle reconstruction errors may persist in challenging regions.

- Some advanced generative models failed without tailored loss design, indicating sensitivity to training objectives and the need for careful loss engineering.

- FLIM imaging settings (e.g., lifetime range mapping, excitation/emission optimization) and normalization choices may influence generalizability across instruments and sites.
