Training instance segmentation neural network with synthetic datasets for crop seed phenotyping

Y. Toda, F. Okura, et al.

The study addresses the challenge of obtaining large annotated datasets required for training deep learning models in plant phenotyping. Manual labeling is labor-intensive and often the bottleneck for deploying computer vision pipelines, especially for tasks like instance segmentation where seeds may touch or overlap. The authors hypothesize that a neural network for instance segmentation of crop seeds can be effectively trained using purely synthetic images generated via domain randomization, thereby eliminating the need for extensive manual annotations. The purpose is to demonstrate effective training for barley seed morphology and to show generalizability to other crops, enabling high-throughput, accurate phenotyping from densely arranged seeds.

The paper situates the work within deep learning applications in agriculture (weed detection, disease diagnosis, fruit detection). It reviews the high annotation demands of datasets like ImageNet and COCO versus smaller specialized datasets in agricultural tasks. It summarizes sim2real approaches using synthetic data to avoid manual annotation, including synthetic human models for pose estimation and plant image analysis with synthetic plant models for branching and leaf segmentation. It discusses the sim2real reality gap and methods to reduce it using GANs (e.g., StyleGAN for plant disease classification) and introduces domain randomization: training with large variations in synthetic images (camera, lighting, textures) to improve generalization. In plant phenotyping, prior domain randomization-like methods have been used for leaf segmentation and counting across species using synthetic leaves. The need for instance segmentation (vs. simple thresholding or watershed) is emphasized for densely touching seeds, as prior tools often require sparse seed placement.

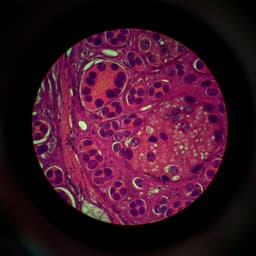

Data acquisition: Barley seeds from 20 accessions (19 domesticated, 1 wild) were threshed and scanned on an EPSON GT-X900 at 600 dpi (7019×5100 px) on a blue background. Additional species (rice, oat, lettuce, wheat cultivars) were scanned with a ScanSnap SV600 at 300 or 600 dpi (3508×2479 px).

Synthetic dataset generation (domain randomization): From each of the 20 cultivars, 20 single-seed images were isolated (400 in total). Backgrounds were removed (non-seed pixels set to RGB 0,0,0) to build a seed image pool, and four 1024×1024 background crops formed a background pool. For each synthetic image, a background was pasted onto a 1024×1024 canvas; a seed was then randomly selected from the pool, rotated by a random angle, and placed at random coordinates constrained to stay within the canvas. Alpha masks kept the area around each seed transparent, and seed perimeters were Gaussian-blurred to reduce edge artifacts. Overlap between placed seeds was limited: if the overlap area ratio exceeded 0.25, the placement was rejected and retried (up to 70 trials per image). Instance masks were generated simultaneously by assigning a unique color to each placed seed; where seeds overlapped, the mask took the color of the foreground seed. To mimic seeds truncated at the image border, the borders were cropped to yield final 768×768 images. The final synthetic dataset comprised 1200 image-mask pairs, of which 200 were held out as a synthetic test set.
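The placement loop described above can be sketched in a few lines. This is a minimal illustration, not the authors' code: seeds are stand-in ellipses rather than scanned cut-outs, the canvas is shrunk to 64×64, and the names `ellipse_pixels` and `synthesize` are hypothetical. The source does not define the overlap area ratio, so the sketch assumes it is the fraction of the new seed's pixels that land on already-placed seeds, and it treats the 70 trials as the total number of placement attempts per image. Background pasting, blurring, and the final crop are omitted.

```python
import math
import random

CANVAS = 64         # small canvas for illustration (the study used 1024x1024)
MAX_OVERLAP = 0.25  # assumed: max fraction of a new seed covering placed seeds
MAX_TRIALS = 70     # assumed: total placement attempts per image


def ellipse_pixels(cx, cy, a, b, angle):
    """Pixels inside a rotated ellipse, standing in for one seed cut-out."""
    cos_t, sin_t = math.cos(angle), math.sin(angle)
    r = int(math.ceil(max(a, b)))
    pixels = set()
    for y in range(cy - r, cy + r + 1):
        for x in range(cx - r, cx + r + 1):
            # Rotate the pixel offset into the ellipse's own frame.
            u = (x - cx) * cos_t + (y - cy) * sin_t
            v = -(x - cx) * sin_t + (y - cy) * cos_t
            if (u / a) ** 2 + (v / b) ** 2 <= 1.0:
                pixels.add((x, y))
    return pixels


def synthesize(rng, a=8, b=4):
    """Place seeds at random; return ({pixel: instance_id}, seeds_placed)."""
    instance_mask = {}
    n_placed = 0
    margin = int(math.ceil(max(a, b)))  # keeps every seed inside the canvas
    for _ in range(MAX_TRIALS):
        cx = rng.randint(margin, CANVAS - 1 - margin)
        cy = rng.randint(margin, CANVAS - 1 - margin)
        seed = ellipse_pixels(cx, cy, a, b, rng.uniform(0.0, 2.0 * math.pi))
        overlap = sum(1 for p in seed if p in instance_mask) / len(seed)
        if overlap > MAX_OVERLAP:
            continue  # too much overlap: reject this placement and retry
        n_placed += 1
        for p in seed:
            instance_mask[p] = n_placed  # foreground seed overwrites overlap
    return instance_mask, n_placed


mask, count = synthesize(random.Random(0))
```

Because the instance mask is written at the same moment a seed is pasted, the annotation is exact by construction, which is what removes the manual labeling step.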

Neural network and training: Mask R-CNN with a ResNet-101 FPN backbone (Keras/TensorFlow implementation) initialized from MS COCO weights was fine-tuned on the synthetic dataset for 40 epochs using SGD (learning rate 0.001, batch size 2). Of the 1200 synthetic images, 989 were used for training, 11 for validation, and 200 for the synthetic test set. No data augmentation was applied. Input size at inference was variable; outputs included bounding boxes, instance masks, and class scores. A score threshold of 0.5 was used to select final masks.

Evaluation datasets and metrics: A real-world test dataset comprised 20 images (one per barley cultivar), 2000×2000 px, each containing seeds from a homogeneous population. Ground-truth bounding boxes and masks were manually annotated (Labelbox). Metrics included Recall at 50% IoU for bounding boxes (Recall50) and Average Precision (AP) using mask IoUs: AP50, AP75, and AP@[.5:.95] (mean AP over IoU thresholds 0.5 to 0.95 at 0.05 steps).
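As a rough illustration of these metrics, the sketch below computes box IoU, a recall at a given IoU threshold, and the ten thresholds averaged in AP@[.5:.95]. It is a simplified sketch under stated assumptions: `box_iou` and `recall_at_iou` are hypothetical helper names, boxes are `(x1, y1, x2, y2)` tuples, and matching is a simple greedy one-to-one assignment rather than the score-ordered matching used by standard COCO-style evaluation tools.

```python
def box_iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = a
    bx1, by1, bx2, by2 = b
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def recall_at_iou(gt_boxes, pred_boxes, threshold=0.5):
    """Fraction of ground-truth boxes matched by an unused prediction
    with IoU >= threshold (greedy one-to-one matching, an assumption)."""
    unused = list(range(len(pred_boxes)))
    matched = 0
    for gt in gt_boxes:
        best, best_iou = None, threshold
        for i in unused:
            iou = box_iou(gt, pred_boxes[i])
            if iou >= best_iou:
                best, best_iou = i, iou
        if best is not None:
            unused.remove(best)
            matched += 1
    return matched / len(gt_boxes) if gt_boxes else 0.0


# IoU thresholds averaged in AP@[.5:.95]: 0.50, 0.55, ..., 0.95
AP_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]
```

AP50 and AP75 correspond to evaluating precision at the first and sixth of these thresholds, with mask IoU in place of box IoU.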

Post-processing: To select isolated, intact seeds for phenotyping, raw detections were filtered heuristically in three steps: detections whose bounding boxes lay within a 5 px margin of the image boundary were removed; low-solidity outliers were removed using the lower 25% quantile of solidity per image; and extreme length-to-width ratios were removed using the 5% and 95% quantiles as lower and upper cutoffs. This step eliminated partially occluded seeds, incomplete masks, debris, and seeds cut off at the image edge.
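The filtering heuristics can be sketched as follows. The details are assumptions rather than the authors' implementation: `percentile` and `filter_detections` are hypothetical names, each detection is a dict with `box`, `solidity`, and `lw_ratio` keys, quantile cutoffs are computed per image after the edge filter, and values exactly on a cutoff are kept.

```python
def percentile(values, q):
    """Linear-interpolation percentile (q in [0, 100]) of a non-empty list."""
    s = sorted(values)
    pos = (len(s) - 1) * q / 100.0
    lo = int(pos)
    hi = min(lo + 1, len(s) - 1)
    return s[lo] + (s[hi] - s[lo]) * (pos - lo)


def filter_detections(dets, image_w, image_h, margin=5):
    """Keep detections that look like isolated, whole seeds.

    Each detection is a dict with 'box' (x1, y1, x2, y2), 'solidity',
    and 'lw_ratio' (length-to-width ratio).
    """
    # 1. Drop boxes that come within `margin` px of the image boundary.
    inside = [
        d for d in dets
        if d["box"][0] >= margin and d["box"][1] >= margin
        and d["box"][2] <= image_w - margin
        and d["box"][3] <= image_h - margin
    ]
    if not inside:
        return []
    # 2. Per-image cutoffs (assumed to be computed after the edge filter).
    sol_min = percentile([d["solidity"] for d in inside], 25)
    lw_lo = percentile([d["lw_ratio"] for d in inside], 5)
    lw_hi = percentile([d["lw_ratio"] for d in inside], 95)
    # 3. Drop low-solidity and extreme length-to-width detections.
    return [
        d for d in inside
        if d["solidity"] >= sol_min and lw_lo <= d["lw_ratio"] <= lw_hi
    ]
```

Quantile cutoffs of this kind only make sense when the seeds in one image come from a single population, since they treat the image's own distribution as the reference.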

Morphological analysis: From masks, seed area, width, length, perimeter, circularity, eccentricity, solidity, and length-to-width ratio were computed (scikit-image regionprops). Statistical comparisons used one-way ANOVA with Tukey HSD.
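Several of these descriptors are simple functions of the basic measurements. The sketch below uses common definitions, which are assumed here rather than confirmed by the source: circularity as 4πA/P², solidity as area over convex hull area, and eccentricity of an ellipse whose major and minor axes are taken to be the seed length and width. `shape_descriptors` is a hypothetical helper; in practice these values would come from a region-properties routine applied to each mask.

```python
import math


def shape_descriptors(area, perimeter, length, width, convex_area):
    """Derived shape descriptors for one seed mask.

    `length` and `width` are assumed to be the major and minor axis
    lengths of an ellipse fitted to the mask (length >= width).
    """
    return {
        "lw_ratio": length / width,
        "circularity": 4.0 * math.pi * area / perimeter ** 2,
        "eccentricity": math.sqrt(1.0 - (width / length) ** 2),
        "solidity": area / convex_area,
    }


# Sanity check on a perfect circle of radius 10: every ratio collapses
# to its reference value (circularity 1, eccentricity 0).
r = 10.0
circle = shape_descriptors(math.pi * r ** 2, 2.0 * math.pi * r,
                           2.0 * r, 2.0 * r, math.pi * r ** 2)
```

An elongated barley seed would score lower on circularity and higher on eccentricity and length-to-width ratio than this circular reference.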

Contour-based descriptors: Elliptic Fourier Descriptors (EFD) were computed from binary contours with 20 harmonics and normalization for rotation/size invariance (pyefd). The 77 informative coefficients (excluding the first three) were used for PCA.
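To make the coefficient count concrete: each harmonic contributes four coefficients, so 20 harmonics give 80 values, and dropping the first three leaves 77. The sketch below computes raw elliptic Fourier coefficients following the standard Kuhl and Giardina formulation. It is an illustrative stand-in for the library call, not the study's code: `elliptic_fourier_coefficients` is a hypothetical function, and it does not perform the rotation and size normalization. The first three coefficients are dropped after normalization because, as far as I understand the usual convention, they become constants at that point.

```python
import math


def elliptic_fourier_coefficients(contour, order=20):
    """Raw (un-normalized) elliptic Fourier coefficients of a closed contour.

    `contour` is a list of distinct consecutive (x, y) points; the first
    point is not repeated at the end. Returns `order` rows [a, b, c, d].
    """
    pts = contour + [contour[0]]  # close the contour
    n_seg = len(contour)
    dx = [pts[i + 1][0] - pts[i][0] for i in range(n_seg)]
    dy = [pts[i + 1][1] - pts[i][1] for i in range(n_seg)]
    dt = [math.hypot(x, y) for x, y in zip(dx, dy)]
    t = [0.0]  # cumulative arc length at each vertex
    for step in dt:
        t.append(t[-1] + step)
    total = t[-1]
    coeffs = []
    for n in range(1, order + 1):
        k = 2.0 * n * math.pi / total
        scale = total / (2.0 * n * n * math.pi ** 2)
        a = b = c = d = 0.0
        for i in range(n_seg):
            dcos = math.cos(k * t[i + 1]) - math.cos(k * t[i])
            dsin = math.sin(k * t[i + 1]) - math.sin(k * t[i])
            a += dx[i] / dt[i] * dcos
            b += dx[i] / dt[i] * dsin
            c += dy[i] / dt[i] * dcos
            d += dy[i] / dt[i] * dsin
        coeffs.append([scale * a, scale * b, scale * c, scale * d])
    return coeffs


# A circle of radius 10 sampled at 360 points, used only as a test shape.
circle_pts = [(10.0 * math.cos(math.radians(i)), 10.0 * math.sin(math.radians(i)))
              for i in range(360)]
coeffs = elliptic_fourier_coefficients(circle_pts, order=20)
flat = [value for row in coeffs for value in row]  # 20 harmonics x 4 = 80
features = flat[3:]                                # 77 values kept for PCA
```

For the circle, nearly all of the signal sits in the first harmonic, which is why higher harmonics are what distinguish seed outlines from a plain ellipse.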

Representation learning: A convolutional Variational Autoencoder (VAE) with 4 encoder conv layers (filters 32, 64, 128, 256) and symmetric decoder deconvs (256, 128, 64, 32) mapped 256×256×3 inputs to a 2D latent space (Gaussian prior). The 2D latent variables were used for visualization and interpolation; reconstructions captured shape, size, texture/brightness features.
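The two ingredients that tie the encoder output to a Gaussian prior can be sketched without any deep learning framework. The summary does not state the loss or sampling details, so the sketch assumes the standard VAE formulation: the encoder emits a mean and log-variance per latent dimension, sampling uses the reparameterization trick, and the regularizer is the KL divergence to a standard normal. The names `reparameterize` and `kl_to_standard_normal` are hypothetical.

```python
import math
import random


def reparameterize(mu, log_var, rng):
    """Sample z = mu + sigma * eps with eps ~ N(0, 1) per latent dimension."""
    return [m + math.exp(0.5 * lv) * rng.gauss(0.0, 1.0)
            for m, lv in zip(mu, log_var)]


def kl_to_standard_normal(mu, log_var):
    """KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over dimensions."""
    return -0.5 * sum(1.0 + lv - m * m - math.exp(lv)
                      for m, lv in zip(mu, log_var))


# A 2D latent code, matching the latent dimensionality described above.
rng = random.Random(0)
z = reparameterize([0.3, -1.2], [-2.0, -2.0], rng)
penalty = kl_to_standard_normal([0.3, -1.2], [-2.0, -2.0])
```

Keeping the latent space two-dimensional means each seed image maps to a point that can be plotted directly, with no further dimensionality reduction.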

Cross-species application: Independent synthetic datasets were generated for wheat, rice, oat, and lettuce, and separate models were trained and evaluated visually on real images.

- Instance segmentation performance (Mask R-CNN trained purely on synthetic data):
  - Synthetic test dataset: Recall50 = 0.95; AP@[.5:.95] = 0.73; AP50 = 0.96; AP75 = 0.93.
  - Real-world barley test dataset (20 cultivars, average over images): Recall50 = 0.96; AP@[.5:.95] = 0.59; AP50 = 0.95; AP75 = 0.86. Per-cultivar Recall50 ranged 0.87–1.00; AP50 ranged 0.78–0.99.

- Visual results showed accurate detection and separation of seeds even when they touched or overlapped. By contrast, a model trained on manual annotations alone performed poorly on dense clusters, a weakness that domain randomization notably improved.

- Post-processed seed area distributions shifted from long-tailed to approximately normal within cultivars; inferred seed area correlated strongly with manual ground truth (Pearson r = 0.97), with 96.8% of seeds within 10% error.

- Barley morphology across 19 cultivars (4464 seeds; ~235 per cultivar): differences detected in area, width, length, and length-to-width ratio with ANOVA/Tukey significance.

- PCA on eight morphological descriptors: PC1+PC2 explained 88.5% variance; cultivars formed distinguishable clusters; length and perimeter loaded on PC1 opposite circularity; width and length-to-width ratio influenced PC2; length-width correlation r ≈ 0.5 (p < 0.01).

- EFD-PCA captured contour shape but with intermixed clusters due to size normalization; PC1 reflected slenderness; PC2 associated with longitudinal edge sharpness.

- VAE latent space provided clearer cultivar clustering than PCA on handcrafted features; latent dimension Z1 associated with brightness/color and size, Z2 with seed length.

- Generalization: Models trained with species-specific synthetic datasets successfully segmented real images of rice, wheat, oat, and lettuce across varying sizes, shapes, textures, and backgrounds.
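The agreement statistics reported for seed area (a Pearson correlation and the share of seeds within 10% error) can be computed as in the sketch below. The numbers in the usage lines are made up for illustration and are not data from the study; `pearson_r` and `fraction_within` are hypothetical helpers, and the sketch assumes each predicted area has already been paired with its manually annotated counterpart.

```python
import math


def pearson_r(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def fraction_within(pred, truth, rel_tol=0.10):
    """Share of predictions whose relative error is at most `rel_tol`."""
    hits = sum(1 for p, t in zip(pred, truth) if abs(p - t) <= rel_tol * t)
    return hits / len(truth)


# Illustrative values only (arbitrary area units), not study data.
truth_area = [100.0, 120.0, 140.0, 160.0]
pred_area = [102.0, 118.0, 141.0, 200.0]
r_value = pearson_r(pred_area, truth_area)
share_ok = fraction_within(pred_area, truth_area)
```

Reporting both numbers is useful because a high correlation alone can hide a systematic bias in predicted area, which the relative-error share would expose.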

The findings demonstrate that domain-randomized synthetic images can effectively train an instance segmentation network that generalizes to real-world seed images, addressing the bottleneck of manual annotation. High Recall50 and AP50 on real data indicate reliable object localization and adequate mask quality for phenotyping, despite lower AP at stricter IoUs likely influenced by mask annotation variability. The approach excels in separating touching seeds, a failure point for traditional thresholding/watershed or models trained on limited manual annotations. Robust post-processing further ensures selection of integral seeds, enabling accurate downstream morphological analyses at scale. The study highlights that for seed instance segmentation, capturing diverse orientations and spatial interactions in synthetic data is more critical than exhaustive texture variety. Multivariate analyses (PCA, EFD-PCA) and learned representations (VAE) corroborate the pipeline’s capacity to extract biologically meaningful variation among cultivars, facilitating sensitive detection of subtle phenotypic differences. Cross-species applicability suggests the method’s general utility in agricultural phenotyping. Overall, the synthetic-data-first strategy reduces human labor, accelerates pipeline development, and supports large-scale, statistically rigorous phenotyping, positioning it as a practical solution for studies like GWAS, QTL mapping, and mutant screening.

The study introduces a sim2real, domain-randomization pipeline to train Mask R-CNN for crop seed instance segmentation using only synthetic images. It achieves strong detection and segmentation on real barley images (Recall50 0.96; AP50 0.95) and generalizes to multiple crop species. The pipeline automates high-throughput phenotyping, accurately quantifying morphology from densely arranged seeds and enabling comprehensive multivariate analyses and representation learning. Future work should optimize synthetic data parameters (image resolution, diversity, dataset size), incorporate additional augmentations (color shift, zoom), and explore integrating integrity classification to reduce post-processing. Extending domain randomization to more complex scenes (e.g., spikelets with glumes/awns) and heterogeneous populations, and coupling with genetic studies, can further expand applicability and biological impact.

- Potential sim2real gap: AP at higher IoUs (AP75, AP@[.5:.95]) is lower on real data than on synthetic data, partly due to subtle variability in manual mask annotations. Synthetic test performance may also be inflated by data leakage: the synthetic test images draw on the same seed textures as the training images, even though orientations and combinations differ.

- Dependence on data parameters: Model performance is influenced by image resolution, seed image variance in the synthetic pool, and training dataset size; optimal settings may vary by cultivar/species and were not exhaustively tuned.

- Post-processing assumptions: Heuristic filtering assumes homogeneous seed populations; applicability to heterogeneous populations needs validation. The approach excludes occluded/edge-cut seeds, potentially biasing analyses.

- Interpretability: The network remains a black box; feature importance underlying generalization from synthetic to real is not explicitly verified.

- Limited augmentation: No additional color/scale augmentations were used during training; robustness to varied imaging conditions may be improved with more diverse augmentations.
