
SynthVision - Harnessing Minimal Input for Maximal Output in Computer Vision Models using Synthetic Image Data

Y. Kularathne, P. Janitha, et al.

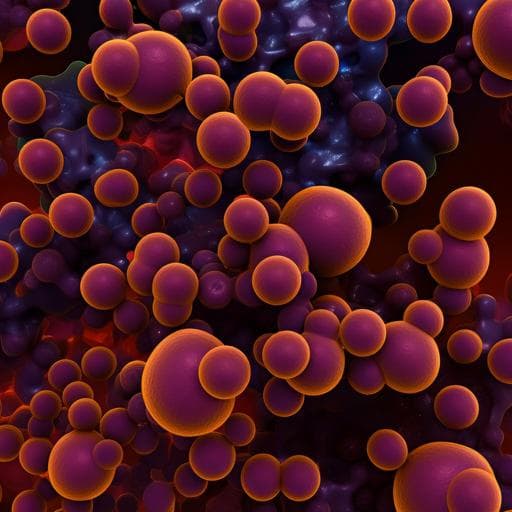

Discover SynthVision, an approach to building disease-detection models developed by Yudara Kularathne, Prathapa Janitha, Sithira Ambepitiya, Thanveer Ahamed, Dinuka Wijesundara, and Prarththanan Sothyrajah. Starting from just 10 real images, the method generated 500 clinically validated synthetic images. These were used to train a model that detects HPV genital warts with high precision and recall. Because it needs so few real images, the technique could significantly shorten the time needed to build diagnostic models when a rapid medical response is required.
