
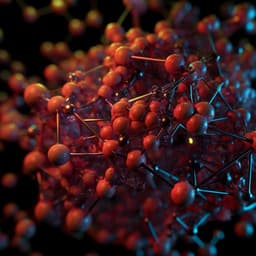

Scaling deep learning for materials discovery

A. Merchant, S. Batzner, et al.

This research by Amil Merchant, Simon Batzner, Samuel S. Schoenholz, Muratahan Aykol, Gowoon Cheon, and Ekin Dogus Cubuk shows how graph neural networks trained at scale can accelerate materials discovery. The work identifies 2.2 million new crystal structures that are stable with respect to previously known materials. This is an order-of-magnitude expansion over the roughly 48,000 stable crystals identified before. Many of the new structures combine elements in ways that earlier chemical intuition had not explored.

