ProtGPT2 is a deep unsupervised language model for protein design

N. Ferruz, S. Schmidt, et al.

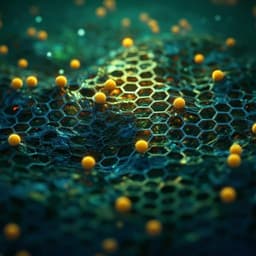

ProtGPT2, developed by Noelia Ferruz, Steffen Schmidt, and Birte Höcker, is a language model that generates de novo protein sequences. The generated sequences retain the amino acid propensities of natural proteins, yet they are only distantly related to known sequences and sample previously unexplored regions of protein space. Structure predictions indicate that many of them fold into well-defined, non-idealized structures, including topologies not found in current structure databases. Because ProtGPT2 generates sequences in seconds and is freely available, it is a practical tool for protein design research.
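One simple way to check whether generated sequences "retain natural amino acid propensities" is to compare their amino acid composition with that of a natural reference set. The sketch below is a minimal illustration under stated assumptions. It assumes sequences are plain one-letter strings and uses hypothetical helper names (`aa_composition`, `composition_distance`). It is not the analysis the authors performed.

```python
from collections import Counter

# The 20 canonical amino acids in one-letter code.
CANONICAL = "ACDEFGHIKLMNPQRSTVWY"


def aa_composition(sequences):
    """Return the fraction of each canonical amino acid across all sequences.

    Hypothetical helper for illustration. Lowercase letters are uppercased;
    non-canonical letters (e.g. X, B, Z) and whitespace are ignored.
    """
    counts = Counter()
    for seq in sequences:
        counts.update(ch for ch in seq.upper() if ch in CANONICAL)
    total = sum(counts.values())
    if total == 0:
        # No canonical residues at all: return an all-zero composition.
        return {aa: 0.0 for aa in CANONICAL}
    return {aa: counts[aa] / total for aa in CANONICAL}


def composition_distance(comp_a, comp_b):
    """Sum of absolute per-residue differences (0 = identical, 2 = disjoint)."""
    return sum(abs(comp_a[aa] - comp_b[aa]) for aa in CANONICAL)


if __name__ == "__main__":
    # Made-up toy sequences, not real ProtGPT2 output or natural proteins.
    natural = ["MKTAYIAKQRQISFVKSHFSRQ", "MSLLEKAAELGLR"]
    generated = ["MKKLLEAAGRSVIFQTAK"]
    d = composition_distance(aa_composition(natural), aa_composition(generated))
    print(f"composition distance: {d:.3f}")
```

A small distance suggests similar overall residue usage. Composition alone does not show that a sequence folds or is novel, which is why the summary above also points to structure prediction and sequence-similarity analyses.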