
Non-orthogonal optical multiplexing empowered by deep learning

T. Pan, J. Ye, et al.

The study addresses the capacity limits imposed by the traditional orthogonal paradigm in optical multiplexing (e.g., space-, wavelength-, polarization-, and mode-division multiplexing). The research question is whether non-orthogonal optical multiplexing, in which channels share polarization, wavelength, and spatial position, can be achieved and reliably demultiplexed from a single intensity speckle after propagation through a multimode fiber (MMF). Conventional inverse transmission matrix methods fail for non-orthogonal inputs with identical polarization and wavelength when only single-shot intensity is available. The authors propose a deep learning-based approach (SLRnet) that learns the inverse mapping from the speckle intensity to the underlying non-orthogonal input light fields, aiming to overcome the ill-posed nature of this problem and enable higher-capacity optical multiplexing beyond orthogonality. The work is motivated by the fact that non-orthogonal channels have so far either lacked effective demultiplexing methods or required heavy digital signal processing.

The paper situates its contribution within two lines of prior work: (1) Optical multiplexing traditionally relies on orthogonal channels, including space-, wavelength-, polarization-, and mode-division multiplexing, which inherently cap capacity. The transmission matrix method enables demultiplexing over scattering media such as MMFs but presumes orthogonality or separate degrees of freedom. (2) Deep learning has been widely used in optics for inverse design and computational imaging, and more recently to enhance orthogonal multiplexing performance through multiple scattering media and MMFs. However, the literature has not addressed non-orthogonal multiplexing where channels share polarization, wavelength, and spatial position, leaving open the problem of decoding multiple-to-one mappings from single-shot intensity data. The authors highlight residual optical asymmetries and MMF multiple scattering as features that data-driven models could exploit to resolve such inverse mappings.

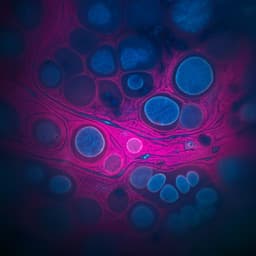

Principle: The MMF single-channel input-output relationship is modeled as E_out = T E_in, where T is the transmission matrix. For multiple non-orthogonal input fields with the same wavelength and potentially the same polarization and spatial region, the input is E_in = Σ_i E_i with E_i = a A_i(x,y) exp(i φ_i(x,y)), where the i inside the exponential is the imaginary unit and the subscript i indexes the input field. The camera measures intensity I = |Σ_i T E_i|^2, defining an unknown mapping H from the set of input amplitude/phase pairs C_n to the speckle I. For n > 1, an analytic inverse H^-1 is unavailable with single-shot intensity, motivating a data-driven approximation R_θ ≈ H^-1 learned via supervised training to recover C_n from I.

Neural network: The proposed SLRnet is a U-Net variant (ResUNet) preceded by a fully connected (FC) block. The FC layer enhances fitting/generalization and helps undo nonlocal dispersion induced by the MMF. The ResUNet comprises residual convolutional (ResConv) downsampling blocks, residual transposed convolutional (ResConvT) upsampling blocks, abundant skip connections between symmetric encoder/decoder stages, and a 1×1 output Conv for channel compression. Batch normalization and ReLU activations are used. The network outputs four maps corresponding to the amplitude and phase of two input light fields (n=2), representing four encoded channels. The labeling ensures orthogonal supervision of non-orthogonal channel information.

Data and encoding: Input images are encoded into amplitude and phase using a phase-only SLM via a complex-amplitude hologram method that modulates amplitude through spatial diffraction efficiency, with 4f filtering to select the first-order diffraction. Amplitude images are scaled from 0–1 to 0.2–1. Speckle intensities recorded at the MMF distal end are resized to 200×200 for network input. Datasets include Fashion-MNIST for primary demonstrations and additional tests with ImageNet natural images, CelebA faces, uncorrelated random binary data, and out-of-distribution Muybridge snapshots.
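
The forward model stated under Principle, together with the 0–1 to 0.2–1 amplitude scaling, can be illustrated with a small NumPy sketch. It is not the authors' code: the random complex Gaussian matrix standing in for T, the toy grid sizes, and the function names `scale_amplitude` and `speckle_intensity` are all assumptions made for illustration, and the stray prefactor in the field expression is omitted.

```python
import numpy as np

def scale_amplitude(img, lo=0.2, hi=1.0):
    """Linearly map an image with values in [0, 1] to [lo, hi] (0.2-1 in the summary)."""
    return lo + (hi - lo) * np.asarray(img, dtype=float)

def speckle_intensity(T, amplitudes, phases):
    """Return I = |sum_i T E_i|^2 with E_i = A_i * exp(1j * phi_i), fields flattened."""
    fields = [a.ravel() * np.exp(1j * p.ravel()) for a, p in zip(amplitudes, phases)]
    e_out = T @ np.sum(fields, axis=0)  # T is linear, so it acts on the summed field
    return np.abs(e_out) ** 2           # the camera records intensity only

rng = np.random.default_rng(0)
n_in, n_out = 16 * 16, 32 * 32  # toy sizes, not taken from the paper

# Assumption: a random complex Gaussian matrix as a stand-in for the MMF transmission matrix
T = (rng.normal(size=(n_out, n_in)) + 1j * rng.normal(size=(n_out, n_in))) / np.sqrt(2 * n_in)

amps = [scale_amplitude(rng.random((16, 16))) for _ in range(2)]  # two input fields (n = 2)
phis = [2 * np.pi * rng.random((16, 16)) for _ in range(2)]
speckle = speckle_intensity(T, amps, phis)

# Swapping the two fields leaves the summed field, and hence the speckle, unchanged
speckle_swapped = speckle_intensity(T, amps[::-1], phis[::-1])
print(np.allclose(speckle, speckle_swapped))
```

In this idealized model both fields pass through one shared T, so the speckle depends only on their sum and many different input pairs give the same intensity. That is one way to see why no analytic inverse exists for n > 1, and why the summary points to residual optical asymmetries as the information a learned model would need to exploit.
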
Experimental setup: A 532 nm, 50 mW laser (horizontally polarized) is split into two beams of equal size. A phase-only SLM generates amplitude+phase modulations; a 4f system (L2–iris–L3) selects the first-order diffracted beam. A wave plate sets the polarization of one beam while the other remains horizontal. The two beams are combined with a non-polarizing beamsplitter to form collinear beams with arbitrary polarization combinations, then coupled into a step-index MMF (two variants tested: 1 m, ϕ=400 µm, NA=0.22; and 50 m, ϕ=105 µm, NA=0.22). Output speckle is collected with an objective and recorded by a CMOS camera, then converted to grayscale images for training/validation.

Training: Implemented in PyTorch 1.13.0 (Python 3.9.13). Optimizer: AdamW. Learning rate schedule: initial 2×10^-4, warm-up to 1×10^-3 after 5 epochs, cosine annealing to 0 by the last epoch. Loss: mean absolute error. Total epochs: 200. Data split: 90% training, 10% validation with uniform sampling. Validation metrics include Pearson correlation coefficient (PCC) and SSIM.
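
The learning-rate schedule listed under Training can be written as a plain function of the epoch index. The sketch below uses only the numbers given above; the linear shape of the warm-up, per-epoch (rather than per-step) updates, and reaching exactly zero at epoch 200 are assumptions, since the summary does not specify them. The name `learning_rate` is hypothetical.

```python
import math

def learning_rate(epoch, total_epochs=200, warmup_epochs=5,
                  lr_start=2e-4, lr_peak=1e-3):
    """Warm-up from lr_start to lr_peak, then cosine annealing down to 0."""
    if epoch < warmup_epochs:
        # Assumption: the warm-up is linear in the epoch index
        return lr_start + (lr_peak - lr_start) * epoch / warmup_epochs
    # Fraction of the annealing phase completed, from 0 to 1
    progress = (epoch - warmup_epochs) / (total_epochs - warmup_epochs)
    return 0.5 * lr_peak * (1.0 + math.cos(math.pi * progress))

# Print the schedule at the start, the end of warm-up, mid-training, and the end
for epoch in (0, 5, 100, 200):
    print(epoch, learning_rate(epoch))
```

In a PyTorch training loop, a function of this kind could be applied by writing its value into each optimizer parameter group's `lr` entry at the start of every epoch.
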

- SLRnet successfully demultiplexes non-orthogonal input channels (two light fields) from a single-shot speckle, even when channels share the same polarization, wavelength, and input spatial region.

- Across 12 polarization combinations (linear, circular, elliptical) over a 1 m MMF, validation PCC exceeds 0.97 after 100 epochs, with average retrieved fidelity ~0.98 for both amplitude and phase channels.

- Visual reconstructions demonstrate high-quality retrieval for cases including identical polarizations (0° & 0°), 0° & 10°, 0° & 90°, and 0° & elliptical; typical SSIM/PCC values for Fashion-MNIST amplitude/phase reconstructions approach ~0.95–0.99.

- Over a 50 m MMF with same-polarization channels, demultiplexing remains effective though degraded relative to 1 m due to increased environmental sensitivity; representative SSIM/PCC values remain high (e.g., SSIM ~0.84–0.93, PCC ~0.91–0.98 across shown examples).

- Generalization to more complex content: For ImageNet natural images with amplitude+phase encoding, average SSIM/PCC = 0.737/0.905; with phase-only encoding, average SSIM/PCC = 0.819/0.945. For out-of-distribution Muybridge phase-only images, typical SSIM/PCC up to 0.907/0.986.

- Overall fidelity reported up to ~98% for multidimensional light-field retrieval, with performance comparable to or surpassing prior orthogonal multiplexing approaches in fidelity and spatial channel numbers.
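
The PCC values quoted in the findings above compare a retrieved map with its ground truth. A minimal NumPy sketch of that metric follows, assuming the standard definition of the Pearson correlation coefficient computed over all pixels; the function name `pearson_cc` and the synthetic test images are illustrative and not taken from the paper. SSIM is not covered here.

```python
import numpy as np

def pearson_cc(pred, target):
    """Pearson correlation coefficient between two images, computed over all pixels."""
    p = np.asarray(pred, dtype=float).ravel()
    t = np.asarray(target, dtype=float).ravel()
    p = p - p.mean()  # remove the mean so only the variation is compared
    t = t - t.mean()
    # Undefined (division by zero) if either image is constant
    return float((p * t).sum() / np.sqrt((p ** 2).sum() * (t ** 2).sum()))

rng = np.random.default_rng(1)
target = rng.random((28, 28))                            # synthetic stand-in for a ground-truth map
retrieved = target + 0.05 * rng.normal(size=(28, 28))    # synthetic "retrieval" with added noise
print(pearson_cc(retrieved, target))
```

Because the mean is subtracted and the result is normalized, PCC is unchanged by a global offset or positive rescaling of the retrieved map, which is worth keeping in mind when reading it alongside SSIM.
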

The findings demonstrate that deep learning can approximate the inverse mapping from single-shot speckle intensity to multiple non-orthogonal input light fields transmitted through an MMF, overcoming the failure of inverse transmission-matrix methods in non-orthogonal scenarios. Leveraging residual optical asymmetries and MMF multiple scattering, SLRnet robustly decodes amplitude and phase information for two input light fields across arbitrary polarization pairs, including identical polarizations, effectively breaking the orthogonality constraint. The approach achieves high fidelity (~98%) and shows robustness to environmental perturbations present in both training and validation sets, with better stability for shorter fibers (1 m) than longer ones (50 m). The performance is comparable to or better than prior orthogonal multiplexing demonstrations, suggesting a path to higher-capacity optical systems using non-orthogonal channels. Scaling to more channels is feasible but will require larger datasets and potentially architectural advances or physics-informed priors to maintain fidelity and generalization.

This work introduces and validates non-orthogonal optical multiplexing over MMFs using a deep neural network (SLRnet) that retrieves multiple light fields’ amplitude and phase from a single-shot speckle. The method breaks the traditional orthogonality requirement and achieves high-fidelity (~98%) demultiplexing across diverse polarization combinations and content types, including general natural images and out-of-distribution samples. The approach paves the way for leveraging additional optical degrees of freedom and enhancing the capacity of optical communication and information processing through MMFs. Future research directions include: integrating more degrees of freedom (e.g., wavelength, orbital angular momentum), improving network architectures (e.g., transformer/self-attention, Fourier-prior networks), incorporating physics-informed models of MMF propagation to reduce data requirements and boost fidelity, and applying transfer learning and joint training across environmental conditions to enhance robustness and scalability.

- Current fidelity, while high, may not meet unity-fidelity requirements for applications such as medical diagnosis.

- Performance degrades with longer fibers (e.g., 50 m) due to increased environmental sensitivity of MMF scattering properties.

- Scaling to more than two input channels increases data requirements substantially; capacity growth can demand exponentially more training data.

- The method relies on supervised learning with paired speckle–wavefront datasets and may require retraining or adaptation for different setups or environmental changes, though joint/transfer learning can mitigate this.
