Engineering and Technology

Machine learning enables design automation of microfluidic flow-focusing droplet generation

A. Lashkaripour, C. Rodriguez, et al.

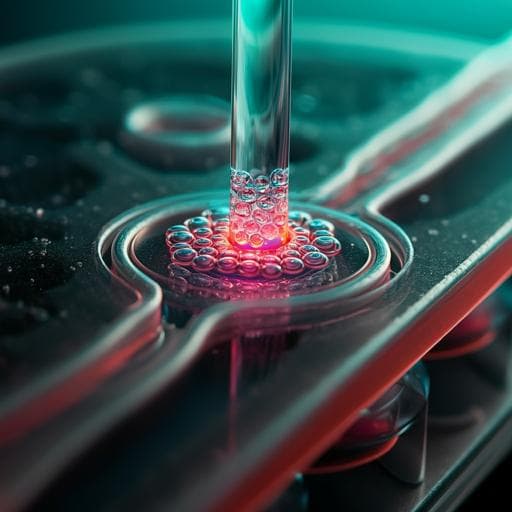

Miniaturized liquid handling is increasingly needed for high-sensitivity, high-throughput life science applications. Droplet microfluidics offers exceptional throughput, volume reduction, and sensitivity, but its widespread adoption is limited by the complex, hard-to-predict nature of droplet formation, by fabrication costs, and by the limitations of numerical modeling. Flow-focusing geometries provide broad performance ranges but lack generalizable analytical models because many parameters interact. Leveraging low-cost rapid prototyping, the authors aim to build large experimental datasets to train machine learning models that accurately predict droplet diameter and generation rate from device geometry, flow conditions, and fluid properties. The research goal is automated, application-driven design of droplet generators that meet user-specified performance while reducing the expertise and design iterations required.

Existing liquid handling approaches (robotic systems, digital microfluidics) face trade-offs among volume, throughput, and cost. Droplet microfluidics offers promising performance but is challenged by complex multiphase dynamics and by sensitivity to geometry, flow, and fluid properties. Various generator geometries (T-junction, step-emulsification, co-flow, flow-focusing) have been studied, with flow-focusing allowing wider performance ranges. Prior scaling laws and phenomenological models provide insight but lack accuracy across broad parameter spaces for flow-focusing devices. Recent advances in rapid prototyping lower the barrier to producing large datasets, creating an opportunity to apply machine learning to predictive modeling and design automation, which the field has lacked since its inception.

- Device design and fabrication: Six geometric parameters define the flow-focusing droplet generator: orifice width, orifice length, water inlet width, oil inlet width, outlet width, and channel depth. Using desktop CNC micromilling and layered assembly, 43 unique devices were fabricated: 25 selected via an orthogonal Taguchi design and 18 added iteratively during tool verification.

- Fluids and flow conditions: The continuous phase was NF 350 mineral oil with 5% Span 80; the dispersed phase was DI water (colored for visualization). Devices were tested across 65 unique combinations of capillary number and flow rate ratio, yielding 998 experiments (between 1 and 34 flow conditions per device). Measured outputs were droplet diameter, generation rate, and regime (dripping or jetting).

- Dataset and bounded range: Observed diameters spanned 27.5–460 µm and generation rates 0.47–818 Hz. Model training used a bounded range of 25–250 µm (diameter) and 5–500 Hz (rate), comprising 888 points (474 dripping, 414 jetting).

- Machine learning models: Multi-layer feed-forward neural networks were built for (i) regime classification and (ii) regression of diameter and rate within each regime, yielding one classifier plus four regression models (dripping diameter, dripping rate, jetting diameter, jetting rate). Inputs were eight design parameters: the six geometric parameters plus two flow-condition descriptors (capillary number and flow rate ratio). Outputs were regime, diameter, or rate. Networks used ReLU activations, the Adam optimizer, dropout, early stopping, and cross-validation. Data were split 80/20 into training and test sets, and performance is reported over 10 randomized runs.

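- Redundancy via conservation of mass: In addition to predicting diameter and rate separately, an inferred diameter $D_{\mathrm{inf}}$ was computed from the predicted rate $F_{\mathrm{pred}}$ using conservation of mass, $Q_d = \frac{\pi}{6} D^3 F$, where $Q_d$ is the dispersed-phase flow rate, so that $D_{\mathrm{inf}} = \left( \frac{6 Q_d}{\pi F_{\mathrm{pred}}} \right)^{1/3}$. This enables cross-checking and avoidance of design regions where either model underperforms.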

- Baseline comparison: Predictions were compared to prior scaling laws for flow-focusing to assess accuracy.

- Generalization studies:
  - Unseen parameter prediction: Six new flow conditions were tested to evaluate out-of-sample performance.
  - Data reduction: Models were trained on sub-sampled datasets (50–325 points per regime) to assess the minimal dataset size required.
  - Neural Optimizer: A web-based AutoML tool that lets users upload datasets and automatically train optimized neural networks without ML expertise.
  - Transfer learning: Models pre-trained on DI water/NF 350 data were fine-tuned on small datasets for new fluids: (1) LB media + NF 350 (36 points; both regimes) and (2) DI water + light mineral oil + 2% Span 80 (18 points; dripping only). Early layers were frozen and later layers fine-tuned.

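- Design automation algorithm: Starting from the dataset point closest to the target performance, the algorithm iteratively perturbs each of the 8 input parameters by ± one step (16 candidates per iteration) and evaluates the candidates with the predictive models to minimize the cost function $C(x) = |D_{\mathrm{des}} - D_{\mathrm{pred}}| + |F_{\mathrm{des}} - F_{\mathrm{pred}}| + |D_{\mathrm{des}} - D_{\mathrm{inf}}|$, where $D_{\mathrm{des}}$ and $F_{\mathrm{des}}$ are the desired diameter and rate and $D_{\mathrm{inf}}$ is the diameter inferred from $Q_d$ and $F_{\mathrm{pred}}$. Iterations continued until convergence within model tolerance.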

- Tolerance and sensitivity analysis: Using variance-based (Sobol) sensitivity analysis with quasi-Monte Carlo sampling over user-specified parameter tolerances, total-effect indices identified principal contributors to performance variability. Heatmaps and flow-rate adjustment curves were generated to guide correction of deviations.

- Case study (single-cell encapsulation): The tool integrated Poisson-based cell-loading calculations that use the predicted generation rate and the dispersed-phase flow rate to compute the required cell concentration. A constrained design (with aspect-ratio and inlet constraints) for 50 µm droplets at 150 Hz was fabricated and tested with 10 µm fluorescent beads to validate encapsulation statistics.

- Implementation and availability: Models and tools were implemented in Python (NumPy, Pandas, Scikit-learn, Keras; Flask for web back-end; Bootstrap/jQuery front-end). Data and code are publicly available (DAFD and Neural Optimizer GitHub repositories; datasets at dafdcad.org).
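
The model structure described under "Machine learning models" (one regime classifier that decides which pair of regime-specific regressors to query) can be sketched as follows. This is a minimal illustration, not the authors' Keras code: `RegimeRoutedPredictor` and the toy lambda models are hypothetical stand-ins, and only the routing logic mirrors the description.

```python
from typing import Callable, Dict, Sequence

# Hypothetical aliases for illustration; the paper's models were Keras networks.
Features = Sequence[float]  # 6 geometric parameters + capillary number + flow rate ratio
Model = Callable[[Features], float]


class RegimeRoutedPredictor:
    """Route a design through a regime classifier, then regime-specific regressors."""

    def __init__(self, classify: Callable[[Features], str],
                 regressors: Dict[str, Dict[str, Model]]):
        self.classify = classify      # returns "dripping" or "jetting"
        self.regressors = regressors  # regime -> {"diameter": model, "rate": model}

    def predict(self, x: Features) -> Dict[str, object]:
        if len(x) != 8:
            raise ValueError(f"expected 8 input parameters, got {len(x)}")
        regime = self.classify(x)
        models = self.regressors[regime]
        return {
            "regime": regime,
            "diameter_um": models["diameter"](x),
            "rate_hz": models["rate"](x),
        }


# Usage with toy stand-in models (fixed outputs, not trained networks):
predictor = RegimeRoutedPredictor(
    classify=lambda x: "jetting" if x[6] > 0.1 else "dripping",
    regressors={
        "dripping": {"diameter": lambda x: 120.0, "rate": lambda x: 80.0},
        "jetting": {"diameter": lambda x: 60.0, "rate": lambda x: 300.0},
    },
)
print(predictor.predict([1.0] * 6 + [0.05, 4.0]))
```

Keeping the classifier separate from the regressors lets each regressor specialize in one regime, where the dependence of diameter and rate on the inputs differs.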
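
The conservation-of-mass redundancy relies on $Q_d = \frac{\pi}{6} D^3 F$, where $Q_d$ is the dispersed-phase flow rate. A minimal sketch of the inference, assuming $Q_d$ is given in µL/hr (a common syringe-pump unit); the unit choice and the function names are assumptions, not taken from the DAFD code:

```python
import math

UM3_PER_UL = 1e9  # 1 µL = 1 mm^3 = 1e9 µm^3 (assumed input unit: µL/hr)


def inferred_diameter_um(qd_ul_per_hr: float, rate_hz: float) -> float:
    """Diameter implied by Q_d = (pi/6) * D^3 * F, solved for D."""
    if qd_ul_per_hr <= 0 or rate_hz <= 0:
        raise ValueError("flow rate and generation rate must be positive")
    qd_um3_per_s = qd_ul_per_hr * UM3_PER_UL / 3600.0
    return (6.0 * qd_um3_per_s / (math.pi * rate_hz)) ** (1.0 / 3.0)


def implied_rate_hz(qd_ul_per_hr: float, diameter_um: float) -> float:
    """Generation rate implied by the same relation, solved for F."""
    qd_um3_per_s = qd_ul_per_hr * UM3_PER_UL / 3600.0
    return qd_um3_per_s / (math.pi / 6.0 * diameter_um ** 3)


qd = 188.5  # µL/hr, hypothetical operating point
f_pred = implied_rate_hz(qd, 100.0)  # rate that a 100 µm droplet implies here
print(f"{f_pred:.1f} Hz")
print(f"{inferred_diameter_um(qd, f_pred):.1f} um")
```

Comparing `inferred_diameter_um(qd, f_pred)` with the diameter network's own prediction gives the cross-check described above: a large gap flags a design region where at least one model is unreliable.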
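
The freeze-and-fine-tune step listed under "Transfer learning" can be illustrated with a deliberately simplified stand-in: a one-hidden-layer NumPy network whose hidden layer stays frozen while only the linear output layer is refit, here in closed form by ridge regression rather than by gradient descent. The paper fine-tuned later layers of multi-layer Keras networks; the architecture, ridge penalty, and synthetic data below are assumptions for illustration only.

```python
import numpy as np


def relu(z: np.ndarray) -> np.ndarray:
    return np.maximum(z, 0.0)


def hidden_features(X, W1, b1):
    """Activations of the frozen, pre-trained hidden layer."""
    return relu(X @ W1 + b1)


def fine_tune_output_layer(X_new, y_new, W1, b1, ridge=1e-6):
    """Refit only the output weights and bias on a small new-fluid dataset.

    W1 and b1 are never modified (frozen). The linear output layer is solved
    in closed form with a small ridge penalty, which keeps the system well
    posed when samples are few or some hidden units are rarely active.
    """
    H = hidden_features(X_new, W1, b1)
    H1 = np.hstack([H, np.ones((H.shape[0], 1))])  # append a bias column
    A = H1.T @ H1 + ridge * np.eye(H1.shape[1])
    w = np.linalg.solve(A, H1.T @ y_new)
    return w[:-1], w[-1]


def predict(X, W1, b1, w2, b2):
    return hidden_features(X, W1, b1) @ w2 + b2


rng = np.random.default_rng(0)
W1, b1 = rng.normal(size=(8, 16)), rng.normal(size=16)  # stand-in "pre-trained" layer
X_new = rng.normal(size=(36, 8))                         # e.g. 36 new-fluid points
w_true, b_true = rng.normal(size=16), 0.5                # synthetic ground-truth head
y_new = predict(X_new, W1, b1, w_true, b_true)
w2, b2 = fine_tune_output_layer(X_new, y_new, W1, b1)
print("max abs training error:", np.abs(predict(X_new, W1, b1, w2, b2) - y_new).max())
```

Refitting only the head leaves 17 free parameters here, which is why this style of adaptation can cope with tens of points where training every weight from scratch tends to overfit.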
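
The perturbation search under "Design automation algorithm" can be sketched as a greedy local search. This sketch assumes a hypothetical `predict(x)` that returns `(D_pred, F_pred, D_inf)`, stops as soon as no ±step neighbour lowers the cost (a simplification of "convergence within model tolerance"), and uses a toy linear model in place of the trained networks:

```python
from typing import Callable, List, Sequence, Tuple

# Hypothetical interface: predict(x) -> (D_pred, F_pred, D_inf).
Prediction = Tuple[float, float, float]


def cost(pred: Prediction, d_des: float, f_des: float) -> float:
    """C(x) = |D_des - D_pred| + |F_des - F_pred| + |D_des - D_inf|."""
    d_pred, f_pred, d_inf = pred
    return abs(d_des - d_pred) + abs(f_des - f_pred) + abs(d_des - d_inf)


def design_search(x0: Sequence[float], steps: Sequence[float],
                  predict: Callable[[List[float]], Prediction],
                  d_des: float, f_des: float,
                  max_iter: int = 1000) -> Tuple[List[float], float]:
    """Greedy local search: try +/- one step on each parameter, keep the best."""
    x = list(x0)
    best = cost(predict(x), d_des, f_des)
    for _ in range(max_iter):
        candidates = []
        for i, step in enumerate(steps):      # 8 parameters -> 16 candidates
            for sign in (1.0, -1.0):
                cand = list(x)
                cand[i] += sign * step
                candidates.append(cand)
        scored = [(cost(predict(c), d_des, f_des), c) for c in candidates]
        c_best, x_best = min(scored, key=lambda t: t[0])
        if c_best >= best:                    # no neighbour improves: stop
            break
        x, best = x_best, c_best
    return x, best


def toy_predict(x: List[float]) -> Prediction:
    """Toy linear 'model' in which only the first two parameters matter."""
    d = 10.0 * x[0]
    return (d, 5.0 * x[1], d)


x_opt, c_opt = design_search([0.0] * 8, [1.0] * 8, toy_predict, d_des=50.0, f_des=20.0)
print(x_opt[:2], c_opt)
```

The three terms are summed without weights, exactly as in the cost function; the third term rewards designs whose rate prediction is consistent with the target diameter.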
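
Total-effect Sobol indices, as used in the tolerance and sensitivity analysis, can be estimated with Jansen's estimator. The sketch below samples each parameter uniformly within its tolerance band and, for brevity, uses pseudorandom sampling in place of the quasi-Monte Carlo sequences described above; the function name and the toy response are illustrative assumptions.

```python
import numpy as np


def sobol_total_effects(f, lower, upper, n=20000, seed=0):
    """Jansen estimator of Sobol total-effect indices for independent uniform inputs.

    f maps an (n, k) array of parameter samples to an (n,) array of outputs.
    Parameter i is sampled uniformly in [lower[i], upper[i]], e.g. nominal +/- tolerance.
    """
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    k = lower.size
    rng = np.random.default_rng(seed)
    A = lower + (upper - lower) * rng.random((n, k))
    B = lower + (upper - lower) * rng.random((n, k))
    fA, fB = f(A), f(B)
    var = np.var(np.concatenate([fA, fB]))  # total output variance
    st = np.empty(k)
    for i in range(k):
        ABi = A.copy()
        ABi[:, i] = B[:, i]                 # resample only parameter i
        st[i] = np.mean((fA - f(ABi)) ** 2) / (2.0 * var)
    return st


# Toy response: parameter 1 matters twice as much as parameter 0; parameter 2 not at all.
st = sobol_total_effects(lambda X: X[:, 0] + 2.0 * X[:, 1], [0, 0, 0], [1, 1, 1])
print(np.round(st, 2))  # analytic values for this toy: 0.2, 0.8, 0.0
```

A parameter with a large total-effect index is the one whose fabrication or pump tolerance most needs tightening, or compensating with a flow-rate adjustment.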
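
The Poisson loading calculation from the single-cell encapsulation case study reduces to a few lines: the mean droplet volume is $Q_d / F$, the required concentration is the target mean occupancy $\lambda$ divided by that volume, and the Poisson distribution gives the fraction of droplets holding $k$ cells. A sketch, assuming flow rates in µL/hr and a hypothetical target $\lambda = 0.1$ chosen only for illustration:

```python
import math

SECONDS_PER_HOUR = 3600.0


def droplet_volume_ul(qd_ul_per_hr: float, rate_hz: float) -> float:
    """Mean droplet volume: dispersed flow rate divided by generation rate."""
    return qd_ul_per_hr / SECONDS_PER_HOUR / rate_hz


def required_concentration_per_ul(mean_occupancy: float,
                                  qd_ul_per_hr: float, rate_hz: float) -> float:
    """Cell concentration giving the target mean number of cells per droplet."""
    return mean_occupancy / droplet_volume_ul(qd_ul_per_hr, rate_hz)


def poisson_pmf(k: int, lam: float) -> float:
    """Probability that a droplet contains exactly k cells."""
    return lam ** k * math.exp(-lam) / math.factorial(k)


lam = 0.1  # hypothetical target mean occupancy
conc = required_concentration_per_ul(lam, qd_ul_per_hr=36.0, rate_hz=100.0)
print(f"{conc:.1f} cells/uL")
for k in range(3):
    print(k, round(poisson_pmf(k, lam), 4))
```

Because the volume comes from the predicted rate, any error in $F$ propagates directly into the computed concentration.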

- Dataset and regimes: 998 experimental points across 43 geometries, with droplet diameters of 27.5–460 µm, generation rates of 0.47–818 Hz, and 561 dripping and 437 jetting points. Models were trained on the bounded set of 888 points.

- Regime classification: Accuracy 95.1 ± 1.5% on held-out 20% test sets (N=10 splits).

- Performance prediction (20% test sets, mean ± SD over 10 runs):
  - Dripping diameter: R² = 0.893 ± 0.029; RMSE 13.1 ± 1.6 µm; MAPE 11.2 ± 1.3%; MAE 9.9 ± 1.2 µm.
  - Jetting diameter: R² = 0.966 ± 0.010; RMSE 8.2 ± 1.3 µm; MAPE 4.8 ± 0.5%; MAE 5.9 ± 0.8 µm.
  - Dripping rate: R² = 0.889 ± 0.026; RMSE 31.7 ± 5.8 Hz; MAPE 33.5 ± 4.2%; MAE 19.6 ± 2.7 Hz.
  - Jetting rate: R² = 0.956 ± 0.009; RMSE 21.9 ± 2.8 Hz; MAPE 15.8 ± 2.9%; MAE 15.4 ± 2.1 Hz.

- Consistency with conservation of mass: Percentage errors for rate were approximately triple those for diameter, consistent with F ∼ D⁻³.

- Comparison to scaling laws: Previously proposed scaling laws underperformed relative to neural networks for both diameter and rate prediction.

- Unseen conditions: For six new flow conditions, regime prediction was 100% accurate; diameter MAE was 5.41 µm (MAPE 7.01%) and rate MAE was 38.1 Hz (MAPE 24.2%).

- Data reduction: Approximately 250–300 informative points for dripping and 200–250 for jetting (about 500 in total) achieved accuracy similar to training on the full bounded set (710 training points).

- Neural Optimizer: AutoML-trained models achieved accuracy comparable to manually optimized networks on the large dataset.

- Transfer learning: Training from scratch on small datasets overfit and generalized poorly; fine-tuning pre-trained models markedly improved test accuracy for LB media (36 points, two regimes) and light mineral oil (18 points, one regime), with better performance when the new fluid's properties were closer to those in the original dataset (e.g., LB media, which is close to DI water).

- Design automation validation: Specifying only diameter (25–200 µm) yielded MAE 4.3 µm (MAPE 5.0%), max deviation 12.3 µm. Joint diameter–rate targets: for 100 µm, MAE 4.44 µm (4.4%) and 33 Hz (12.2%); for 75 µm, 4.36 µm (5.8%) and 33 Hz (14.4%); for 50 µm, 3.92 µm (7.8%) and 41.3 Hz (38.8%), with the largest error at extremely low flow rates. Excluding this extreme, overall MAE 3.7 µm (4.2%) and 32.5 Hz (11.5%).

- Tolerance analysis: Sobol sensitivity identified design- and objective-specific principal parameters; predicted performance vs. flow-rate curves support in-situ correction for fabrication and pump tolerances.

- Case study: Constrained design targeting 50 µm and 150 Hz achieved 46.3 µm at 167 Hz; observed single- and double-bead encapsulation closely followed Poisson expectations at a computed input concentration of 922.8 beads/µl.
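
The four reported metrics and the mean ± SD aggregation over repeated random splits can be computed as below. This is a sketch under stated assumptions: the sample standard deviation (`ddof=1`) and rounding the test-set size up are guesses about conventions the summary does not specify. With that rounding, an 80/20 split of the 888 bounded points gives 710 training points, matching the count quoted for the data-reduction study.

```python
import numpy as np


def regression_metrics(y_true, y_pred):
    """R^2, RMSE, MAPE (%) and MAE for one held-out test set."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true
    ss_res = np.sum(err ** 2)
    ss_tot = np.sum((y_true - y_true.mean()) ** 2)
    return {
        "R2": float(1.0 - ss_res / ss_tot),
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAPE": float(100.0 * np.mean(np.abs(err) / np.abs(y_true))),
        "MAE": float(np.mean(np.abs(err))),
    }


def random_split(n, test_frac=0.2, seed=None):
    """Shuffle indices and hold out ceil(test_frac * n) of them for testing."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(n)
    n_test = int(np.ceil(test_frac * n))
    return idx[n_test:], idx[:n_test]


def summarize_runs(runs):
    """Mean and sample standard deviation of each metric over repeated splits."""
    return {k: (float(np.mean([r[k] for r in runs])),
                float(np.std([r[k] for r in runs], ddof=1)))
            for k in runs[0]}


m = regression_metrics([100.0, 200.0], [110.0, 190.0])
train_idx, test_idx = random_split(888, seed=0)
print(m, len(train_idx), len(test_idx))
```

In the full procedure, `random_split` would be called with 10 different seeds, a model trained and scored on each split, and `summarize_runs` applied to the 10 metric dictionaries.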
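
The roughly threefold ratio noted under "Consistency with conservation of mass" follows from differentiating the relation $Q_d = \frac{\pi}{6} D^3 F$ at a fixed dispersed-phase flow rate $Q_d$:

```latex
F = \frac{6\,Q_d}{\pi D^3}
\quad\Longrightarrow\quad
\ln F = \ln\frac{6\,Q_d}{\pi} - 3\ln D
\quad\Longrightarrow\quad
\frac{\delta F}{F} \approx -3\,\frac{\delta D}{D}
```

The reported MAPEs give ratios of 33.5/11.2 ≈ 3.0 for dripping and 15.8/4.8 ≈ 3.3 for jetting. Because diameter and rate are predicted by separate networks, the correspondence is only approximate.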

The study demonstrates that data-driven models can accurately capture the complex, multi-parameter dynamics of flow-focusing droplet generation, addressing a key barrier to the broader adoption of droplet microfluidics. High predictive accuracy across regimes and generalization to unseen conditions enable DAFD to translate target performance into actionable designs, reducing iterative experimentation. The redundancy introduced by predicting both diameter and rate, and by enforcing consistency through an inferred diameter, strengthens robustness and allows design-automation accuracy to exceed that of any single predictor. By quantifying the effects of fabrication and pumping tolerances and providing flow-rate adjustment guidance, the tool supports reliable operation. The extension pathways (AutoML for large datasets and transfer learning for small datasets) allow the community to adapt the framework to new fluids, enhancing generalizability and reducing data requirements when baseline models exist. Compared to analytical scaling laws, the ML approach provides superior accuracy while retaining physical plausibility consistent with conservation laws. Collectively, these advances lower expertise and resource barriers and enable integration with broader microfluidic CAD workflows.

The authors developed DAFD, an open-source, web-based design automation platform that leverages machine learning to predict and deliver user-specified droplet diameters and generation rates for flow-focusing devices with high accuracy. Using a 998-point experimental dataset, neural networks achieved strong performance for regime classification and regression, outperformed existing scaling laws, generalized to unseen conditions, and enabled iterative design to meet targets. Community-extensible mechanisms via Neural Optimizer and transfer learning demonstrated that accurate models can be trained on new fluid combinations, even with limited data. Additional capabilities include tolerance-aware performance prediction and automated single-cell encapsulation design. Future work includes expanding supported fluid systems through community datasets, integrating with end-to-end microfluidic CAD and behavioral models for droplet routing, and applying the framework to other microfluidic and non-microfluidic components to achieve broader design automation of complex physical phenomena.

- Current base models were trained on a single fluid pair (DI water with NF 350 mineral oil + 5% Span 80); transfer learning requires more data as fluid properties diverge (e.g., light mineral oil vs NF 350).

- Generation-rate predictions are inherently more sensitive to error than diameter predictions (per conservation of mass, F ∝ D⁻³ at a fixed dispersed flow rate), and errors can be higher at extreme operating points, especially at very low flow rates that are susceptible to experimental artifacts (syringe/tubing compliance, pump accuracy).

- The bounded training range (25–250 µm; 5–500 Hz) excludes larger/smaller droplets and very low/high rates where data were sparse; predictions outside this range are not supported.

- Fabrication and testing variabilities (e.g., surface chemistry, minor geometric deviations) may affect performance and require flow-rate adjustments despite tolerance guidance.

- The models rely on standardized parameter normalization and dataset formatting; deviations may affect transferability without re-normalization/retuning.
