Effectiveness of transfer learning for enhancing tumor classification with a convolutional neural network on frozen sections

Y. Kim, S. Kim, et al.

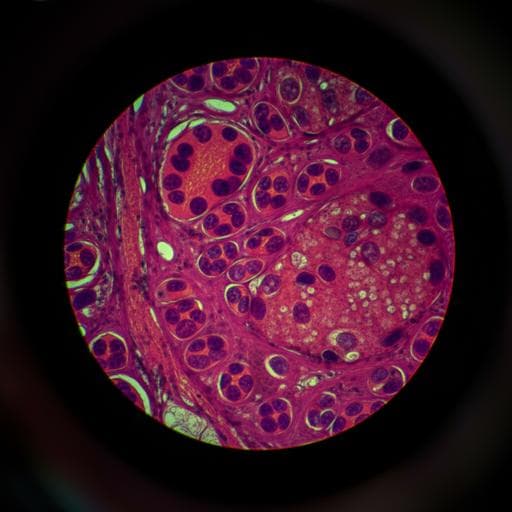

The study addresses whether transfer learning from large public datasets can enhance tumor classification performance on intraoperative frozen-section sentinel lymph node whole-slide images (WSIs) when labeled data are limited. Accurate intraoperative metastasis confirmation is time-critical yet challenging due to frozen-section artifacts and the scarcity of expert-labeled data. Convolutional neural networks (CNNs) typically require large labeled datasets; transfer learning can mitigate data scarcity by initializing models with representations learned from related domains. The authors evaluate transfer learning from ImageNet (natural images) and CAMELYON16 (FFPE pathology WSIs for metastasis detection) versus training from scratch, with the goal of improving patch- and slide-level tumor classification on frozen-section WSIs and validating generalization on an external dataset.

CNN-based computer-aided diagnosis has achieved expert-level performance in multiple medical imaging tasks (e.g., radiography, dermatology, ophthalmology). In pathology, deep learning has been applied to mitosis detection, breast cancer metastasis detection, and segmentation of colon glands and breast cancer regions, as well as lung cancer subtyping. Transfer learning from ImageNet is widely used in histopathology to accelerate convergence and improve performance with limited labels, including in the CAMELYON challenges where ImageNet-pretrained backbones are common. However, prior studies had not specifically validated transfer learning from a domain-specific pathology dataset (CAMELYON16 FFPE) to the frozen-section domain for metastasis classification. This work fills that gap by systematically comparing scratch, ImageNet-pretrained, and CAMELYON16-pretrained initializations across varying training data sizes.

Study design: Three initializations were compared: (1) scratch (random weights), (2) ImageNet-pretrained, and (3) CAMELYON16-pretrained. Models were trained on varying fractions of the AMC training set: 2%, 4%, 8%, 20%, 40%, and 100%, corresponding to 3, 6, 12, 25, 50, and 157 WSIs, respectively. On AMC, performance was evaluated at both the patch and slide levels. On the external SNUBH dataset, it was evaluated at the slide level only. Metrics were sensitivity, specificity, accuracy (at a threshold of 0.5 for patch-level metrics), and AUC. AUCs were compared statistically with the Hanley & McNeil method in MedCalc.

Data: The AMC frozen-section set comprises 297 sentinel lymph node WSIs scanned on a Pannoramic 250 FLASH (MIRAX .mrxs format), split into 157 training, 40 validation, and 100 test slides. The SNUBH frozen-section set comprises 228 slides scanned on a Pannoramic 250 FLASH II and was used solely for external validation. The CAMELYON16 FFPE dataset provided 270 training WSIs, split into 216 training and 54 validation slides, to train the CAMELYON16-based pretrained model. Demographics and class distributions are reported. For example, the AMC training set is all female, and 43.3% of AMC training slides contain metastases larger than 2 mm.

Reference standard: At AMC, one rater manually segmented the WSIs, and two expert breast pathologists (6 and 20 years of experience) confirmed the segmentations. At SNUBH, one rater annotated the slides and an expert (15 years of experience) confirmed the annotations. Regions of metastatic carcinoma larger than 200 µm in greatest dimension were annotated.

Patch extraction: Tissue masks were computed by Otsu thresholding of the combined H and S channels. Random 448×448-pixel patches were then sampled within tissue at level 0 (full resolution), each covering roughly 100 × 100 µm. Tumor patches contained >80% tumor, and non-tumor patches contained 0% tumor. The AMC training sets for the six ratios contained 12K, 25K, 52K, 101K, 199K, and 519K patches. The AMC validation and test sets contained 92K and 27K patches, respectively, at a tumor:non-tumor ratio of ~1:1.2. CAMELYON16 yielded 330K training and 88K validation patches at the same ~1:1.2 ratio.

Model and training: Inception v3 (48 layers) was used for classification.
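The tissue-mask and patch-labeling rules of the patch-extraction step can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code. It assumes "H and S" are the hue and saturation channels of HSV and that they are combined by simple summation, since the exact combination is not specified here. The function names are hypothetical, and WSI reading, annotation rasterization, and random patch sampling are omitted.

```python
import numpy as np


def rgb_to_hue_sat(rgb):
    """Hue and saturation (both in [0, 1]) of an (H, W, 3) uint8 RGB image, as in HSV."""
    x = rgb.astype(np.float64) / 255.0
    r, g, b = x[..., 0], x[..., 1], x[..., 2]
    cmax, cmin = x.max(axis=-1), x.min(axis=-1)
    delta = cmax - cmin
    sat = np.where(cmax > 0, delta / np.where(cmax > 0, cmax, 1.0), 0.0)
    d = np.where(delta > 0, delta, 1.0)  # avoid division by zero for gray pixels
    hue = np.where(cmax == b, (r - g) / d + 4.0, 0.0)
    hue = np.where(cmax == g, (b - r) / d + 2.0, hue)
    hue = np.where(cmax == r, ((g - b) / d) % 6.0, hue)
    return np.where(delta > 0, hue / 6.0, 0.0), sat  # gray/white pixels get hue 0


def otsu_threshold(values, bins=256):
    """Threshold that maximizes the between-class variance of a 1-D sample (Otsu's method)."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(np.float64)  # pixel count of the lower class
    w1 = w0[-1] - w0                         # pixel count of the upper class
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[np.argmax(between) + 1]     # upper edge of the best lower-class bin


def tissue_mask(rgb):
    """Tissue = pixels whose summed hue + saturation exceeds the Otsu threshold (assumption)."""
    hue, sat = rgb_to_hue_sat(rgb)
    combined = hue + sat
    return combined > otsu_threshold(combined.ravel())


def label_patch(tumor_annotation):
    """1 = tumor (>80% annotated tumor), 0 = non-tumor (0% tumor), None = patch discarded."""
    fraction = float(np.mean(tumor_annotation))
    if fraction > 0.8:
        return 1
    if fraction == 0.0:
        return 0
    return None
```

Patches whose tumor fraction falls between 0% and 80% are simply dropped. This is why the resulting model sees only "pure" examples of each class.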
Common training settings across all models: SGD optimizer (learning rate 5e-4, momentum 0.9), binary cross-entropy loss, data augmentation (zoom 0.2, rotation 0.5, width/height shift 0.1, horizontal/vertical flips), dropout 0.5, and stain normalization. The checkpoint with the lowest validation loss was selected.

Slide-level inference: Confidence maps were generated by interpolating per-patch tumor confidences across each WSI at a stride of 320 pixels. A 3×3 Gaussian filter was then applied to reduce noise. The maximum confidence per slide served as the slide-level score for ROC/AUC computation.

Statistical evaluation: Within each training ratio, the AUCs of the scratch and ImageNet-based models were compared against the CAMELYON16-based model using the Hanley & McNeil method. Significance was reported at p<0.05 and p<0.0005.

Additional experiments: A learning-rate sweep (5e-2 to 5e-6) assessed how sensitive AUC is to the learning rate at ratios of 20%, 40%, and 100%.
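The slide-level scoring and AUC computation can be sketched as follows. This is a minimal illustration, assuming the per-patch confidences are already arranged on a grid with one cell per 320-pixel stride, so the interpolation step is skipped. It also assumes the 3×3 Gaussian is approximated by the binomial kernel [1, 2, 1]ᵀ[1, 2, 1]/16 with edge padding at the borders, because the paper's sigma is not given here. All names are hypothetical. The AUC uses its rank (Mann-Whitney) interpretation, which equals the area under the empirical ROC curve.

```python
import numpy as np

# 3x3 binomial kernel: a common discrete approximation of a Gaussian (assumed sigma).
KERNEL = np.outer([1.0, 2.0, 1.0], [1.0, 2.0, 1.0]) / 16.0


def smooth_3x3(conf_map):
    """Filter a 2-D confidence map with KERNEL, using edge padding to keep its shape."""
    conf_map = np.asarray(conf_map, dtype=np.float64)
    padded = np.pad(conf_map, 1, mode="edge")
    h, w = conf_map.shape
    out = np.zeros((h, w))
    for dy in range(3):
        for dx in range(3):
            out += KERNEL[dy, dx] * padded[dy:dy + h, dx:dx + w]
    return out


def slide_score(conf_map):
    """Slide-level tumor score: the maximum of the smoothed confidence map."""
    return float(smooth_3x3(conf_map).max())


def roc_auc(scores, labels):
    """AUC = P(random positive slide outscores random negative slide); ties count 1/2."""
    scores = np.asarray(scores, dtype=np.float64)
    labels = np.asarray(labels, dtype=bool)
    pos, neg = scores[labels], scores[~labels]
    wins = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return float((wins + 0.5 * ties) / (pos.size * neg.size))
```

The smoothing is what makes the maximum tolerable as an aggregator. On a 5×5 map, an isolated patch with confidence 1.0 scores only 0.25 after filtering. A contiguous 3×3 region at 0.9 keeps a score of about 0.9.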

- Training dynamics: Scratch models failed to learn with ≤8% of the training data; validation loss and accuracy stayed flat. CAMELYON16-initialized models converged faster, with lower loss and higher accuracy from the first epoch. They also reached better final validation metrics than scratch models at ratios ≤40%. With 100% of the data, ImageNet- and CAMELYON16-initialized models showed similar training curves, and both outperformed scratch.

- AMC patch-level AUCs of the CAMELYON16-based model: 2% 0.843, 4% 0.881, 8% 0.895, 20% 0.912, 40% 0.929, 100% 0.944. CAMELYON16 pretraining significantly outperformed scratch and ImageNet initialization at every ratio. The one exception was ImageNet at 100%, where the two were comparable (0.943 vs 0.944).

- AMC slide-level AUCs of the CAMELYON16-based model: 2% 0.814, 4% 0.874, 8% 0.873, 20% 0.867, 40% 0.878, 100% 0.886. These were significantly higher than scratch and ImageNet at every ratio. The exceptions were ImageNet at 40% and 100%, where performance was comparable.

- External SNUBH slide-level AUCs, listed for ratios 2% to 100%: ImageNet-based 0.592, 0.625, 0.667, 0.723, 0.819, 0.798; CAMELYON16-based 0.689, 0.667, 0.695, 0.763, 0.749, 0.804; scratch 0.437–0.540. The CAMELYON16-based model exceeded the ImageNet-based model at every ratio except 40%.

- Grad-CAM examples showed that CAMELYON16-based models localized tumor regions more confidently with limited data. At 4% data, the CAMELYON16-based model classified a tumor patch correctly with a confidence of 0.82. The scratch (0.49) and ImageNet-based (0.36) models both misclassified it.

- Learning-rate sensitivity: learning rates of 5e-4 to 5e-5 yielded the highest AUCs across initializations. Rates ≥5e-3 often diverged. Rates below 5e-5 required more epochs, converged to a higher loss, and reduced AUC.

- Representative AMC patch-level metrics at threshold 0.5: ImageNet 100%: sensitivity 0.660, specificity 0.968, accuracy 0.828, AUC 0.943. CAMELYON16 100%: sensitivity 0.656, specificity 0.969, accuracy 0.827, AUC 0.944.
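Accuracy is a class-size-weighted average of sensitivity and specificity. The representative patch-level metrics can therefore be checked against the ~1:1.2 tumor:non-tumor ratio of the AMC test patches. The short sketch below assumes the ratio is exactly 1:1.2, although the summary reports it as approximate. `accuracy_from_rates` is a hypothetical helper.

```python
def accuracy_from_rates(sensitivity, specificity, n_tumor, n_non_tumor):
    """Accuracy = (TP + TN) / N, i.e. the class-size-weighted mean of sensitivity and specificity."""
    true_pos = sensitivity * n_tumor       # tumor patches correctly flagged
    true_neg = specificity * n_non_tumor   # non-tumor patches correctly cleared
    return (true_pos + true_neg) / (n_tumor + n_non_tumor)


# Assumption: tumor : non-tumor test patches are exactly 1 : 1.2.
for name, sens, spec in [("ImageNet 100%", 0.660, 0.968), ("CAMELYON16 100%", 0.656, 0.969)]:
    print(name, round(accuracy_from_rates(sens, spec, 1.0, 1.2), 3))
```

Under this assumption the helper reproduces the reported accuracies of 0.828 and 0.827. It also shows why accuracy sits closer to specificity than to the modest sensitivity (about 0.66): non-tumor patches are the larger class.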

The findings confirm that transfer learning substantially improves CNN performance for tumor classification on frozen-section WSIs when labeled data are scarce, especially when the source is a domain-specific pathology dataset (CAMELYON16). Under limited data, CAMELYON16 pretraining provided a better feature initialization than ImageNet, yielding higher AUCs and faster convergence. It also enabled successful training where scratch models failed (≤8%). With the full training set, ImageNet and CAMELYON16 pretraining achieved comparably high performance, so the relative benefit of domain-specific pretraining diminishes as in-domain data increase. External validation on SNUBH yielded lower AUCs overall, likely because of domain shift. A notable source is the difference in microns per pixel (MPP), which is 0.24 at AMC vs 0.50 at SNUBH. A patch of the same pixel size therefore covers roughly twice the tissue width in SNUBH, effectively halving the resolution. Stronger resizing augmentation was used to mitigate this gap, and external AUCs generally improved with more training data. These results support using FFPE-trained models to bootstrap frozen-section classifiers. This approach reduces annotation burden and training time while maintaining or improving diagnostic performance, especially in low-data regimes.
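The MPP arithmetic behind the resolution gap can be made concrete with the values above and the 448-pixel patch size. This is a minimal sketch, and the helper names are hypothetical. Resampling SNUBH tiles to the AMC scale appears only as one common alternative for comparison. It is not the authors' approach, which relied on stronger resizing augmentation.

```python
def field_of_view_um(patch_px, mpp):
    """Physical side length (micrometers) covered by a square patch of patch_px pixels."""
    return patch_px * mpp


def matched_patch_px(patch_px, train_mpp, test_mpp):
    """Side length (pixels) a test-site patch needs to cover the same tissue as a training patch."""
    return round(patch_px * train_mpp / test_mpp)


AMC_MPP, SNUBH_MPP, PATCH = 0.24, 0.50, 448
print(field_of_view_um(PATCH, AMC_MPP))             # about 107.5 um per side
print(field_of_view_um(PATCH, SNUBH_MPP))           # 224.0 um per side
print(matched_patch_px(PATCH, AMC_MPP, SNUBH_MPP))  # 215 px, upsampled ~2.08x to reach 448
```

A 448-pixel SNUBH patch thus spans about 224 µm, versus roughly 107.5 µm at AMC. Cells appear at about half the scale the model was trained on.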

Transfer learning from a CAMELYON16-pretrained model enhances CNN-based tumor classification on intraoperative frozen-section sentinel lymph node WSIs, particularly when training data are limited (≤40% of the AMC training set). CAMELYON16-based models achieved significantly higher patch- and slide-level AUCs than scratch-based models at all data ratios. They also surpassed ImageNet-based models in low-data settings and reached parity with them at full data. This demonstrates that domain-relevant pretraining on FFPE pathology images can transfer effectively to frozen-section tasks. Future work should explore slide-level aggregation methods more robust than maximum confidence and domain adaptation to address scanner and MPP differences. Alternative patch selection criteria and multi-scale modeling also merit investigation.

- The patch selection criterion defined tumor patches as >80% tumor and non-tumor patches as 0% tumor. This may produce models tailored to highly pure patches, and different thresholds could change model behavior and generalizability.

- Slide-level classification used the maximum value of the confidence map, a simple aggregator that is sensitive to noise. More robust methods, such as machine-learning classifiers built on local and global features, may improve slide-level performance.

- External validation performance was affected by inter-institutional domain shifts (e.g., MPP differences), indicating a need for explicit domain adaptation.

- Scratch models failed with very small datasets (≤8%), limiting comparison in that regime.
