Deep self-learning enables fast, high-fidelity isotropic resolution restoration for volumetric fluorescence microscopy

K. Ning, B. Lu, et al.

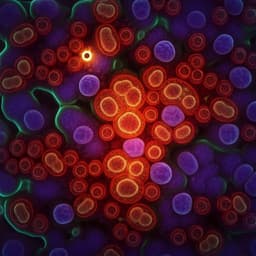

Volumetric fluorescence microscopy typically suffers from anisotropic spatial resolution due to the optical point spread function, where axial resolution is 2–3 times worse than lateral resolution. This anisotropy impairs visualization and analysis of complex 3D biological structures and leads to errors in tasks such as whole-brain single-neuron morphology reconstruction. While sophisticated hardware can achieve isotropy, such systems are complex and not widely applicable. Data-driven deep learning approaches are promising but face challenges: supervised training requires well-registered isotropic ground truth, which is hard to obtain; synthetic training depends on accurate PSF modeling; and unpaired image-to-image translation (e.g., CycleGAN/OT-CycleGAN) can introduce hallucinations and distortions. The research question addressed here is how to restore high-fidelity, isotropic 3D images from standard anisotropic fluorescence microscopy data without paired ground truth, minimizing artifacts and maintaining computational efficiency. The study proposes Self-Net, a self-learning deep method that leverages high-resolution lateral slices within the same dataset to improve axial resolution, aiming to provide fast, accurate, and generalizable isotropic recovery across imaging modalities, including for challenging applications like whole-brain imaging and super-resolution microscopy.

The paper situates its contribution within several strands of prior work: (1) Hardware-based isotropic 3D imaging methods exist but are complex and have limited accessibility. (2) Deep learning has effectively addressed various microscopy limitations; typical supervised strategies (e.g., CARE) require accurately paired data or realistic semi-synthetic data derived from precise PSF estimates, which are difficult to obtain or model. (3) Unpaired learning with CycleGAN enables domain translation without pairs, and OT-CycleGAN has been applied to isotropy restoration; however, training large 3D CycleGANs is memory- and time-intensive and, due to weak cycle-consistency constraints, can yield hallucinations, artifacts, and structural distortions, which are unacceptable for biomedical analysis. This work proposes a self-learning approach that avoids these pitfalls by learning realistic degradation unsupervised and enforcing supervised, pixel-wise constraints for recovery.

Self-Net is a two-stage self-learning framework that operates on a single raw 3D anisotropic stack, treating it as sets of 2D lateral and axial slices.

Stage 1 (unsupervised degradation modeling): Unpaired lateral and axial slices from the same volume are used to train a CycleGAN that learns the transformation from high-resolution lateral images to blurred axial images. Learning the degradation, rather than deblurring directly, avoids hallucinations. Lateral images are downsampled along lateral axes to reduce the resolution gap with axial slices, encouraging the network to learn anisotropic PSF effects. The CycleGAN includes discriminators for the lateral and axial domains and forward/backward generators, and it produces synthetic blurred axial images from downsampled lateral inputs.

Stage 2 (supervised isotropic recovery): The synthetic blurred axial images generated in Stage 1 are paired with their pixel-aligned high-resolution lateral counterparts to train a deblurring/restoration network (DeblurNet) using pixel-wise reconstruction losses, imposing strong constraints to ensure high-fidelity isotropic recovery.

Alternating optimization: Training proceeds with feedback between the two stages: the performance of DeblurNet informs Stage 1 so that it generates more realistic blurred images. After convergence, testing performs isotropic restoration by applying DeblurNet to all axial slices of the original stack.

Validation datasets and protocols:

- (a) Simulated fluorescence beads: isotropic ground truth convolved with a theoretical wide-field 3D PSF (lateral FWHM 305 nm, axial FWHM 1000 nm) to create anisotropic inputs for training and evaluation.

- (b) Synthetic tubular volumes following prior work (ref. 26) for structural fidelity assessment with RMSE/SSIM metrics.

- (c) Semisynthetic neuronal data with known PSF for comparing unsupervised Self-Net to supervised CARE under ideal modeling.

- (d) Real biological datasets acquired on multiple platforms (wide-field, two-photon, confocal, light-sheet) across diverse tissues (mouse liver, kidney, brain vasculature, neurons), with Fourier spectrum and FWHM analyses for isotropy assessment.

- (e) Super-resolution modalities: 3D STED bead imaging under different depletion power allocations, with Richardson–Lucy deconvolution (5 iterations) as pre-processing, and instant SIM datasets to test applicability.

Computational considerations: Compared to OT-CycleGAN’s large 3D networks, Self-Net uses substantially fewer parameters and achieves faster training and inference on a single GPU (GeForce RTX 3090).
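The slice handling described above can be sketched in a few lines of plain Python. This is a minimal illustration, not the authors' implementation: the `volume[z][y][x]` nested-list layout, the helper names, and the box-average downsampling are all assumptions made for the example. The source only states that lateral and axial slices are taken from the same stack and that lateral slices are downsampled along lateral axes.

```python
# Illustrative sketch only: the volume[z][y][x] layout, the helper names, and
# the box-average downsampling are assumptions, not the authors' code.

def lateral_slices(volume):
    """Return the XY planes of the stack, one per z index."""
    return [plane for plane in volume]


def axial_slices_xz(volume):
    """Return the XZ planes of the stack, one per y index (rows run along z)."""
    nz, ny = len(volume), len(volume[0])
    return [[volume[z][y] for z in range(nz)] for y in range(ny)]


def downsample_rows(image, factor):
    """Average each group of `factor` consecutive rows; leftover rows are dropped."""
    out = []
    for start in range(0, len(image) - factor + 1, factor):
        group = image[start:start + factor]
        out.append([sum(col) / factor for col in zip(*group)])
    return out


# Tiny 4 x 2 x 3 stack whose voxel value encodes its own (z, y, x) index.
volume = [[[100 * z + 10 * y + x for x in range(3)] for y in range(2)]
          for z in range(4)]

xy = lateral_slices(volume)          # 4 slices, each 2 rows (y) x 3 columns (x)
xz = axial_slices_xz(volume)         # 2 slices, each 4 rows (z) x 3 columns (x)
coarse = downsample_rows(xy[0], 2)   # first lateral slice, halved along y
```

In a real pipeline the downsampled lateral slices and the axial slices would form the two unpaired domains for Stage 1; the downsampling factor and interpolation scheme would need to match the acquisition, which this sketch does not attempt.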

- Self-Net accurately restores isotropic resolution from anisotropic volumetric fluorescence data without paired ground truth by leveraging lateral slices as rational targets and combining unsupervised degradation learning with supervised recovery.

- On simulated beads, Self-Net removed axial blur and produced restorations closely matching isotropic ground truth, outperforming OT-CycleGAN which showed shape/intensity deviations.

- On synthetic tubular volumes, Self-Net yielded higher-fidelity reconstructions with notably better image quality metrics (lower RMSE, higher SSIM) compared to OT-CycleGAN.

- Model efficiency: Self-Net has about 10× fewer parameters than OT-CycleGAN and is approximately 20× faster in training and 5× faster in inference (evaluated on a 700-voxel volume, RTX 3090).

- On semisynthetic neuronal data, unsupervised Self-Net achieved image quality comparable to supervised CARE, with similar RMSE/SSIM, indicating effective anisotropy correction without paired data.

- Broad applicability demonstrated on real biological datasets (mouse liver, kidney, brain vasculature, neurons) acquired via wide-field, two-photon, confocal, and light-sheet microscopes, with clear axial resolution improvements and enhanced visualization of fine structures (e.g., glomerular tufts, dendritic spines). Fourier spectrum and FWHM analyses confirmed improved isotropy.

- Robustness to depth-related issues: Self-Net handled signal attenuation and depth-varying PSF to improve isotropy (as shown in supplementary analyses).

- Super-resolution STED: With 100% Z-depletion (Z-STED), resolutions were 97.3 ± 5.7 nm (lateral) and 82.0 ± 5.1 nm (axial). With 70% XY / 30% Z depletion, resolutions were 49.8 ± 2.3 nm (lateral) and 133.2 ± 6.7 nm (axial). After Self-Net processing, nearly isotropic resolutions were achieved: 49.6 ± 1.4 nm (lateral) and 58.7 ± 2.5 nm (axial), corresponding to ~2× lateral and ~1.4× axial improvements over conventional Z-STED.

- Self-Net enabled isotropic whole-brain imaging at 0.2 × 0.2 × 0.2 µm³ voxel size (DeepIsoBrain), facilitating accurate and efficient single-neuron morphology reconstruction.
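The improvement factors quoted for STED in the list above can be checked with simple arithmetic on the reported mean resolutions. The sketch below only re-computes ratios from those numbers; the dictionary and function names are hypothetical, and the axial-to-lateral ratio is used here as an informal measure of anisotropy rather than a metric defined in the source.

```python
# Mean resolutions in nm, copied from the STED result above.
z_sted = {"lateral": 97.3, "axial": 82.0}      # 100% Z depletion
mixed = {"lateral": 49.8, "axial": 133.2}      # 70% XY / 30% Z depletion
self_net = {"lateral": 49.6, "axial": 58.7}    # after Self-Net processing


def anisotropy(res):
    """Axial-to-lateral resolution ratio; 1.0 would be perfectly isotropic."""
    return res["axial"] / res["lateral"]


def improvement(before, after):
    """Per-axis factor by which the resolution value shrinks (larger is better)."""
    return {axis: before[axis] / after[axis] for axis in before}


gain = improvement(z_sted, self_net)

print(f"lateral gain over Z-STED: {gain['lateral']:.2f}x")
print(f"axial gain over Z-STED:   {gain['axial']:.2f}x")
print(f"anisotropy, 70/30 split:  {anisotropy(mixed):.2f}")
print(f"anisotropy, Self-Net:     {anisotropy(self_net):.2f}")
```

The two gains reproduce the quoted ~2× lateral and ~1.4× axial figures, and the axial-to-lateral ratio drops from roughly 2.7 for the 70/30 depletion split to about 1.2 after Self-Net.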

The study addresses the core challenge of anisotropic resolution in 3D fluorescence microscopy by reframing the learning problem: instead of directly translating anisotropic to isotropic images (which risks hallucinations), Self-Net first learns realistic anisotropic degradation unsupervised and then enforces supervised, pixel-wise constraints for recovery. This design yields high-fidelity results that preserve biological structures, directly tackling the need for accuracy in applications like neuron tracing where false links arise from poor axial resolution. Performance gains over OT-CycleGAN (fewer artifacts, higher fidelity, far smaller/faster models) demonstrate the advantage of the two-stage self-learning strategy. Comparable performance to CARE without paired data shows that the method can bypass the practical bottleneck of acquiring registered isotropic ground truth or perfect PSF models. Validations across diverse tissues and imaging modalities, including super-resolution (STED, iSIM), highlight generalizability. The ability to achieve isotropic whole-brain imaging at submicron voxels is particularly impactful for morphology-based neuroscience, reducing reconstruction errors and improving downstream analyses.

Self-Net introduces a general, fast, and high-fidelity self-learning framework for isotropic restoration of volumetric fluorescence microscopy, operating without paired training data. By combining unsupervised modeling of anisotropic degradation with supervised isotropic recovery, it suppresses hallucinations and achieves superior accuracy and efficiency compared to prior unpaired methods, while matching supervised baselines under ideal conditions. The approach generalizes across wide-field, laser-scanning, light-sheet, and super-resolution modalities and enables isotropic whole-brain imaging at 0.2 µm isotropic voxels, substantially improving single-neuron reconstruction. Future work could target handling even more severe anisotropy (>4× lateral–axial gaps), improving robustness to complex, depth-varying PSFs and signal attenuation, integrating adaptive or physics-informed degradation models, and expanding validation across additional specimen types and imaging systems.

Like other deep-learning approaches, Self-Net performs worse as resolution anisotropy increases; the deterioration becomes particularly evident when the lateral–axial resolution gap exceeds approximately 4×. The method relies on lateral slices of sufficient quality within the same dataset and assumes that the degradation from lateral to axial views can be learned accurately; extreme noise, severe attenuation, or highly nonstationary PSFs may challenge this modeling.
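As a rough way to relate a dataset to the approximately 4× gap mentioned above, the axial-to-lateral FWHM ratio can be computed directly. The sketch below is illustrative only: the function names are hypothetical, the 4.0 cutoff is treated as a soft rule of thumb rather than a hard limit from the source, and the FWHM-to-sigma conversion assumes a Gaussian approximation of the PSF, which the source does not claim.

```python
import math

# For a Gaussian profile, FWHM = 2 * sqrt(2 * ln 2) * sigma.
FWHM_TO_SIGMA = 1.0 / (2.0 * math.sqrt(2.0 * math.log(2.0)))


def gaussian_sigma(fwhm):
    """Standard deviation of a Gaussian with the given FWHM (same units)."""
    return fwhm * FWHM_TO_SIGMA


def resolution_gap(lateral_fwhm, axial_fwhm):
    """Ratio of axial to lateral FWHM; 1.0 means isotropic resolution."""
    return axial_fwhm / lateral_fwhm


def within_reported_range(lateral_fwhm, axial_fwhm, limit=4.0):
    """True if the gap does not exceed the (assumed, soft) 4x rule of thumb."""
    return resolution_gap(lateral_fwhm, axial_fwhm) <= limit


# Simulated bead PSF from the validation protocol: 305 nm lateral, 1000 nm axial.
gap = resolution_gap(305.0, 1000.0)
print(f"gap: {gap:.2f}x, within range: {within_reported_range(305.0, 1000.0)}")
print(f"Gaussian sigmas (nm): {gaussian_sigma(305.0):.1f}, {gaussian_sigma(1000.0):.1f}")
```

For the simulated bead PSF the gap is about 3.3×, which falls below the approximate 4× threshold.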
