
Cumulative learning enables convolutional neural network representations for small mass spectrometry data classification

K. Seddiki, P. Saudemont, et al.

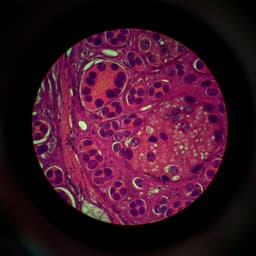

The study addresses the challenge of rapidly and accurately classifying clinical samples (e.g., cancer tissues, infectious microorganisms) from mass spectrometry (MS) data when only small training datasets are available. Conventional machine learning pipelines (e.g., SVM, RF, LDA) require extensive, sensitive preprocessing (alignment, baseline correction, normalization, peak picking, dimensionality reduction), which is laborious, dataset-specific, and can compromise reproducibility and biological validity. Convolutional neural networks (CNNs) can learn directly from raw spectra, reducing the need for preprocessing, but tend to underperform with limited training data, a common constraint in medical applications. The research question is whether transfer learning and a proposed cumulative learning strategy with 1D-CNNs can yield robust, generalizable spectral representations that enable high-accuracy classification across diverse small MS datasets, instruments, and biological contexts. The purpose is twofold: to evaluate transfer learning from a larger source MS dataset, and to introduce cumulative learning, in which a network is trained sequentially on multiple tasks to accumulate generic MS knowledge and thereby improve accuracy on small, heterogeneous clinical datasets. The importance lies in enabling rapid, preprocessing-free, accurate MS-based diagnostic workflows applicable across platforms and sample types.

Prior work has applied standard ML models (SVM, RF, LDA) to MS data for cancer and pathogen identification, but these require complex, multi-step preprocessing (e.g., intensity transformations, baseline subtraction, alignment, normalization, peak picking, feature selection) with no consensus on optimal methods or parameterization. Preprocessing variability can bias results and limit generalizability across instruments and conditions. Peak shifts, intensity fluctuations, and baseline drift make alignment and normalization difficult; overcorrection can distort peaks, and normalization assumptions may not hold in heterogeneous tissues. CNNs have succeeded at extracting spatially invariant features and at working on raw biosignals, and have shown considerable promise in computer vision and 2D MS imaging; however, transfer learning for 1D-MS spectral data remains underexplored owing to the scarcity of large labeled 1D spectral datasets. A few CNN studies on 1D spectroscopy data (e.g., vibrational, NIR, Raman) exist, but none has demonstrated transfer learning across heterogeneous 1D-MS datasets. This work builds on image-based transfer learning insights (e.g., ImageNet features generalizing to medical imaging) by learning potentially generic 1D-MS representations and extends this with cumulative learning across multiple datasets and tasks.

Datasets: Six independent MS datasets were used.

- Target-domain clinical datasets: (1) SpiderMass canine sarcoma (12 classes: healthy plus 11 sarcoma histologies; 2228 spectra from 33 biopsies; Synapt G2-S Q-TOF; 100–1600 Da; 15,000 features after 0.1 Da binning); (2) SpiderMass microorganisms (five species across yeast, Gram+ and Gram−; 117 spectra; Synapt G2-S Q-TOF; 100–2000 Da; 19,000 features for transfer learning and 15,000 for cumulative learning).

- Source and intermediate datasets: (3) MALDI-MSI rat brain (gray vs white matter; 10,100 spectra; Rapiflex MALDI-TOF; 300–1300 Da; binned to 19,000, 15,000, or 7084 features to match targets); (4) Beef liver (positive and negative modes; 2637 spectra; Synapt G2-S Q-TOF; 100–1600 Da; 15,000 features).

- External validation datasets: (5) Human ovary 1, high-resolution SELDI/QSTAR (healthy vs cancer; 216 spectra; 1–20,000 Da; 7084 features); (6) Human ovary 2, low-resolution SELDI/PBSII (healthy vs cancer; 253 spectra; 700–12,000 Da; 7084 features).

Data processing and splitting: No preprocessing or feature selection was applied for CNNs beyond binning and linear scaling to [0,1]. Datasets were split into training/validation/test sets at 60/20/20 with stratification, within a 5-fold cross-validation framework; results report mean accuracy over 10 independent iterations. Class imbalance was addressed via loss weighting. Pearson and cosine correlations indicated low intra-source correlations for most classes, supporting biopsy-agnostic splitting; the exception was healthy canine spectra, which were highly correlated.

CNN architectures: Three 1D-CNN variants adapted from prominent 2D architectures were evaluated: variant_Lecun (4 layers), variant_LeNet (5 layers), and variant_VGG9 (9 layers). Convolution and pooling operations were adapted to 1D, filter widths were enlarged for spectral features, and zero padding was omitted because spectra have a fixed length. The fully connected (FC) layers ended in a sigmoid (binary) or softmax (multi-class) output. He-normal initialization was used with ReLU/Leaky ReLU activations. Model checkpoints were saved whenever validation accuracy improved.

Training protocols:

- From scratch: CNNs trained directly on target datasets.

- Transfer learning: Train CNNs on rat brain; freeze convolutional (representation) layers; replace and train decision layers (FC + output) on target datasets (canine sarcoma 12-class; microorganisms 3- and 5-class; human ovary 1).

- Cumulative learning Scenario A: Train on rat brain; fine-tune representation on beef liver; freeze representation; train new decision layers on target (canine sarcoma 12-class or human ovary 2).

- Cumulative learning Scenario B: Train on rat brain; fine-tune on beef liver; fine-tune on microorganisms; then freeze all but last conv layer; train new last conv and decision layers on canine sarcoma 12-class.

Freezing strategies were compared; the best results often came from freezing all conv layers and retraining the FC layers, except in Scenario B, where freezing all but the last conv layer was optimal. Additional experiments with heavier binning (feature count 1500) showed deeper networks (variant_VGG9) performing better on more compressed data.

Baselines for comparison: SVM, RF, and LDA were trained on (i) raw binned data and (ii) preprocessed data (log transform; SNIP baseline; TIC normalization; class-wise cubic warping alignment; peak detection via MAD). Dimensionality reduction used chi-square for SVM/RF and PCA before LDA. Hyperparameters were grid-searched (see supplementary) and the best settings were used for evaluation. Metrics included accuracy, sensitivity, specificity, and confusion matrices.
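The training protocols above differ only in the order of datasets and in which layers are frozen at each stage. The following is a minimal sketch of that bookkeeping, not the authors' implementation: no network is actually trained, and the names `Layer`, `run_stage`, `run_schedule`, the dataset labels, and the layer counts are all hypothetical. It also assumes that fresh decision layers are attached at every stage after the first, and that in Scenario B the last conv layer is simply left trainable.

```python
from dataclasses import dataclass, field


@dataclass
class Layer:
    name: str
    kind: str                      # "conv" = representation, "fc" = decision
    trainable: bool = True
    trained_on: list = field(default_factory=list)


def new_decision_layers(n_fc=2):
    """Fresh, untrained decision (FC + output) layers."""
    return [Layer(f"fc{i + 1}", "fc") for i in range(n_fc)]


def new_model(n_conv=3):
    """Hypothetical model: n_conv conv layers followed by decision layers."""
    convs = [Layer(f"conv{i + 1}", "conv") for i in range(n_conv)]
    return convs + new_decision_layers()


def run_stage(model, dataset, freeze):
    """Set trainable flags for one stage and record which layers get updated."""
    convs = [layer for layer in model if layer.kind == "conv"]
    if freeze == "none":
        frozen = []
    elif freeze == "all_conv":
        frozen = convs
    elif freeze == "all_but_last_conv":
        frozen = convs[:-1]
    else:
        raise ValueError(f"unknown freezing strategy: {freeze}")
    frozen_names = {layer.name for layer in frozen}
    for layer in model:
        layer.trainable = layer.name not in frozen_names
        if layer.trainable:
            layer.trained_on.append(dataset)   # stand-in for a real training loop


def run_schedule(schedule):
    """Run stages in order, keeping the conv layers between stages."""
    model = new_model()
    for i, (dataset, freeze) in enumerate(schedule):
        if i > 0:
            # Assumption: each new task gets new decision layers.
            convs = [layer for layer in model if layer.kind == "conv"]
            model = convs + new_decision_layers()
        run_stage(model, dataset, freeze)
    return model


TRANSFER = [("rat_brain", "none"), ("target", "all_conv")]
SCENARIO_A = [("rat_brain", "none"), ("beef_liver", "none"), ("target", "all_conv")]
SCENARIO_B = [("rat_brain", "none"), ("beef_liver", "none"),
              ("microorganisms", "none"), ("canine_sarcoma_12", "all_but_last_conv")]

if __name__ == "__main__":
    for layer in run_schedule(SCENARIO_B):
        print(layer.name, layer.trainable, layer.trained_on)
```

In a real framework the `trainable` flag would map onto whatever mechanism that framework uses to exclude parameters from gradient updates; the sketch only makes the staging explicit.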

- From-scratch CNN performance on small datasets (Table 1):

- Canine sarcoma (binary healthy vs cancer): variant_Lecun accuracy 0.98 ± 0.00; best among architectures.

- Canine sarcoma (12-class): variant_VGG9 0.96 ± 0.01; others lower; confusion errors spread across classes.

- Microorganisms: 3-class (yeast/Gram+/Gram−): best 0.91 ± 0.03 (variant_Lecun); 5-class: 0.89 ± 0.02 (variant_Lecun). Deeper models overfit.

- Transfer learning from rat brain (Table 2) markedly improved performance:

- Canine sarcoma 12-class: up to 0.93 ± 0.02 (variant_VGG9), gains vs scratch across architectures.

- Microorganisms 3-class: up to 0.99 ± 0.00 (variant_Lecun); 5-class: up to 0.99 ± 0.00 (variant_Lecun). Large accuracy gains relative to scratch.

- Cumulative learning further improved canine sarcoma 12-class (Table 3):

- Scenario A (rat brain → beef liver → canine sarcoma): up to 0.95 ± 0.01 (variant_LeNet), improving over transfer learning.

- Scenario B (rat brain → beef liver → microorganisms → canine sarcoma): best 0.99 ± 0.00 (variant_LeNet), with incremental gains over Scenario A and transfer learning, despite many classes and dataset heterogeneity.

- Best freezing strategy generally: freeze all conv layers and retrain FC; Scenario B was optimal with all but the last conv layer frozen.

- Generalizability of cumulative representation: The final Scenario B representation (variant_LeNet) tested back on rat brain, beef liver, and microorganisms retained 0.98–0.99 accuracy, indicating no loss of generalization and suggesting a generic MS representation.

- External human ovary datasets (Table 4) with variant_LeNet:

- Ovary 1 (high-res SELDI): scratch 0.78 ± 0.02; transfer 0.98 ± 0.00 (best); cumulative 0.83 ± 0.02.

- Ovary 2 (low-res SELDI): scratch 0.80 ± 0.00; transfer 0.83 ± 0.02; cumulative (Scenario A) 0.99 ± 0.00 (best).

- CNNs vs conventional ML (Table 5):

- On raw data, CNNs decisively outperformed SVM, RF, and LDA across all datasets (e.g., microorganism 3-/5-class 0.99 ± 0.00 vs LDA 0.90 and RF 0.84; canine binary 0.98 vs RF 0.96, SVM 0.77, LDA 0.71).

- On preprocessed data, RF or LDA was sometimes the best conventional method (e.g., canine binary RF 0.96 ± 0.01; ovary 2 LDA 0.96 ± 0.00), yet CNNs still matched or exceeded the best conventional results while avoiding preprocessing.

- Data compression effect: With fewer features (bin size 1 Da, ~1500 features), the deeper architecture (variant_VGG9) surpassed the shallower models on canine sarcoma, indicating that depth helps when input dimensionality is reduced.

- Data augmentation attempts (adding noise/baseline/misalignment) yielded <60% accuracy, suggesting synthetic technical variations are insufficient without modeling biological variability.
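To make the last point concrete, the sketch below shows one plausible way to inject the three kinds of technical variation named above (noise, baseline, misalignment) into a binned spectrum. The source does not specify how the augmentation was implemented, so the function `augment`, its parameters, the Gaussian noise model, the exponentially decaying baseline, and the whole-bin shift are all assumptions made for illustration.

```python
import math
import random


def augment(spectrum, rng, noise_sd=0.01, baseline_max=0.05, max_shift=3):
    """Return a perturbed copy of a binned spectrum (list of intensities).

    Hypothetical perturbations:
      - misalignment: shift by a random whole number of bins, zero-filled
      - baseline: add a decaying exponential with random amplitude
      - noise: add zero-mean Gaussian noise, clipped at zero
    """
    n = len(spectrum)
    shift = rng.randint(-max_shift, max_shift)
    shifted = [spectrum[i - shift] if 0 <= i - shift < n else 0.0
               for i in range(n)]
    amplitude = rng.uniform(0.0, baseline_max)
    augmented = []
    for i, value in enumerate(shifted):
        baseline = amplitude * math.exp(-3.0 * i / n)
        noise = rng.gauss(0.0, noise_sd)
        augmented.append(max(0.0, value + baseline + noise))
    return augmented


if __name__ == "__main__":
    rng = random.Random(0)           # fixed seed for repeatability
    spectrum = [0.0] * 50
    spectrum[20] = 1.0               # a single synthetic peak
    print(augment(spectrum, rng)[15:26])
```

Perturbations of this kind change only how a given spectrum looks technically; they do not create spectra from new biological sources, which is consistent with the explanation offered for the poor accuracy.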

The findings demonstrate that 1D-CNNs can effectively classify raw MS spectra without preprocessing, leveraging convolutional filters and pooling to learn robust local peak patterns and handle intensity variation and misalignment. However, with small datasets, training from scratch is inadequate. Transfer learning from a large, unrelated MS dataset (rat brain MALDI-MSI) provides generic spectral representations that substantially improve accuracy on small target tasks, even across different organisms, ionization methods, instruments, resolutions, and mass ranges. The cumulative learning strategy—sequentially fine-tuning the same representation across multiple intermediate tasks—further accumulates MS knowledge, yielding near-perfect multi-class performance (0.99 accuracy) on a challenging 12-class canine sarcoma task and high performance on low-resolution ovary data. Importantly, the cumulative representation retains high performance on earlier tasks, supporting the existence of a generic, cross-domain MS representation resilient to biological and instrumental variability. Compared to conventional ML, CNNs provide an end-to-end, faster alternative that obviates the need for complex, dataset-specific preprocessing and feature engineering, and they perform well even when standard algorithms require careful alignment and normalization. These advances can enable rapid, robust clinical decision support from raw spectra, including potential in vivo workflows (e.g., SpiderMass), where per-spectrum inference is feasible without batch preprocessing.
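The only input preparation described for the CNNs is binning and linear scaling to [0,1], both of which can be applied to one spectrum at a time. The sketch below illustrates this under stated assumptions: the source gives the mass ranges, bin widths, and resulting feature counts, but not how intensities are combined within a bin or which scaling bounds are used, so summing per bin and per-spectrum min-max scaling are assumptions, and the function names are hypothetical.

```python
def n_bins(mz_min, mz_max, bin_width):
    """Number of features for a mass range at a given bin width."""
    return round((mz_max - mz_min) / bin_width)


def bin_spectrum(peaks, mz_min, mz_max, bin_width):
    """Bin (m/z, intensity) pairs onto a fixed grid.

    Assumption: intensities falling in the same bin are summed.
    """
    n = n_bins(mz_min, mz_max, bin_width)
    binned = [0.0] * n
    for mz, intensity in peaks:
        if mz_min <= mz < mz_max:
            index = min(int((mz - mz_min) / bin_width), n - 1)
            binned[index] += intensity
    return binned


def scale_unit(values):
    """Linear (min-max) scaling of one spectrum to [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)   # flat spectrum: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]


if __name__ == "__main__":
    # Feature counts reported for the datasets:
    print(n_bins(100, 1600, 0.1))    # canine sarcoma, 0.1 Da bins
    print(n_bins(100, 2000, 0.1))    # microorganisms, 0.1 Da bins
    print(n_bins(100, 1600, 1.0))    # heavier binning, 1 Da bins

    # Made-up peak list, for illustration only.
    peaks = [(100.05, 2.0), (100.07, 1.0), (250.33, 6.0)]
    features = scale_unit(bin_spectrum(peaks, 100, 1600, 0.1))
    print(len(features), features[0], features[1503])
```

The three `n_bins` calls reproduce the 15,000, 19,000, and roughly 1500 feature counts quoted for the 0.1 Da and 1 Da settings.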

This work introduces and validates cumulative learning for 1D-CNNs on mass spectrometry data, showing that sequential training across heterogeneous datasets yields a generic spectral representation that enables high-accuracy classification from raw data, even with small sample sizes. Transfer learning already boosts performance across diverse tasks; cumulative learning further improves outcomes, achieving up to 0.99 accuracy in challenging multi-class settings and on low-resolution datasets. The approach reduces dependence on preprocessing, enhances robustness to instrument and biological variability, and compares favorably to conventional ML. Future research will focus on interpreting learned representations to identify discriminative spectral regions, elucidating which MS features transfer across domains (e.g., lipids vs proteins), and extending the methodology to other spectroscopy modalities (e.g., Raman, NMR).

- Small target datasets limit from-scratch CNN training; while transfer and cumulative learning mitigate this, availability of larger labeled datasets would further enhance performance and stability.

- The study does not provide detailed interpretability of the learned features; biological attribution of discriminative m/z regions remains future work.

- The data augmentation strategies tested (technical noise, baseline, misalignment) did not capture biological variability and gave poor results (<60% accuracy), indicating a need for biologically informed augmentation.

- Preprocessing choices for conventional ML baselines may affect their performance; although standard pipelines were used, alternative alignment/normalization or per-class alignment strategies could yield different results.

- Some classes in canine sarcoma have few biopsies, which may limit generalizability across patients despite stratified sampling and reported low intra-source correlations.

- Cross-instrument/domain transfer was explored across selected platforms; broader validation across more instruments and settings would strengthen generality claims.
