
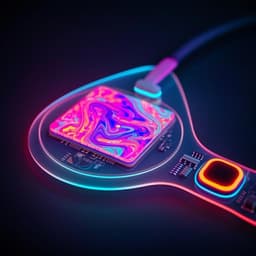

An artificial intelligence-assisted digital microfluidic system for multistate droplet control

K. Guo, Z. Song, et al.

µDropAI is an AI-assisted digital microfluidics framework developed by Kunlun Guo, Zerui Song, Jiale Zhou, Bin Shen, Bingyong Yan, Zhen Guo, and Huifeng Wang. It enables precise multistate droplet control, improves droplet volume accuracy, and is compatible with existing digital microfluidic systems.


Related Publications
