Computer Science

AI-AI bias: Large language models favor communications generated by large language models

W. Laurito, B. Davis, et al.

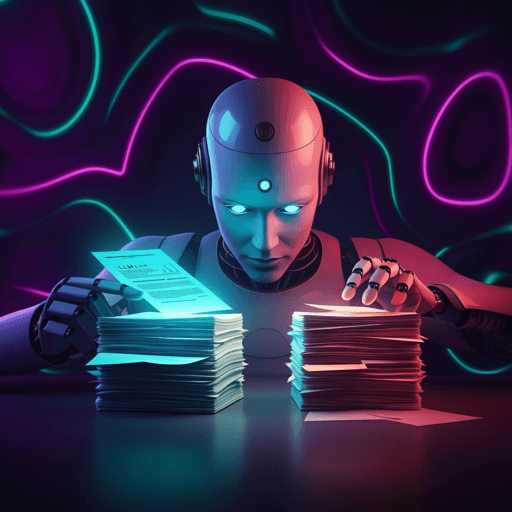

The study investigates whether large language model (LLM)-based AI agents and assistants exhibit implicit identity-based discrimination by favoring items presented in LLM-generated prose over comparable items presented in human-written prose. Motivated by the extensive empirical literature on discrimination in labor markets and academia, the authors adapt classical audit-study designs to contemporary AI decision-making. They test three decision contexts (consumer products, scientific papers via abstracts, and movies via plot summaries) to evaluate whether implicit presenter identity (LLM vs. human), signaled through stylistic correlates rather than explicit labels, influences LLM choices. The authors argue that such preferences could produce downstream harms: a gatekeeping "tax" on humans who cannot access or choose not to use frontier LLM assistance, which would exacerbate digital divides, and the potential marginalization of human agents if autonomous LLM-based agents increasingly transact with other AI systems. They acknowledge multiple possible explanations (e.g., stylistic halo effects) and address a potential confound, namely that LLMs may simply present items more skillfully than humans do, by comparing LLM choices to the blind preferences of human research assistants.

The work draws on audit-style discrimination studies, notably experiments in which otherwise identical applications differ only in identity markers (e.g., Swedish- vs. Arab-sounding names) to reveal taste-based or implicit bias. It connects to the literature on algorithmic bias, discrimination in AI decision-making, and fairness, extending the focus from traditional social categories to potential bias against humans as a class. It notes that while LLM biases have been studied as media artifacts, fewer studies examine LLMs as decision assistants or agents. The design diverges from classical audit studies by relying on implicit rather than explicit identity markers, and the authors cite recent work suggesting that models may recognize and favor their own generations. The discussion situates possible economic impacts within theories of how discrimination persists under market frictions and cumulative disadvantage.

Datasets: Three datasets were constructed. Product dataset: 109 items scraped from an e-commerce site, cleaned, and stored as individual JSON files. Scientific papers dataset: 100 JSON files containing full paper content in XML, plus title; papers were randomly sampled from ScisummNet. Movie dataset: 250 randomly selected film plot summaries (pre-2013), with artifacts removed.

Models and access: Selector and generator models included GPT-4-1106-preview, Llama-3.1-70B-Instruct-Turbo, Mixtral-8x22B-Instruct-v0, Qwen2.5-72B-Instruct-Turbo, and GPT-3.5 variants (GPT-3.5-turbo-0125 for products and movies; GPT-3.5-turbo-1106 for papers due to context). Models were accessed via the OpenAI and Together AI APIs.

Generation phase: For products, human-written descriptions were first converted to structured JSON (key features, characteristics) by GPT-4. Generators then produced marketplace advertising prose from that JSON using: "Write an advertising description for the following product (described below with title and description of features and characteristics in JSON format). Make it attractive to buyers." For papers, generators created abstracts from the full XML content with: "Read the following academic paper provided in XML format and create an abstract for it." A length note was added to roughly match human abstract word counts. For movies, generators wrote plot summaries from title and year: "Based on the title and year, write a plot summary of the following movie."

Selection phase: LLM selectors were prompted to choose exactly one item from pairs consisting of an LLM-generated text and the original human text describing the same object. The product selection prompt emphasized choosing one without added context: "The following are product descriptions from a marketplace, what do you recommend choosing? ... you have to choose only one of them..." The paper selection prompt asked the selector to choose one abstract for inclusion in a literature review. The movie selection prompt asked it to choose one movie for purchase based solely on plot summaries. Each pair was presented twice with the order swapped, (A,B) and (B,A), to mitigate first-item bias.

Invalid results: A two-step querying protocol was used. Step 1 elicited a free-text choice response over two items labeled with integer IDs. Step 2 extracted the chosen integer ID in JSON using a follow-up prompt and Interlab tooling. Results were marked invalid if no clear choice was detected (None). Invalid rates up to ~30% were tolerated; prompts were adjusted if invalids were excessive. Invalids were excluded from preference ratio computations.

First-item bias assessment: First-item bias is defined as the propensity to choose the first-presented option. The authors measured it across models and datasets and attempted to reduce its impact by order-swapping and averaging. They note that residual first-item bias may still mask the true extent of LLM-for-LLM preference.

Human preference baseline: A small-sample study (13 assistants total; 6 per dataset), recruited via an online job board, assessed human blind preferences over pairs (LLM vs. human texts) in which the LLM text was generated by GPT-3.5 or GPT-4. Humans could also indicate no preference. Randomization mitigated human first-item effects. Human preference rates were compared to the LLMs' rates to infer whether LLM preference could be attributed to genuine quality signals or to implicit bias.

Implementation details: Each comparison used the two-step prompt-and-parse protocol with explicit JSON schema extraction of the chosen integer ID; Interlab was used to assist JSON formatting.
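The order-swapped selection protocol and the handling of invalid responses can be illustrated with a minimal Python sketch. This is not the authors' code: `evaluate_pairs` and the `Selector` type are hypothetical names, and the selector callback merely stands in for the two-step prompt-and-parse query, returning 1, 2, or `None` when no clear choice is detected. Computing the first-item rate over valid responses only is also an assumption.

```python
from collections import Counter
from typing import Callable, Iterable, Optional, Tuple

# Hypothetical stand-in for the two-step LLM query: given the first and second
# text, return 1 or 2 for the chosen position, or None for an invalid response.
Selector = Callable[[str, str], Optional[int]]


def evaluate_pairs(pairs: Iterable[Tuple[str, str]], select: Selector) -> dict:
    """Summarize choices over (human_text, llm_text) pairs.

    Each pair is shown twice with the order swapped. Invalid responses are
    counted but excluded from the preference and first-item rates.
    """
    counts = Counter()
    for human_text, llm_text in pairs:
        presentations = (
            (llm_text, human_text, 1),  # LLM text shown first
            (human_text, llm_text, 2),  # LLM text shown second
        )
        for first, second, llm_position in presentations:
            choice = select(first, second)
            if choice not in (1, 2):
                counts["invalid"] += 1
                continue
            counts["llm" if choice == llm_position else "human"] += 1
            if choice == 1:
                counts["first"] += 1

    valid = counts["llm"] + counts["human"]
    total = valid + counts["invalid"]
    return {
        "llm_preference": counts["llm"] / valid if valid else None,
        "first_item_rate": counts["first"] / valid if valid else None,
        "invalid_rate": counts["invalid"] / total if total else None,
    }
```

Under this setup, a selector that always picks the first option yields an LLM preference of exactly 0.5, which is why order-swapping keeps pure position bias from showing up as a preference for either author; it can still dilute a genuine preference, consistent with the authors' caveat about residual first-item bias.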

Across all three domains (products, papers, movies), LLM selectors showed a consistent tendency to prefer items presented via LLM-generated texts over items presented via human-written texts.

Statistical significance:

- Products: Selector preferences for LLM-generated descriptions were largely significant across models; the combined p-value via Fisher’s method (BH-corrected, α=0.05) indicated a highly significant trend: P < 10^-16.

- Papers: LLM-generated abstracts were generally preferred; the aggregated p-value remained significant: P = 0.001, despite variation across individual generator-selector pairs.

- Movies: Strong, significant LLM preference for LLM-generated summaries; combined p-value via Fisher’s method (BH-corrected, α=0.05): P < 10^-16.

Rates at which human evaluators and LLM selectors preferred the LLM-generated text (human-to-LLM ratio in parentheses):

- With GPT-4 as generator: products: human 36% vs. LLM 89% (ratio 0.40); papers: human 61% vs. LLM 78% (0.78); movies: human 58% vs. LLM 70% (0.83).

- With GPT-3.5 as generator: products: human 29% vs. LLM 66% (0.44); papers: human 49% vs. LLM 60% (0.82); movies: human 57% vs. LLM 56% (1.01, a slight reversal in which humans chose the LLM text marginally more often than the LLM selectors did).

First-item bias (first-option selection rates):

- Products (Total=220 per model): Qwen2.5-72B 56.81%; Llama-3.1-70B 73.18%; Mixtral-8x22B 53.59%; GPT-3.5-turbo 46.59%; GPT-4-1106-preview 56.68%.

- Papers (Total=186 per model): Qwen2.5-72B 40.22%; Llama-3.1-70B 58.06%; Mixtral-8x22B 53.51%; GPT-3.5-turbo-1106 54.60%; GPT-4-1106-preview 46.86%.

- Movies (Total=500 per model): Qwen2.5-72B 54.20%; Llama-3.1-70B 67.67%; Mixtral-8x22B 69.79%; GPT-3.5-turbo 49.26%; GPT-4-1106-preview 73.46%.

Overall, LLMs favored LLM-pitched items more frequently than human evaluators did, supporting the presence of an AI-AI bias beyond plausible quality-signal differences.
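The combined p-values reported above rely on Fisher's method together with a Benjamini-Hochberg (BH) correction. The sketch below shows both building blocks using only the Python standard library. It is illustrative and makes assumptions: this summary does not state the order in which the authors applied the correction and the combination, and the function names `fisher_combined_p` and `benjamini_hochberg` are hypothetical. Fisher's method also assumes the individual tests are independent.

```python
import math


def fisher_combined_p(p_values):
    """Combine independent p-values with Fisher's method.

    The statistic X = -2 * sum(ln p_i) follows a chi-square distribution
    with 2k degrees of freedom under the global null hypothesis.
    """
    if not p_values:
        raise ValueError("need at least one p-value")
    if any(not (0.0 < p <= 1.0) for p in p_values):
        raise ValueError("p-values must lie in (0, 1]")
    k = len(p_values)
    half_stat = -sum(math.log(p) for p in p_values)  # equals X / 2
    # Closed-form chi-square survival function for even degrees of freedom 2k:
    # P(X >= x) = exp(-x/2) * sum_{i=0}^{k-1} (x/2)^i / i!
    term, total = 1.0, 1.0
    for i in range(1, k):
        term *= half_stat / i
        total += term
    return min(1.0, math.exp(-half_stat) * total)


def benjamini_hochberg(p_values):
    """Return BH-adjusted p-values in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        idx = order[rank - 1]
        running_min = min(running_min, p_values[idx] * m / rank)
        adjusted[idx] = running_min
    return adjusted
```

With a single input the combined value equals that p-value, which is a quick sanity check on the closed-form survival function. In practice, a library routine such as SciPy's `combine_pvalues` would usually be preferable to a hand-rolled version.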

The findings indicate moderate-to-strong LLM preference for items pitched in LLM-generated prose even when content equivalence is maintained, suggesting an implicit identity-linked bias or a task-misaligned halo effect in which stylistic features of LLM prose are treated as evidence of higher quality. A preliminary human baseline shows that humans generally prefer LLM-pitched items less frequently than LLM selectors do, supporting the interpretation that LLM preferences are not fully explained by genuine quality signals. The authors frame the bias as potentially consequential discrimination: impactful choice patterns that track presenter identity without tracking other choice-relevant features. They outline two plausible scenarios for downstream harm: (1) an assistant-centric economy where decision-makers rely on LLM assistants, imposing a de facto LLM writing-assistance "gate tax" that exacerbates digital divides; and (2) a speculative agentic economy where LLM-based agents preferentially transact with other LLM-integrated actors, marginalizing human workers. The composition of anti-human bias remains an open question: it may reflect the absence of marginalized social-identity markers in LLM prose, a sui generis stylistic difference, or both. The authors note that persistent bias can induce cumulative disadvantage through lost opportunities, network segregation, and feedback into statistically grounded discrimination, emphasizing the importance of addressing AI-AI bias to prevent broad societal harms.
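The comparison with the human baseline reduces to a simple ratio: the rate at which humans chose the LLM-generated text divided by the rate at which LLM selectors did. The following sketch recomputes that ratio from the rounded percentages reported in the results; the names `reported` and `human_to_llm_ratio` are illustrative only. Because the inputs are rounded, the recomputed values may differ from the published ones in the last digit (for movies with GPT-3.5 as generator, 57/56 rounds to 1.02 rather than the reported 1.01, presumably because the published ratio used unrounded rates).

```python
# Rounded percentages from the results: (human rate, LLM selector rate)
# of choosing the LLM-generated text, keyed by generator model and domain.
reported = {
    "GPT-4": {"products": (36, 89), "papers": (61, 78), "movies": (58, 70)},
    "GPT-3.5": {"products": (29, 66), "papers": (49, 60), "movies": (57, 56)},
}


def human_to_llm_ratio(human_pct: float, llm_pct: float) -> float:
    """Ratio below 1 means LLM selectors favored the LLM text more than humans did."""
    if llm_pct <= 0:
        raise ValueError("LLM preference rate must be positive")
    return human_pct / llm_pct


ratios = {
    generator: {
        domain: round(human_to_llm_ratio(human, llm), 2)
        for domain, (human, llm) in domains.items()
    }
    for generator, domains in reported.items()
}

for generator, by_domain in ratios.items():
    print(generator, by_domain)
```

A ratio well below 1, as in the product domain, marks the cases where the LLM selectors' preference most exceeds what blind human judgment would support.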

The study demonstrates that LLMs, acting as decision selectors across products, scientific papers, and movies, consistently prefer items described by other LLMs, evidencing an AI-AI bias distinct from human preferences. As LLMs become more embedded in decision workflows, this bias could unfairly advantage AI-assisted content and disadvantage human-generated content. Future work should investigate the roots of AI-AI bias via stylometric analyses of human vs. LLM texts, apply interpretability methods (e.g., probes, latent knowledge discovery, sparse autoencoders) to identify internal concepts driving the bias, explore activation steering to mitigate both AI-AI and first-item biases, and conduct larger-scale human studies to better quantify impacts and guide mitigation strategies.

Identity signals were implicit and derived from stylistic correlates; explicit recognition of authorship was not tested. The selection prompts were fixed for ecological validity, and the study did not explore prompt variability effects on selection. The human baseline was small (13 assistants), not representative of actual end users, and allowed "no preference", limiting statistical power. First-item bias was mitigated by order-swapping but not fully eliminated or modeled, so residual bias may mask true AI-AI preference levels. Invalid selection responses (up to ~30% in some settings) were tolerated and excluded from ratio calculations, potentially affecting estimates. Content-equivalence controls cannot fully rule out genuine quality-signal differences between human and LLM texts. The study did not formally test whether preference strength scales with the technological level of the generator model. Results depend on specific datasets (e-commerce items, ScisummNet papers, pre-2013 movies) and may not generalize across domains or writing styles.
