Chemistry

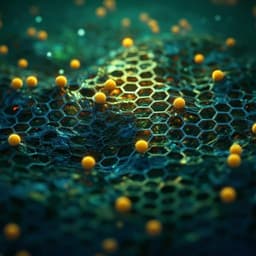

TransPolymer: a Transformer-based language model for polymer property predictions

C. Xu, Y. Wang, et al.

Changwen Xu, Yuyang Wang, and Amir Barati Farimani present TransPolymer, a Transformer-based language model for predicting polymer properties. Their approach highlights the role of self-attention in capturing structure-property relationships, a step toward rational polymer design.
