
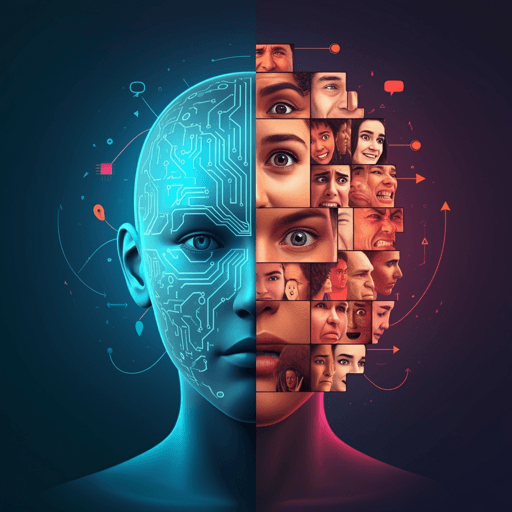

Evaluating the capacity of large language models to interpret emotions in images

H. Alrasheed, A. Alghihab, et al.

This study evaluates whether GPT-4 can streamline emotional stimulus selection by rating visual images on valence and arousal. Under zero-shot conditions, its ratings closely approximate human judgments, although it has more difficulty with subtler emotional cues. The research was conducted by Hend Alrasheed, Adwa Alghihab, Alex Pentland, and Sharifa Alghowinem.
